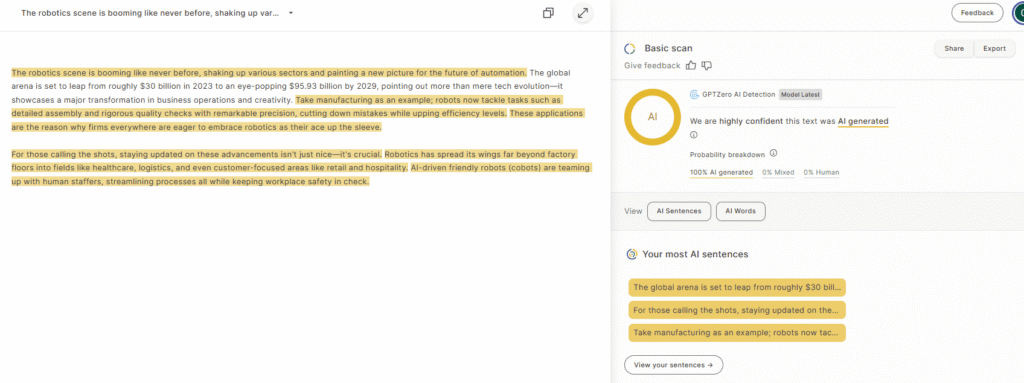

You generate a solid piece of AI-written content, only to see it flagged as “99% AI-generated.”

That result doesn’t necessarily mean your writing is bad. It simply means your text looks too predictable or too uniform to the algorithms AI detectors use.

In this article, you’ll learn what perplexity and burstiness actually are and how to improve both in your writing.

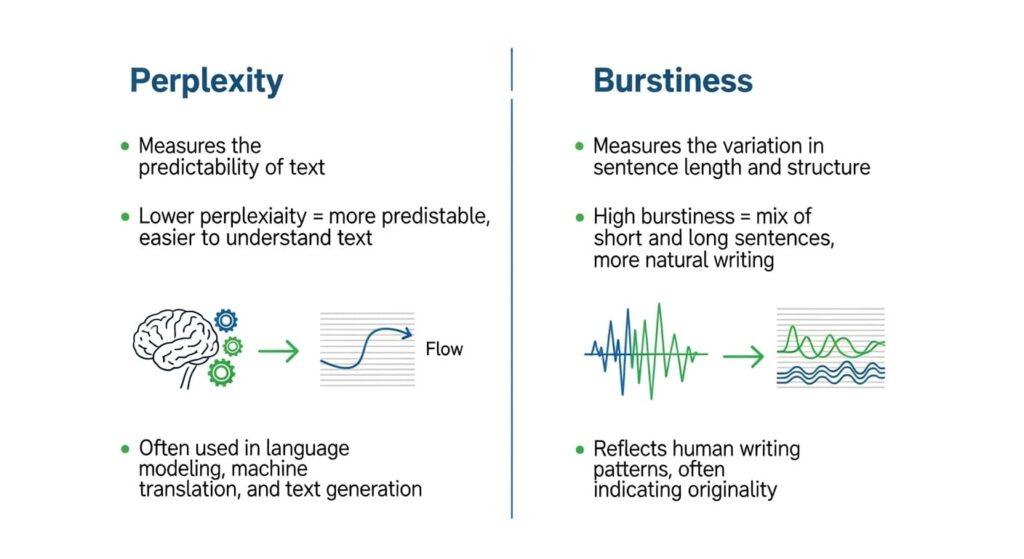

What Are Perplexity and Burstiness?

These metrics might sound technical, but you can grasp them in practical terms.

Perplexity

Perplexity is a measure of how well a language model predicts what comes next. In other words: given a chunk of text, how “surprised” would the model be by the next word?

- If the model can reliably guess the next word (it has seen many similar patterns), that’s low perplexity.

- If the next word is unusual or unexpected in context, that’s high perplexity.

Mathematically, perplexity is tied to probability and entropy: you average the negative log probabilities of the actual words (according to the model’s internal distribution), then exponentiate the result. A model that’s “good” on a dataset assigns high probabilities to the real words, yielding low perplexity.
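Concretely, perplexity is the exponential of the average negative log probability per token. If a model assigns probabilities 0.5, 0.25, and 0.125 to three actual tokens, the average is (ln 2 + ln 4 + ln 8) / 3 = 2 ln 2, so perplexity is e^(2 ln 2) = 4: the model was as uncertain as if it were picking uniformly among four words. The minimal Python sketch below reproduces that arithmetic; the probabilities are made up, since a real detector would read them from a language model.

```python
import math
from typing import Sequence

def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity = exp(-(1/N) * sum(log p_i)), where p_i is the probability
    the model assigned to the i-th actual token given the tokens before it."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

# Hypothetical per-token probabilities (not taken from a real model).
print(round(perplexity([0.5, 0.25, 0.125]), 6))  # 4.0
```

Higher probabilities on the actual tokens push the result toward 1 (very predictable text); lower probabilities push it up.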

In practice:

- A sentence like “The cat sat on the mat” has relatively low perplexity, because those words and structure are common.

- A sentence like “Yesterday’s moonlight rippled through a Venetian crypt” would push perplexity higher: the word combination is less predictable and the structure less common.

Burstiness

If perplexity is about word-level surprise, burstiness is about how that surprise (and writing patterns) vary across a text.

Humans tend to “burst”: we mix short and long sentences, change rhythm, shift structure. We don’t write in one uniform pattern. In a paragraph, we might have one punchy line, then a more elaborate thought, then a variation. That fluctuation is burstiness.

AI systems, by contrast, often lean toward more uniform generation: sentence lengths and complexity tend to stay in a narrower band. That uniformity reduces burstiness.

When detectors measure burstiness, they often look at how the perplexity scores (or other internal “predictability” measures) vary sentence to sentence. If variation is low (i.e. each sentence is similarly predictable), the text is less bursty. If variation is higher—some sentences spike in unpredictability, others are tame—that signals burstiness.

They might also factor in variation in sentence lengths, structural complexity, clauses, subclauses, and so on. The more you mix your sentence styles, the more bursty your text becomes.

How Do AI Detectors Measure Perplexity & Burstiness?

To demystify how AI detectors work, let’s dig into their typical workflow around perplexity and burstiness.

Perplexity Measurement in Detectors

Detectors start by asking: “Given this text, how well would a language model predict each word?” That’s the core of perplexity.

- A detector often uses a language model (sometimes the same kind of model as text generators, or a smaller open model) to compute token probabilities. For each word or token in your text, the detector calculates how likely that word is, given the preceding context.

- It averages the negative log probabilities across tokens (some tools use a variant of this aggregate), then exponentiates the result to get a perplexity score. A lower perplexity means the model found the text more predictable.

- Some detectors compute perplexity at the sentence level—so each sentence has its perplexity—and then derive statistics (mean, variance) across the document.

- GPTZero, for instance, uses a statistical layer combining perplexity (per sentence) and burstiness, interpreting perplexity per sentence as “how likely an AI model would choose exactly those words in that sentence.”

- Detectors often have internal thresholds or ranges: a certain perplexity value (or zone) is more “human-likely,” another is more “AI-likely.” But there’s no universal scale—perplexity depends heavily on which language model they use.
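The sentence-level workflow above can be sketched in a few lines. As a stand-in assumption, a tiny bigram model with add-one (Laplace) smoothing replaces the neural language model a real detector would use; the three-sentence training corpus, the naive sentence splitter, and the function names (`train_bigram`, `sentence_perplexity`, `document_perplexities`) are all hypothetical. The absolute numbers mean nothing outside this toy; only the relative ordering illustrates the idea.

```python
import math
import re
import statistics
from collections import Counter

def tokenize(sentence):
    # Lowercase and keep word-like tokens (letters, digits, apostrophes).
    return re.findall(r"[a-z0-9']+", sentence.lower())

def train_bigram(corpus):
    """Count contexts and bigrams, padding each sentence with <s> ... </s>."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for sentence in corpus:
        tokens = ["<s>"] + tokenize(sentence) + ["</s>"]
        vocab.update(tokens[1:])                      # every token that can be predicted
        contexts.update(tokens[:-1])                  # every token used as a context
        bigrams.update(zip(tokens[:-1], tokens[1:]))  # (previous, next) pairs
    return contexts, bigrams, vocab

def sentence_perplexity(sentence, model):
    contexts, bigrams, vocab = model
    v = len(vocab) + 1  # +1 reserves probability mass for unseen words
    tokens = ["<s>"] + tokenize(sentence) + ["</s>"]
    pairs = list(zip(tokens[:-1], tokens[1:]))
    # Add-one smoothing: P(next | prev) = (count(prev, next) + 1) / (count(prev) + V)
    log_prob = sum(math.log((bigrams[pair] + 1) / (contexts[pair[0]] + v)) for pair in pairs)
    return math.exp(-log_prob / len(pairs))

def document_perplexities(text, model):
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    scores = [sentence_perplexity(s, model) for s in sentences]
    return scores, statistics.fmean(scores), statistics.pstdev(scores)

model = train_bigram([
    "The cat sat on the mat.",
    "The dog sat on the rug.",
    "The cat sat on the rug.",
])
scores, mean, spread = document_perplexities(
    "The cat sat on the mat. Yesterday's moonlight rippled through a Venetian crypt.",
    model,
)
print([round(s, 2) for s in scores], round(mean, 2), round(spread, 2))
```

With this toy corpus, the familiar sentence scores roughly 4 and the unusual one roughly 9. The mean and standard deviation of such per-sentence scores are the raw material for the document-level statistics described above.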

Burstiness Measurement in Detectors

- After computing sentence-level perplexities (or per-chunk scores), detectors assess variance or fluctuation in those scores. If all sentences cluster around similar perplexity, that suggests low burstiness. If some sentences are much more “surprising” than others, that indicates higher burstiness.

- Simply put: burstiness measures how uneven the text is in style, complexity, and word-choice unpredictability.

- Some detectors also use more superficial proxies: variation in sentence length, complexity (number of clauses), syntactic structure shifts. If every sentence is nearly the same length and structure, that’s a sign of uniformity (low burstiness).

- Burstiness calculation might include statistical measures like standard deviation, coefficient of variation, or percentile spreads of perplexity scores across sentences.
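A minimal sketch of those summary statistics follows, assuming you already have one perplexity score per sentence. The score lists are invented for illustration, and most detectors do not publish which statistic or thresholds they use, so treat this as a generic example rather than any particular tool’s formula.

```python
import statistics

def burstiness_stats(scores):
    """Summarize how much per-sentence scores fluctuate across a document."""
    if len(scores) < 2:
        raise ValueError("need at least two sentence scores")
    mean = statistics.fmean(scores)
    sd = statistics.pstdev(scores)   # population standard deviation
    cv = sd / mean if mean else 0.0  # coefficient of variation: spread relative to the mean
    deciles = statistics.quantiles(scores, n=10, method="inclusive")
    return {
        "mean": mean,
        "stdev": sd,
        "cv": cv,
        "p10_p90_spread": deciles[-1] - deciles[0],  # 90th minus 10th percentile
    }

# Hypothetical per-sentence perplexities, for illustration only.
uniform = [21.0, 22.5, 20.8, 21.7, 22.1]
bursty = [9.0, 48.0, 15.5, 62.0, 12.0]
print(round(burstiness_stats(uniform)["cv"], 3), round(burstiness_stats(bursty)["cv"], 3))
```

The coefficient of variation divides the spread by the mean, which makes it less sensitive to the overall perplexity scale of whichever model produced the scores.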

How to Improve Perplexity & Burstiness of Your AI Text

Here are four actionable techniques for naturally raising the perplexity and burstiness of your text.

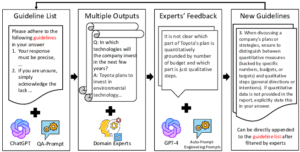

Add More Information to Your Prompt (Richer Context & Constraints)

One way to nudge the AI toward higher perplexity and burstiness is to give it more context, examples, constraints, or nuance in your prompt. When the model “knows more,” it’s less likely to fall back on bland or uniform phrasing.

- Include background, nuance, or edge cases in your prompt. The more details you supply, the more room the model has to vary its output.

- Use few-shot prompting: include examples that themselves vary (some short, some long, with differing complexity). These examples “teach” the model the pattern you want.
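One way to assemble such a prompt is sketched below. The helper name `build_few_shot_prompt`, the instruction wording, and the sample passages are hypothetical; the point is that the background goes in explicitly and the examples themselves vary in sentence length and complexity.

```python
from typing import List

def build_few_shot_prompt(task: str, context: str, examples: List[str]) -> str:
    """Assemble a prompt with background, constraints, and varied style examples."""
    parts = [
        f"Task: {task}",
        f"Background, nuances, and edge cases: {context}",
        "Match the rhythm of the examples below: they mix short and long sentences.",
    ]
    for i, example in enumerate(examples, start=1):
        parts.append(f"Example {i}:\n{example}")
    parts.append("Now write the requested text.")
    return "\n\n".join(parts)

prompt = build_few_shot_prompt(
    task="Explain why invoices get rejected by our billing system.",
    context="Readers are small-business owners; mention the VAT-number edge case.",
    examples=[
        "It failed. Again. The third time that week, the invoice bounced back with the same cryptic code.",
        "Most rejections trace back to a single missing field, which is frustrating, "
        "because the form never tells you which one.",
    ],
)
print(prompt)
```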

Outline Your Text Before Generating It

Instead of asking the AI to “write a 1,000-word article about X,” break the work into two steps:

- Ask for an outline or structure first (section headings, bullet points).

- Then ask it to expand each section, with instructions to vary sentence length, tone, and complexity.

This two-step approach gives you control over macro structure and ensures each section can carry burstiness. If you ask the model to produce everything in one shot, it may default to safer, more uniform patterns.
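The two-step flow can be sketched as follows. `generate` is a placeholder for whatever model call you use (no specific API is assumed), the prompt wording is only an example, and `fake_generate` is a hypothetical stub so the sketch runs without a model.

```python
from typing import Callable, List

def outline_then_expand(topic: str, generate: Callable[[str], str]) -> str:
    """Two-step generation: ask for an outline, then expand each heading separately."""
    outline = generate(
        f"Write an outline for an article about {topic}. "
        "Return one section heading per line, nothing else."
    )
    # Strip bullet or heading markers the model may add in front of each line.
    headings: List[str] = [line.lstrip("-*# ").strip() for line in outline.splitlines() if line.strip()]
    sections = []
    for heading in headings:
        body = generate(
            f"Write the section '{heading}' of an article about {topic}. "
            "Vary sentence length, tone, and complexity: mix short, punchy sentences "
            "with longer ones, and include at least one question or aside."
        )
        sections.append(f"{heading}\n\n{body}")
    return "\n\n".join(sections)

calls = []

def fake_generate(prompt: str) -> str:
    # Stand-in for a real model call; returns canned text.
    calls.append(prompt)
    return "- Why detectors flag text\n- Fixing the rhythm" if "outline" in prompt else "Section text."

print(outline_then_expand("AI detection", fake_generate))
```

Because each section gets its own call with its own variation instructions, you can also rerun a single section that came out too flat without regenerating the whole piece.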

Diversify AI Formulations & Vocabulary

If your AI always picks the most probable words or phrasing, it lowers perplexity too much and flattens the style. To prevent that:

- Vary your vocabulary with synonyms instead of repeating the same words.

- Ask for alternate phrasings: e.g. “Rewrite one sentence in a different style, or add a parenthetical variation.”

- Use controlled randomness: include instructions like “once in the piece, take a stylistic detour (e.g. rhetorical question, idiom, anecdote).”

- Use diverse prompts: split your original prompt into several versions with alternate wording, feed them separately, and then combine outputs.
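Here is one way to organize the diverse-prompts approach: send each reworded version of the prompt separately, append the stylistic-detour instruction, and keep the drafts side by side so you can merge the best parts by hand. `generate` stands for any model call you supply, and the variants and the `DETOUR` wording are illustrative assumptions.

```python
from typing import Callable, Dict, List

# Hypothetical wording for the "controlled randomness" instruction.
DETOUR = "Once in the piece, take a stylistic detour (e.g. a rhetorical question, an idiom, or a short anecdote)."

def run_prompt_variants(variants: List[str], generate: Callable[[str], str]) -> Dict[str, str]:
    """Send each reworded prompt separately; return drafts keyed by prompt for manual merging."""
    return {v: generate(f"{v} {DETOUR}") for v in variants}

variants = [
    "Explain how AI detectors use perplexity.",
    "Walk a skeptical blogger through why detectors care about predictable wording.",
    "In plain terms, describe what makes text look machine-written to a detector.",
]
# A stub stands in for the model so the sketch runs on its own.
drafts = run_prompt_variants(variants, lambda p: f"[draft for: {p[:30]}...]")
for prompt, draft in drafts.items():
    print(prompt, "->", draft)
```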

Diversify AI Writing Structure (Sentence & Paragraph Variation)

To boost burstiness, you need variation in structure, not just vocabulary. Here’s how:

- Alternate sentence lengths: mix short, punchy sentences with long, complex ones.

- Change sentence openings: sometimes lead with clauses (“When we consider …”), sometimes with adverbs (“Quickly, …”), sometimes with questions or asides.

- Vary sentence types: declarative, interrogative, exclamatory, conditional, parenthetical.

- Use rhetorical devices: lists, bullets, parentheses, rhetorical questions, and analogies sprinkled throughout break the monotony.

- Split or merge sentences: after the AI generates, identify sentences that are too long or too uniform, and break them or combine them strategically.

- Paragraph-level shifts: alternate between dense explanation paragraphs and lighter narrative or example parts.
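For the split-or-merge step, a small script can point out where a draft is too regular. This sketch is an assumption rather than a standard tool: it flags windows of three consecutive sentences whose word counts differ by at most three words, and counts sentence openers used more than once. Both thresholds are arbitrary and worth tuning. It is applied here to the low-burstiness product description from the examples section.

```python
import re
from collections import Counter

def structure_report(text: str, window: int = 3, tolerance: int = 3):
    """Flag runs of similar-length sentences and overused sentence openers."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]  # word count per sentence
    uniform_runs = []
    for i in range(len(lengths) - window + 1):
        chunk = lengths[i:i + window]
        if max(chunk) - min(chunk) <= tolerance:
            uniform_runs.append(i)  # index of the first sentence in a uniform run
    openers = Counter(s.split()[0].strip('"“”,').lower() for s in sentences)
    repeated = {word: n for word, n in openers.items() if n > 1}
    return lengths, uniform_runs, repeated

sample = (
    "The company offers a product that helps users manage tasks. "
    "It has features for reminders, collaboration, and scheduling. "
    "Users can log in securely, share files, and receive notifications. "
    "The interface is intuitive and efficient. "
    "The product aims to improve productivity and organization."
)
print(structure_report(sample))
```

On that passage it reports one uniform run starting at the first sentence and flags “The” as an opener used three times, which points at the sentences worth splitting, merging, or reopening differently.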

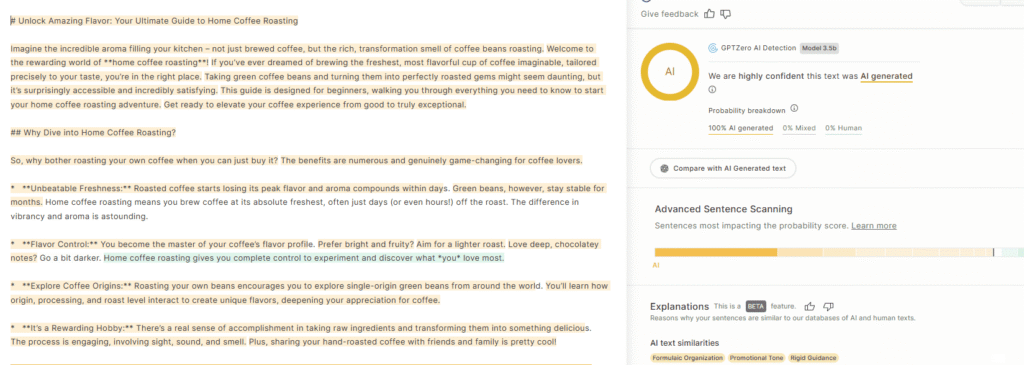

Examples of Low & High Perplexity / Burstiness Texts

Looking at concrete examples is the most effective way to see how perplexity and burstiness show up in writing.

Low Perplexity + Low Burstiness (Very “AI-Looking”)

Example:

The company offers a product that helps users manage tasks. It has features for reminders, collaboration, and scheduling. Users can log in securely, share files, and receive notifications. The interface is intuitive and efficient. The product aims to improve productivity and organization.

Why this is low/low:

- Perplexity is low because almost every word and phrase is common and predictable in business writing.

- Burstiness is low because the sentences are of similar length and structure, and three of the five open with “The.” There’s no variation or sudden shift.

- Detectors might flag such text because it lacks “spikes” in unpredictability or variety.

High Perplexity + High Burstiness (Very Human-Like)

Example:

When GPT-3 was released, people tried it through AI-writing services like Jasper or Copy AI. They fell victim, like me, to the same hype cycle brought on by every new technological innovation. Sounds very exciting at first, but redundant when further tested.

And that’s approximately what you could say about GPT-3 in retrospect. As impressive as GPT-3’s output was, there wasn’t much a professional could take from it. GPT-3’s writing was inconsistent: it made up facts, names, or numbers and couldn’t even sustain an argument longer than one sentence. In short, there wasn’t really anything to worry about.

Why this is high/high:

- Perplexity is higher because there are more concrete details and less predictable word choices and sentence sequences.

- Burstiness is high because you see variation: a long opening sentence, a fragment (“Sounds very exciting at first…”), a long descriptive sentence, and a short, punchy conclusion. The rhythm keeps changing.
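As a rough check, the sketch below computes the coefficient of variation of sentence lengths (standard deviation divided by mean, in words) for both examples. This is only the superficial sentence-length proxy mentioned earlier, not a detector’s perplexity-based score, and whitespace word counting with a naive sentence splitter is a simplifying assumption.

```python
import re
import statistics

def sentence_length_cv(text):
    """Standard deviation of sentence lengths (in words) divided by their mean."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    return statistics.pstdev(lengths) / statistics.fmean(lengths)

low_example = (
    "The company offers a product that helps users manage tasks. "
    "It has features for reminders, collaboration, and scheduling. "
    "Users can log in securely, share files, and receive notifications. "
    "The interface is intuitive and efficient. "
    "The product aims to improve productivity and organization."
)
high_example = (
    "When GPT-3 was released, people tried it through AI-writing services like Jasper or Copy AI. "
    "They fell victim, like me, to the same hype cycle brought on by every new technological innovation. "
    "Sounds very exciting at first, but redundant when further tested.\n\n"
    "And that's approximately what you could say about GPT-3 in retrospect. "
    "As impressive as GPT-3's output was, there wasn't much a professional could take from it. "
    "GPT-3's writing was inconsistent: it made up facts, names, or numbers "
    "and couldn't even sustain an argument longer than one sentence. "
    "In short, there wasn't really anything to worry about."
)
print(f"low/low:   {sentence_length_cv(low_example):.2f}")
print(f"high/high: {sentence_length_cv(high_example):.2f}")
```

The human-like passage comes out with the larger value. A handful of sentences is a tiny sample, though, and sentence length is only one of the signals described in this article.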