Originality.ai has become one of the most widely discussed AI detectors. It promises to tell you whether a piece of writing looks AI-generated.

But it’s not perfect, and if you misunderstand how it works, you risk overreacting to the numbers.

In this review, you’ll get a clear, practical look at whether Originality.ai deserves a place in your workflow.


How the Originality.ai Detection Algorithm Works

Originality.ai describes its approach as a model structure inspired by architectures like ELECTRA: a generator model simulates text generation, while a discriminator model learns to distinguish the generated text from real human text.

- They begin by gathering large-scale datasets: millions of human-written text samples from diverse sources, and millions of samples of AI-generated text across multiple models.

- The generator produces varied AI-style text (using techniques like nucleus sampling and temperature variation) so that its outputs are harder to spot; the discriminator is then trained on pairs of human and AI text to learn the boundary.

- Fine-tuning continues with newer models as they appear, so the system evolves as AI writing improves.
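To make the two sampling techniques named above concrete, here is a minimal Python sketch of temperature scaling and nucleus (top-p) sampling over a toy set of token scores. It illustrates the general techniques only; the function names and the example logits are assumptions for illustration, and nothing here reflects Originality.ai's actual generator.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nucleus_filter(probs, top_p=0.9):
    """Keep the smallest set of tokens whose cumulative probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    # Renormalise so the surviving tokens form a valid distribution.
    return {i: probs[i] / mass for i in kept}

def sample_token(logits, temperature=1.0, top_p=0.9, rng=random):
    """Sample one token index after temperature scaling and nucleus filtering."""
    candidates = nucleus_filter(softmax_with_temperature(logits, temperature), top_p)
    ids = list(candidates)
    weights = [candidates[i] for i in ids]
    return rng.choices(ids, weights=weights, k=1)[0]

# Hypothetical scores for four candidate tokens.
toy_logits = [2.0, 1.0, 0.1, -1.0]
token_id = sample_token(toy_logits, temperature=0.8, top_p=0.9)
```

Varying `temperature` and `top_p` across runs is one way a generator can produce stylistically different samples from the same model, which is the kind of variety a discriminator needs to see during training.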

Feature extraction: text analysis and pattern recognition

Once the model is trained, Originality.ai extracts features from an uploaded text to estimate the probability of “AI-generated” vs “human-written”. Key signals include:

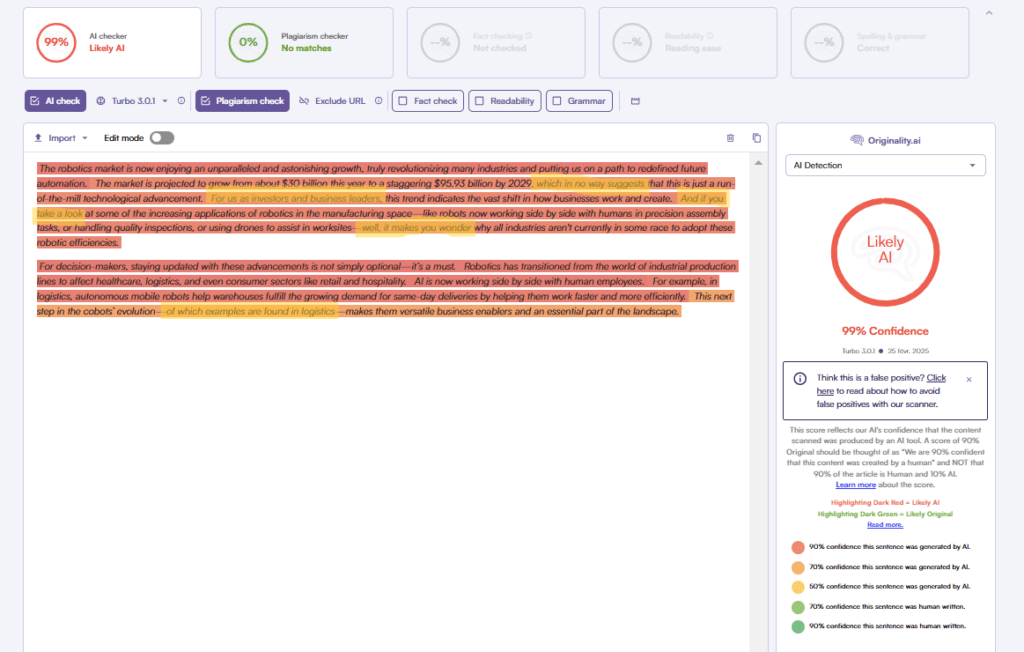

- Token-level and sentence-level patterns: AI-generated text often exhibits more uniform sentence lengths, consistent vocabulary and fewer unusual phrases. Originality.ai notes that its model marks high-risk segments with sentence-level “dark-red” highlights.

- Lexical diversity and syntactic variation: Human authors typically vary sentence length and include colloquialisms or slight errors, while AI often writes in a way that is “too clean” or “balanced”. Originality.ai looks for that burstiness, or the lack of it.

- Semantic/structural coherence: The tool checks whether the text flows naturally, uses transitions in a human-like way, and avoids formulaic structure. The more strongly the text resembles known AI patterns, the higher the “AI likelihood” score.

- Adversarial robustness signals: The model is trained on paraphrased, rewritten, or disguised AI texts so that it can detect moderately masked AI content. Originality.ai says it deliberately built its training data on “diversified sampling from different models and generation methods”.
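Two of the signals above, burstiness and lexical diversity, are easy to approximate by hand. The Python sketch below uses simple stand-ins: the coefficient of variation of sentence length for burstiness, and the type-token ratio for lexical diversity. These proxies and the function names are assumptions chosen for illustration; Originality.ai's real features are learned by its model and are not published in this form.

```python
import re
import statistics

def sentence_lengths(text):
    """Word count of each sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (std / mean); 0 means uniform."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

def type_token_ratio(text):
    """Share of distinct words among all words; higher means more varied vocabulary."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "No. The storm rolled in over the hills before anyone had noticed the sky. We ran."

print(burstiness(uniform), burstiness(varied))
print(type_token_ratio(uniform), type_token_ratio(varied))
```

The uniform sample scores zero on burstiness because every sentence has the same length, while the varied sample scores well above zero. A real detector would treat numbers like these as weak evidence at best, since a careful human writer can also produce very even prose.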

Originality.ai accuracy on the RAID benchmark

If you’re going to pay for an AI detector, you need more than marketing claims. The RAID benchmark gives you exactly that for Originality.ai.

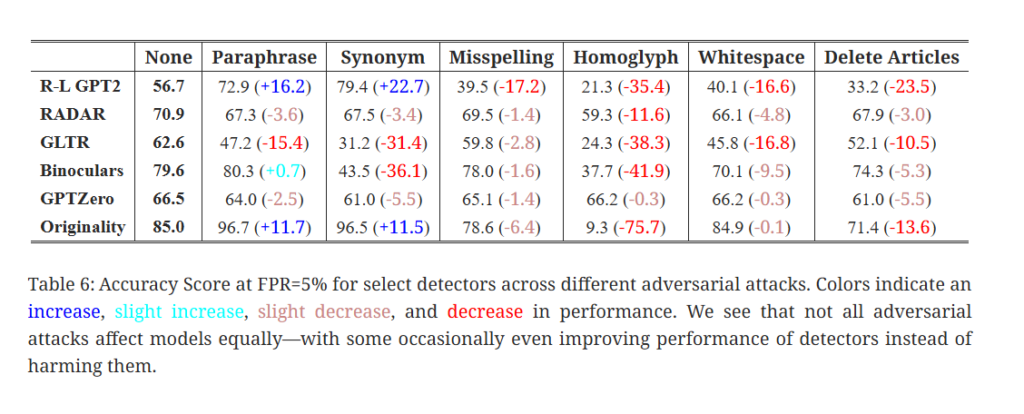

RAID (“Robust AI Detection”) is a large, independent benchmark built by researchers from Penn, UCL, King’s College London and CMU to stress-test AI detectors. It includes:

- Over 6 million texts

- 11 different LLMs (ChatGPT, GPT-4, Llama, etc.)

- 8 domains (news, books, abstracts, Reddit, Wikipedia, etc.)

- 11 adversarial attacks (paraphrasing, misspellings, homoglyph tricks, etc.)

Accuracy rate: how often does Originality.ai catch AI text?

On RAID, Originality.ai’s detector:

- Achieves ~85% accuracy on the base dataset (standard AI vs human texts) at a 5% false-positive threshold.

- Achieves ~96.7% accuracy on paraphrased AI content, far above the average of other detectors (around 59% in one synthesis of RAID results).

- Delivers an overall average of ~85% across all 11 models, which was the highest composite score among the evaluated detectors.

Robustness to adversarial attacks

RAID is not just a simple “clean” dataset; it deliberately tests how detectors behave when someone tries to hide AI use. Here, Originality.ai does unusually well:

- It ranks 1st in 9 out of 11 adversarial attack tests, and 2nd in another, according to both Originality’s own summary and independent synthesis (including our Intellectualead review).

- Across the 8 content domains, it ranks 1st in 5 of them.

- On paraphrased content, it maintains 96.7% accuracy, while many other detectors fall sharply.

False-positive rates: how often does it wrongly accuse humans?

RAID normalises detectors at a 5% false-positive rate, meaning each tool is tuned so that about 1 in 20 human texts would be misclassified as AI on the benchmark.

Other peer-reviewed work in academic settings has found similar patterns: high sensitivity (catching most AI) with specificities often around 95–98%, which corresponds to 2–5% false positives depending on the threshold chosen.
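The relationship between threshold, false-positive rate and specificity can be sketched in a few lines of Python. The example below picks a score threshold so that at most 5% of a set of human texts are flagged, then measures how many AI texts that threshold catches. All scores are made-up numbers and the function names are hypothetical; this is an illustration of the calibration idea, not RAID's evaluation code.

```python
import math

def threshold_for_fpr(human_scores, target_fpr=0.05):
    """Threshold such that at most target_fpr of human texts score strictly above it."""
    if not 0 <= target_fpr < 1:
        raise ValueError("target_fpr must be in [0, 1)")
    ranked = sorted(human_scores)
    allowed = math.floor(target_fpr * len(ranked))  # human texts we may wrongly flag
    return ranked[len(ranked) - allowed - 1]

def flag_rate(scores, threshold):
    """Fraction of texts whose AI score is strictly above the threshold."""
    return sum(s > threshold for s in scores) / len(scores)

def expected_false_flags(n_human_texts, specificity):
    """False-positive rate is 1 - specificity; scale it by the number of human texts."""
    return n_human_texts * (1 - specificity)

# Made-up detector scores (0 = looks human, 1 = looks AI).
human_scores = [i / 20 for i in range(20)]
ai_scores = [0.50, 0.92, 0.97, 0.99]

threshold = threshold_for_fpr(human_scores, target_fpr=0.05)
print(threshold, flag_rate(human_scores, threshold), flag_rate(ai_scores, threshold))
print(expected_false_flags(200, specificity=0.95))
```

At 95% specificity, a reviewer who scans 200 genuinely human-written texts should expect roughly 10 of them to be flagged, which is why a single high score is not proof on its own.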

Originality.ai Pricing

Originality.ai offers a mix of subscription plans, pay-as-you-go credits, and enterprise tiering. This flexibility means you can match cost to your actual usage and team size.

- Subscription (Pro) Plan: For individual users or small teams who check regularly, the Pro plan is listed at US $14.95/month for 2,000 monthly credits. When billed yearly, this drops to US $12.95/month (≈ US $155.40/year).

- Pay-As-You-Go Plan: For irregular users, there’s a credits-only model. One review cites US $0.01 per credit, where each credit covers 100 words of scanning for AI or plagiarism.

- Enterprise Plan: For agencies, large publishers or teams needing API access, bulk credits, site-wide scans and team management, Originality.ai lists an Enterprise tier at US $136.58/month (when billed annually) covering 15,000 credits/month and full enterprise features.

What Does a “Credit” Cover?

- With the subscription plan, you get 2,000 credits/month. One credit typically covers 100 words scanned when checking for AI generation or plagiarism.

- If you’re uploading large documents (e.g., 3,000 words), you’d use approximately 30 credits.

- In pay-as-you-go mode, you buy credits as needed — useful if you only occasionally run checks. One review gives example credit packages: 2,000 credits for US $20, 10,000 credits for US $100, up to 50,000 credits for US $500.
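The credit arithmetic above can be checked with a short Python sketch. It uses the figures quoted in this review (100 words per credit, US $0.01 per pay-as-you-go credit, 2,000 credits on the Pro plan). Rounding a partial block of 100 words up to a full credit is an assumption, since the review does not say how Originality.ai handles remainders, and the function names are illustrative.

```python
import math

WORDS_PER_CREDIT = 100        # figure quoted in this review
PAYG_PRICE_PER_CREDIT = 0.01  # US dollars, pay-as-you-go figure quoted in this review

def credits_needed(word_count):
    """Credits for one scan; assumes a partial block of 100 words costs a full credit."""
    return math.ceil(word_count / WORDS_PER_CREDIT)

def payg_cost(credits):
    """Pay-as-you-go cost in US dollars for a number of credits."""
    return credits * PAYG_PRICE_PER_CREDIT

def scans_per_month(monthly_credits, words_per_document):
    """How many documents of a given length fit into a monthly credit allowance."""
    return monthly_credits // credits_needed(words_per_document)

print(credits_needed(3000))
print(payg_cost(2000))
print(scans_per_month(2000, 3000))
```

Under these assumptions, a 3,000-word document costs 30 credits, so the Pro plan's 2,000 monthly credits cover 66 such documents.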

Is Originality.ai Really Worth It?

Short answer: yes, if you treat it as a smart assistant—not as a judge and jury.

Originality.ai can genuinely help you in two big ways:

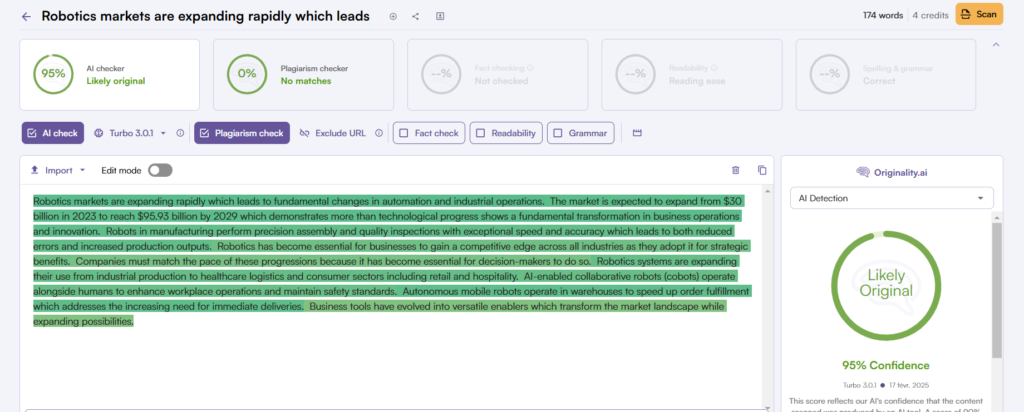

- Making your own content more human and trustworthy.

- Giving you signals that AI was likely used in a text you’re reviewing.

Here’s what it can realistically do:

- Give you a probability score that a text is AI-generated.

- Highlight specific sentences or sections that exhibit AI-like patterns.

- Combine that with plagiarism results to show if someone copied or lazily rephrased existing content.

This is enough to say:

“This draft looks heavily AI-generated; I should review it more closely, ask questions, and maybe request a rewrite.”

It is not enough to say:

“Because Originality.ai says 92% AI, I can prove you cheated / lied / plagiarised.”

Even on strong benchmarks like RAID, Originality.ai is still a probabilistic model: it can be very good, but not perfect. That means:

- Some AI-assisted texts will slip through as “human”.

- Some legitimately human texts (especially very clean, well-structured ones) may occasionally be flagged as AI.

So you should treat the results as:

- Signals, not verdicts.

- A reason to dig deeper, not a reason to jump to accusations.