Mistral is the French AI startup that stands almost shoulder-to-shoulder with the world’s biggest labs.

But is it actually any good? Can Mistral models really compete with the latest frontier models?

This article takes you straight into those questions.

How good are Mistral models compared to frontier models?

Mistral released Medium 3.1 in August 2025 and positioned it as a frontier-class, multimodal model aimed at enterprise deployment (cloud, hybrid, or in-VPC).

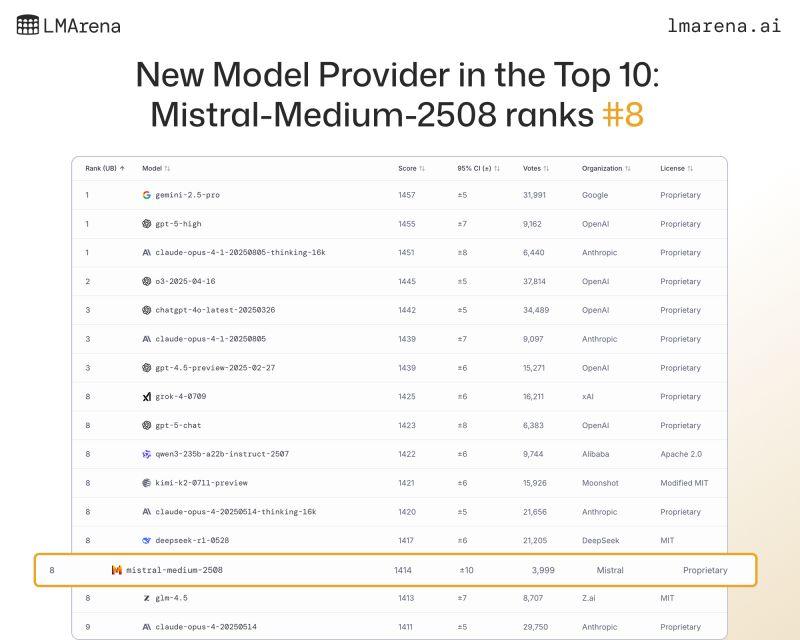

If you judge models the way real users do (side-by-side and blind), LMArena (formerly LMSYS Chatbot Arena) is still one of the most trusted signals. On the official Text Arena leaderboard (overall, default settings), Mistral Medium 3.1 appears as “mistral-medium-2508” and sits around #18, with a score of ≈ 1406 from ≈ 23.8k votes (last update: Oct 16, 2025). The very top slots are dominated by Gemini 2.5 Pro, Claude Sonnet/Opus, and the latest OpenAI models.

That places Mistral Medium 3.1 in the top 20 club when judged by broad human preference across tasks.

On OpenRouter’s usage leaderboard, which reflects what developers actually run, Mistral as an organization holds roughly #9 by lifetime token share (~2.0%), behind xAI, Google, Anthropic, OpenAI and others. In the model-level “top” lists, Mistral Medium 3.1 doesn’t appear in the overall top-10 by tokens at the moment.

Beyond crowdsourced preference and usage, you should also look at independent benchmarks that stress knowledge and multimodality. Mistral Medium 3.1 is at 74.4% on MMLU-Pro (a tougher successor to classic MMLU) and ~63% on MMMU (multimodal reasoning).

So Mistral might not surpass the frontier models of OpenAI, Google, and Anthropic, but its latest models are still a solid choice for professionals. Mistral Medium 3.1 itself is proprietary rather than open-weight, but Mistral’s open-weight releases (such as Mistral Small) consistently rank among the best open LLMs. Mistral’s models are also very cost-friendly compared with these frontier models.

What are the features of Mistral’s chatbot (Le Chat)?

Le Chat is Mistral’s assistant for day-to-day work. It is available to individuals and as Le Chat Enterprise for teams. Here is what you can actually do with it and how each feature helps your workflow.

1) Work with focused “agents,” collaborate in a live canvas, and share chats

Inside Le Chat you can pick or build agents tuned for common tasks. You can also switch to Canvas, an interactive editor where you co-write and iterate with the model in real time. When you need to hand work to a teammate, use Chat Sharing to pass along context without copy-pasting. These are first-party features documented in Mistral’s help center.

2) Attach files for real document and image understanding

You can drop large PDFs and images into a chat and ask for summaries, table extraction, figure interpretation, or quick comparisons. This is not a hack; Mistral ships it as a core capability and calls out graphs, tables, diagrams, formulae, and equations as supported content types. In practice, that means faster policy reviews, RFP analysis, or API spec digestion.

3) Analyze data with a built-in code interpreter

Le Chat includes a Code interpreter for data work. You can upload CSVs or spreadsheets, clean and transform them, run basic statistics, and generate visualizations inside the session. If you publish technical or product content, this is an easy way to ground your draft in real numbers before you write.

4) Keep long-running context with “Memories”

Le Chat’s Memories feature lets the assistant remember prior preferences and references. Mistral highlights user control over what is stored and claims a much larger memory capacity for paid users compared with competitors. This matters when you want the model to remember voice rules, glossary terms, or a dataset you keep revisiting.

5) Connect to the tools you already use

Mistral has added Connection Partners that let Le Chat pull from and act on external systems. Current examples include Atlassian, GitHub, Stripe, and Box, with Salesforce, Snowflake, and Databricks listed as “coming soon.” Use this to answer from internal docs, raise tickets, or draft updates with real source material.

6) Enterprise controls: SSO, RBAC, audit logs, API keys

If you run Le Chat Enterprise, you get the guardrails your IT team expects. SSO (SAML) with common identity providers, role-based access control, audit logs, and API key management are all documented features. That gives you authentication, permissioning, and traceability without building custom wrappers.

7) Deployment and data residency

Mistral positions Le Chat Enterprise as private and customizable. You can deploy in the cloud, in a private VPC, or self-hosted. By default, data is hosted in the European Union, with options to use a US endpoint and documented subprocessors and safeguards where needed. For teams with sovereignty or compliance needs, this is a clear benefit.

8) Research modes and steady feature updates

Le Chat has seen frequent upgrades. A mid-2025 release added a deep research mode plus improvements for multilingual reasoning and image editing, which signals that Mistral is closing gaps with larger rivals. If your work spans discovery, note-taking, and synthesis before writing, this mode is worth testing.

Sovereignty: Mistral’s main selling point

Who controls your data, and under which regulations it is processed, matters more than ever. Mistral positions itself strongly in this domain:

- By default, your organisation’s data (for Le Chat) is hosted in the European Union.

- If you explicitly choose a US endpoint, your data is hosted in the US instead; that option is documented.

- Mistral promotes support for on-premises, private cloud (VPC) or hybrid deployments for its enterprise customers. That means you can deploy inside your own infrastructure or trusted jurisdiction.

- As part of its “AI for Citizens” initiative, Mistral highlights data sovereignty, deployment control, and local infrastructure as core pillars of its offering to governments and regulated industries.

In practice, this helps with:

- Data residency (keeping your content and user data in the appropriate jurisdiction)

- Audit & compliance (it can make GDPR, the EU AI Act, and other regional requirements easier to meet)

- Vendor lock-in (you reduce the risk of being stuck in a single proprietary cloud)

- Trust with stakeholders (who may care where their data is processed)

Mistral Pricing

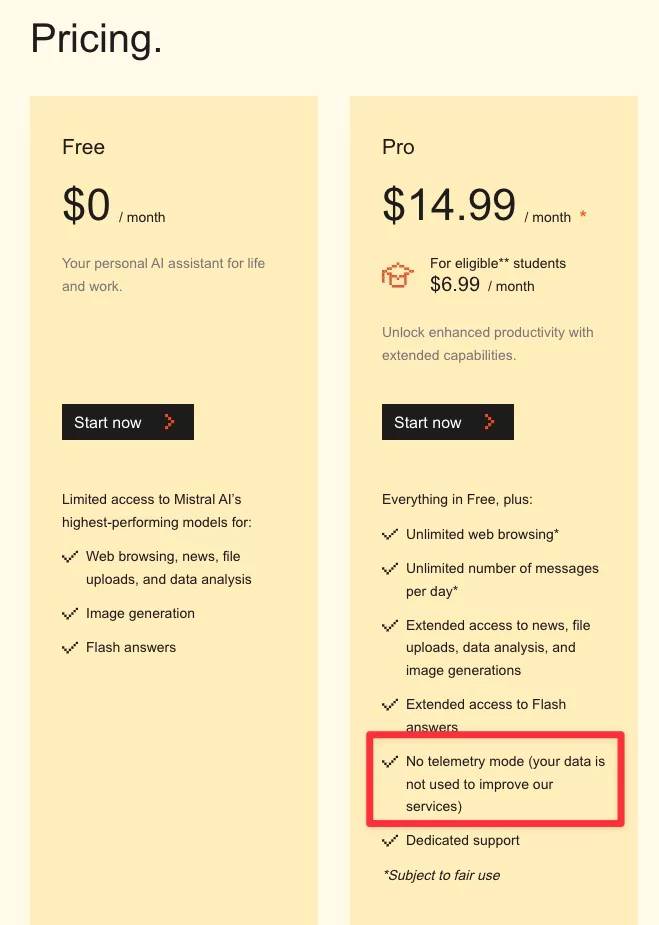

Chatbot (Le Chat) Subscription Pricing

Mistral offers a tiered subscription model for its Le Chat service:

- Free Tier: $0/month. You get the core assistant and, according to Mistral, its “highest-performing models,” which is enough for personal use.

- Pro Tier: $14.99/month. Mistral markets it as unlocking “enhanced productivity” and “agentic capabilities,” along with higher usage limits, file uploads, and more.

- Team Tier: about $24.99/user/month (or about $19.99/user/month billed annually) for collaborative features, integrations, and higher quotas.

- Enterprise Tier: custom pricing. This covers enterprise deployment, dedicated infrastructure, or on-premises variants; you’ll need to contact Mistral for a quote.

Mistral also offers a student discount on the Pro plan (more than 50% off) if you verify an educational email address.

API / Model Usage Pricing

If your workflow involves integrating Mistral’s models via API — for example, automated documentation generation, processing large volumes of content, or embedding them in tools — you’ll want to understand the token-based pricing as well:

- Mistral Medium 3 is priced at roughly $0.40 per 1 million input tokens and $2.00 per 1 million output tokens.

- Other variants, such as Mistral Small and Mistral Large, are priced differently. For example, some sources quote Mistral Large at $2 per 1M input tokens and $6 per 1M output tokens.

- Example: if you send 100,000 input tokens and generate 50,000 output tokens in a session, the cost is (100k / 1M) × $0.40 = $0.04 for input plus (50k / 1M) × $2.00 = $0.10 for output, or $0.14 for that session.
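If you want to estimate costs for your own workloads, the per-session arithmetic above is easy to script. The sketch below is a minimal, hypothetical helper (`session_cost` is not part of any Mistral SDK); the default rates are simply the Medium 3 figures quoted in this article, so check Mistral’s current pricing page before relying on them.

```python
from decimal import Decimal

# Rates in USD per 1M tokens, taken from the figures quoted in this article
# (assumption: they may have changed; verify against Mistral's pricing page).
MEDIUM_3_INPUT_PER_M = Decimal("0.40")
MEDIUM_3_OUTPUT_PER_M = Decimal("2.00")


def session_cost(input_tokens: int, output_tokens: int,
                 input_per_m: Decimal = MEDIUM_3_INPUT_PER_M,
                 output_per_m: Decimal = MEDIUM_3_OUTPUT_PER_M) -> Decimal:
    """Hypothetical helper: (tokens / 1M) x price per 1M, summed for input and output."""
    million = Decimal(1_000_000)
    input_cost = Decimal(input_tokens) / million * input_per_m
    output_cost = Decimal(output_tokens) / million * output_per_m
    return input_cost + output_cost


if __name__ == "__main__":
    # Reproduces the worked example: 100k input tokens + 50k output tokens.
    print(session_cost(100_000, 50_000))
```

`Decimal` is used instead of `float` so that small currency amounts add up without binary rounding noise. Swapping in other rates works the same way: with the $2 / $6 quoted for Mistral Large, `session_cost(100_000, 50_000, Decimal("2"), Decimal("6"))` comes to $0.50.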

My Own Tests & Review with Mistral

In my experience, Mistral isn’t the flashiest or the absolute best model you can get, but it’s a reliable European alternative, with open-weight models in its lineup.

As a writer, I found its style predictable and consistent, which is ideal for tasks like SEO, technical content, or structured documentation. You’ll see sentences that are clear, logically ordered, and concise. Sometimes that can make the output feel “formatted” or “too organized.” But when you’re producing documentation, educational material, or marketing explainers, that’s a feature, not a flaw.

If your goal is fiction or narrative writing, you’ll notice that Mistral’s responses can feel a little rigid. It doesn’t play with tone or rhythm as creatively as Gemini 2.0 or Claude Opus. That is likely because Mistral Medium 3.1 isn’t a creativity-optimized model; it’s built for speed, factual accuracy, and scalability.

When you ask it to reason deeply, for instance to write a nuanced argument or a creative story, it can plateau earlier than larger proprietary models. That’s expected: Mistral Medium isn’t designed to match the reasoning depth of Claude Opus or GPT-4o. But for structured, utilitarian writing, it does the job efficiently and predictably.