Many AI text humanizers have emerged on the market, promising to bypass AI detectors such as Originality.ai, Turnitin, and GPTZero.

But what specific algorithmic strategies do they use, and do they really avoid AI detection? Do they also make your content sound better?

Here, drawing on various academic studies, I examine how effective these humanizers are at evading detection and at producing human-like content.

How AI Humanizers Work: Algorithmic Strategies

AI humanizers operate by altering an AI-generated passage to make it statistically and stylistically resemble human writing.

These changes range from simple to fairly advanced. Most commercial humanizers apply some combination of the following general approaches:

- Rule-based synonym replacement: this approach keeps the sentence structure largely the same but replaces certain words or phrases. For instance, a humanizer might turn “The cat sat on the mat” into “The feline sat on the rug” through synonym substitution.

- ML-based rewriting: modern humanizers have grown more sophisticated and frequently use machine learning (ML) models. Many leverage powerful language models (like GPT-based systems or T5-based models) to rewrite text. These models consider the context of the whole sentence or paragraph and can reorganize sentence structure, not just individual words. Some humanizers even perform back-translation (translating text to another language and back) or use two-step processes (e.g. first swapping synonyms, then adjusting syntax via ML). The overarching goal of all these algorithms is to increase the text’s perplexity and randomness in a controlled way: the text becomes less predictable to AI detectors while still sounding coherent.

- Breaking the AI writing structure: Humanizers can split or merge sentences, add conversational fillers, or reorder information to mimic a human writer’s decisions. For example, an AI might produce: “Albert Einstein, born in 1879 in Ulm, Germany, was a theoretical physicist renowned for developing the theory of relativity…” A humanizer could split and rephrase this into: “Albert Einstein was born in 1879 in Ulm, Germany. He happened to be a theoretical physicist who developed the theory of relativity…”, a style that sounds less like a Wikipedia entry and more like a human narrative.

- Simplifying the style: some tools rewrite text at a much lower reading level. For example, they might turn complex, formal prose into something a grade-school student might write. This can increase “human-likeness” in terms of variability, but it also dilutes the original tone or nuance. In general, purely rule-based humanizers (simple synonym spinners) tend to stay very close to the original text structure. This means they are less likely to break meaning, but they might not fool advanced detectors if the overall phrasing remains recognizable. ML-based humanizers (using large language models), on the other hand, reword more flexibly: they might rearrange or drop some details for the sake of a more natural flow.

- Keeping the meaning: Advanced methods also include constraints to ensure the paraphrased text doesn’t accidentally negate a statement or alter key facts. For example, the humanization algorithm might enforce that if the original text says “Einstein received the Nobel Prize in 1921,” the rewritten text doesn’t inadvertently say “Einstein did not receive the Nobel Prize…” due to a poor word choice. Techniques like part-of-speech matching (replacing a noun with a noun, a verb with a verb) and semantic similarity checks (using sentence encoders to verify the new sentence still matches the original’s meaning) are used to maintain fidelity.
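To make the synonym-replacement and part-of-speech ideas concrete, here is a minimal Python sketch of rule-based substitution guarded by POS matching. The tiny synonym table and the hand-supplied POS tags are hypothetical stand-ins: a real tool would run a tagger, use a much larger thesaurus, and add the semantic-similarity check that this sketch leaves out.

```python
# Hypothetical synonym table keyed by (word, part-of-speech tag).
# Keying on the tag is the "part-of-speech matching" constraint:
# a noun is only ever replaced by a noun, an adjective by an adjective, etc.
SYNONYMS = {
    ("cat", "NOUN"): "feline",
    ("mat", "NOUN"): "rug",
    ("patient", "ADJ"): "forbearing",  # only valid when "patient" is an adjective
}


def replace_synonyms(tagged_tokens):
    """Swap words for synonyms only when the part of speech matches.

    `tagged_tokens` is a list of (word, pos) pairs. The tags are supplied
    by hand here; a real humanizer would get them from a POS tagger.
    """
    output = []
    for word, pos in tagged_tokens:
        replacement = SYNONYMS.get((word.lower(), pos))
        output.append(replacement if replacement else word)
    return " ".join(output)


sentence = [("The", "DET"), ("cat", "NOUN"), ("sat", "VERB"),
            ("on", "ADP"), ("the", "DET"), ("mat", "NOUN")]
print(replace_synonyms(sentence))  # The feline sat on the rug

# Here "patient" is a noun, so the adjective-only synonym is not applied.
clinic = [("The", "DET"), ("patient", "NOUN"), ("waited", "VERB")]
print(replace_synonyms(clinic))  # The patient waited
```

Without the POS key, a context-blind spinner would happily turn the noun “patient” into “forbearing”, which is exactly the kind of error rule-based tools are known for.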

The Different Types of AI Humanizers

Not all AI humanizers are built alike – they range from simple scripts to complex AI-driven systems. Broadly, we can classify humanizers into a few categories based on their approach:

1. Rule-Based Humanizers:

These are the simplest form of humanizers, relying on predefined rules and dictionaries rather than learned language models. Often termed “article spinners” or rewriting tools, they operate by scanning the text and substituting words/phrases with synonyms, or applying basic grammar transformations.

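For example, a rule-based tool might be programmed to apply modifications like the following, which the AI-detection benchmark RAID uses as adversarial attacks (the paraphrase attack, unlike the others, relies on a fine-tuned LLM rather than fixed rules):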

- Alternative Spelling: Use British spelling

- Article Deletion: Delete (‘the’, ‘a’, ‘an’)

- Add Paragraph: Put \n\n between sentences

- Upper-Lower: Swap the case of words

- Zero-Width Space: Insert the zero-width space U+200B every other character

- Whitespace: Add spaces between characters

- Homoglyph: Swap characters for alternatives that look similar, e.g. Latin e → Cyrillic е (U+0435)

- Number: Randomly shuffle digits of numbers

- Misspelling: Insert common misspellings

- Paraphrase: Paraphrase with a fine-tuned LLM

- Synonym: Swap tokens with highly similar candidate tokens
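Several of these attacks (zero-width spaces, homoglyphs, extra spaces) change characters rather than words, so they are cheap for a detector to undo. As a hedged illustration, here is a minimal Python normalizer a detector might run before scoring text. The homoglyph table covers only a few Cyrillic look-alikes and is an illustrative assumption, not a complete confusables list; the sketch also does not undo the "spaces between characters" attack.

```python
import re
import unicodedata

# A few Cyrillic letters that render like Latin ones (illustrative subset only).
HOMOGLYPHS = {
    "\u0430": "a",  # Cyrillic а
    "\u0435": "e",  # Cyrillic е
    "\u043e": "o",  # Cyrillic о
    "\u0440": "p",  # Cyrillic р
    "\u0441": "c",  # Cyrillic с
}

# Zero-width space, zero-width non-joiner, zero-width joiner, byte-order mark.
# Mapping a code point to None makes str.translate delete it.
ZERO_WIDTH = dict.fromkeys(map(ord, "\u200b\u200c\u200d\ufeff"))


def normalize(text):
    """Undo simple character-level attacks before running a detector."""
    text = unicodedata.normalize("NFKC", text)  # fold compatibility characters
    text = text.translate(ZERO_WIDTH)  # drop invisible characters
    text = "".join(HOMOGLYPHS.get(ch, ch) for ch in text)  # map look-alikes back
    return re.sub(r"[ \t]+", " ", text)  # collapse runs of spaces and tabs


attacked = "Th\u0435 c\u200bat s\u200bat  on the m\u0430t"
print(normalize(attacked))  # The cat sat on the mat
```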

Limitations: Pure rule-based humanizers often produce stiff or error-prone results. They don’t understand context, so they might choose the wrong synonym (e.g., replacing the noun “patient” with the adjective “forbearing”) or introduce grammatical errors.

On the upside, because they don’t generate entirely new sentences, they stay anchored to the original text structure, so the core information and flow remain recognizable. This can preserve meaning well, but it also means that detectors analyzing structural similarity or repeated patterns might catch them.

2. Machine Learning-Driven Humanizers:

These humanizers leverage AI models (often large language models) to perform the rewriting. Instead of following hard-coded rules, they have learned how to paraphrase by training on large text corpora. One approach is using sequence-to-sequence models (like a T5 transformer) fine-tuned specifically for paraphrasing. Such a model takes the whole input sentence or paragraph and re-generates it in different words, guided by learned patterns of human text.

For instance, QuillBot – a popular AI paraphrasing tool – uses AI to reformulate sentences while aiming to keep the meaning. ML-driven humanizers can do deeper rewriting: changing sentence structures, splitting or merging sentences, and selecting synonyms based on context (so they’re less likely to choose an inappropriate synonym than rule-based tools).

Some ML tools can also adjust the level of formality or complexity of the text. A study by Google researchers found that a T5-based paraphraser was able to rewrite text in a way that preserved meaning but significantly evaded detection algorithms – a testament to how powerful ML-driven rewriters can be. Many of the “AI humanizer” web services that emerged (e.g., Undetectable.ai, HIX Bypass, StealthWriter, WriteHuman.ai, etc.) are suspected to use large language models under the hood. In fact, some were found to literally be GPT-3.5 or GPT-4 with a special prompt: researchers jailbroke one such tool and it revealed instructions like “respond in a conversational tone” – essentially it was an LLM told to rewrite text more human-like.

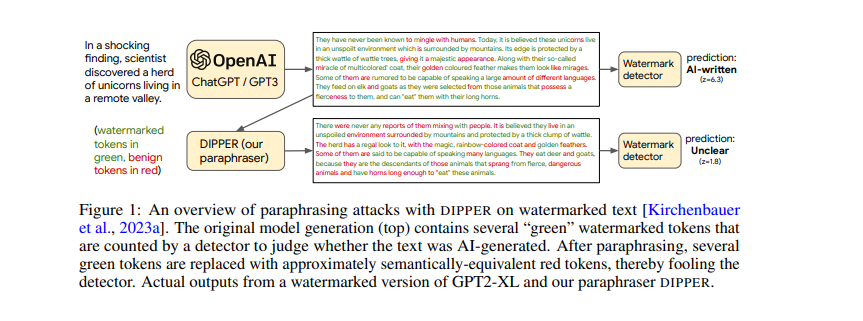

Another ML strategy is discriminator-guided rewriting. For example, an ML humanizer might use an internal AI detector to guide its output, iteratively adjusting the text until the detector judges it as human. Such systems learn to make changes that specifically target the weaknesses of detection algorithms (e.g., raising perplexity and burstiness). A related research tool, DIPPER, is a paraphrase model that rewrites whole paragraphs with controllable lexical and word-order diversity; it is not detector-guided itself, but its authors showed that it evades a range of detectors. The ML-driven category also includes tools integrated into writing assistants, like Grammarly’s rephrasing suggestions or Wordtune, which use AI to offer alternate phrasings. Those aim to improve style and clarity rather than to evade detectors, but they operate on similar principles.

3. Hybrid Humanizers (NLP + ML):

Many practical humanizers combine rule-based tactics with AI generation to get the best of both worlds. A hybrid approach might start with a round of deterministic edits (for example, inserting a few slang terms, or ensuring certain keywords are replaced for SEO reasons), and then use an AI model to smooth the text out. Conversely, some pipelines use an AI model to generate a paraphrase, then apply rules to fix certain issues (like ensuring a specific term remains in the text or adding a particular format).

One common hybrid technique is back-translation, which implicitly uses ML (machine translation algorithms) and then some cleanup rules. For example, you could translate an English AI-generated article into French and then back into English. The final English text will convey the same idea but phrased differently (since translation choices act as a paraphrase). This approach was even used as an adversarial tactic in research – translate AI text to another language and back, to shake off AI-style phrasing.

Hybrid humanizers also include workflows where multiple tools are chained: e.g., using a synonym replacer first, then a grammar checker to fix any broken sentences, and finally a style transfer model to add a human-like tone. By combining methods, hybrid systems try to cover the weaknesses of any single approach. However, they can be more complex and might risk over-correcting (too many edits can accidentally remove substance or introduce new errors).
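The “ensure a specific term remains” rule from the hybrid approach is easy to wrap around any rewriting step. This Python sketch masks protected terms with placeholders, runs a rewriter, checks that every placeholder survived, and restores the terms. `toy_rewriter` is a hypothetical stand-in for an ML paraphraser, and the `__KEEP0__` placeholder format is an assumption, not any specific tool's convention.

```python
def toy_rewriter(text):
    """Hypothetical stand-in for an ML paraphrasing step."""
    return text.replace("helps", "lets").replace("improve", "boost")


def rewrite_preserving(text, protected_terms, rewriter):
    """Mask protected terms, rewrite the rest, then restore the terms."""
    placeholders = {}
    for i, term in enumerate(protected_terms):
        if term in text:
            token = f"__KEEP{i}__"
            placeholders[token] = term
            text = text.replace(term, token)
    rewritten = rewriter(text)
    for token, term in placeholders.items():
        # Rule-based guard: refuse output where the model dropped a keyword.
        if token not in rewritten:
            raise ValueError(f"rewriter dropped protected term: {term!r}")
        rewritten = rewritten.replace(token, term)
    return rewritten


draft = "Local SEO helps small shops improve their search visibility."
print(rewrite_preserving(draft, ["Local SEO", "search visibility"], toy_rewriter))
```

The masking keeps the rewriter from ever seeing the protected phrases, so it cannot swap them for synonyms; the check afterwards catches the case where it deletes them outright.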

Do AI Humanizers Really Bypass AI Detectors?

The main motivation behind humanizers is to bypass AI detectors (like GPTZero, Turnitin’s AI checker, OpenAI’s former Text Classifier, and others). But do they actually succeed?

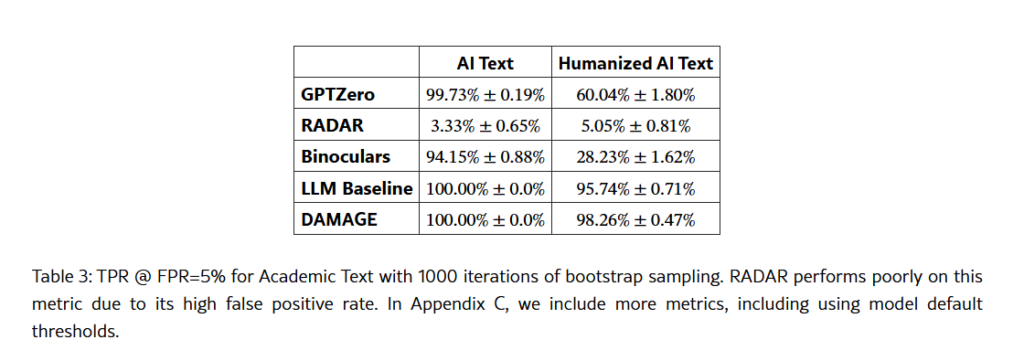

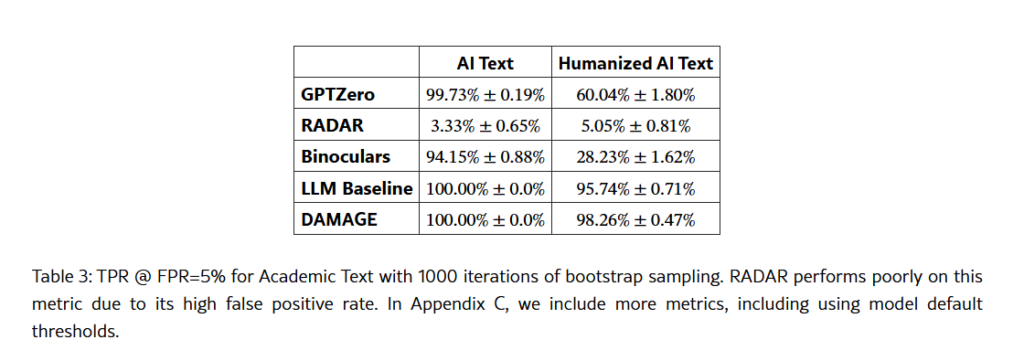

The Pangram Labs 2025 DAMAGE study examined 19 humanizers and confirmed that “many existing AI detectors fail to detect humanized text.” They benchmarked several detectors on AI-generated essays before and after humanization: the true positive rate of detectors like GPTZero and others fell significantly on the “humanized” versions. For example, one robust detector’s accuracy dropped from ~87% on raw AI text to nearly 5% after paraphrasing with a strong humanizer. In practical terms, text that originally triggered an “AI-written” alarm often came back as “likely human” after using a good humanizer.

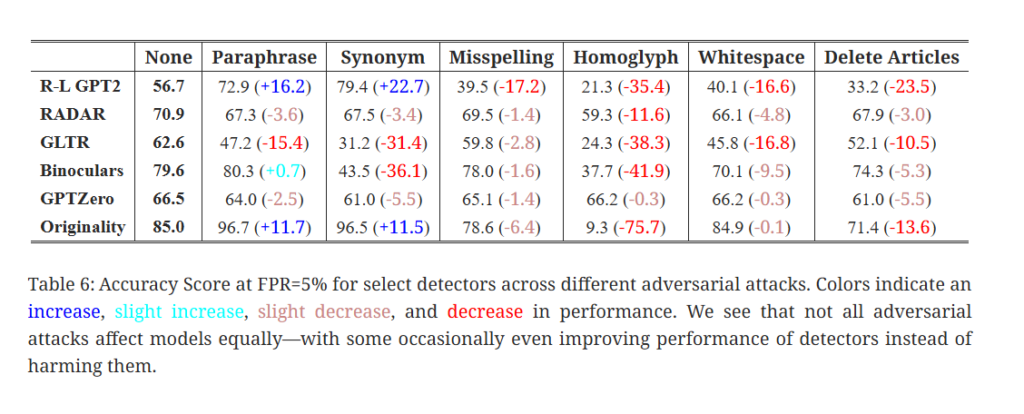

The impact of humanizers varies by detector. GPTZero was noted to “resist simple evasion tricks” like character substitutions, but it is not immune to full rephrasing. When researchers tested Turnitin against paraphrased AI text, they found it susceptible to paraphrasing attacks, losing a substantial amount of accuracy. Originality.ai, by contrast, handles paraphrased AI content especially well but struggled with the homoglyph attack (replacing characters with look-alikes) and zero-width characters (invisible Unicode inserted into text).

But the effectiveness of AI humanizers might change in the future. Newer detection models attempt to look beyond just surface-level word changes.

For instance, some detectors use semantic consistency checks, effectively asking, “Does this text sound like it was written by someone who understood it?” One approach is to feed the text into a large model and have it judge whether the style seems artificial. Another compares two language models, as in the Binoculars method: it divides the text’s perplexity under one model by a cross-perplexity that measures how surprising one model’s next-token predictions are to the other. A low ratio suggests the text is machine-generated, and this signal can persist even when individual words have been changed.
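To make the Binoculars idea more concrete, here is a toy Python sketch roughly following the published formulation: the score is the text's log-perplexity under one model divided by a log cross-perplexity computed from two models, and lower scores point to machine-generated text. Perplexity is the exponential of the average negative log-probability of the observed tokens, so the code works with its logarithm. The three-token vocabulary and probability tables are invented numbers standing in for two real LLMs that share a tokenizer; real implementations differ in details (such as which model plays which role) that this sketch does not try to reproduce.

```python
import math

# Invented next-token distributions over a 3-token vocabulary, one row per
# position. Real scores come from two LLMs run over the same tokenized text.
model_a = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.8, 0.1, 0.1]]
model_b = [[0.6, 0.3, 0.1], [0.5, 0.4, 0.1], [0.7, 0.2, 0.1]]
observed = [0, 1, 0]  # index of the token that actually appears at each position


def log_perplexity(dists, tokens):
    """Average negative log-probability of the observed tokens."""
    return -sum(math.log(d[t]) for d, t in zip(dists, tokens)) / len(tokens)


def log_cross_perplexity(dists_a, dists_b):
    """Average cross-entropy of model B's predictions under model A's distribution."""
    total = 0.0
    for pa, pb in zip(dists_a, dists_b):
        total -= sum(p * math.log(q) for p, q in zip(pa, pb))
    return total / len(dists_a)


def binoculars_score(dists_a, dists_b, tokens):
    """Ratio of log-perplexity to log cross-perplexity; lower suggests AI text."""
    return log_perplexity(dists_a, tokens) / log_cross_perplexity(dists_a, dists_b)


print(round(binoculars_score(model_a, model_b, observed), 3))
```

Normalizing by the cross-perplexity is the key design choice: text on an inherently surprising topic has high perplexity for both models, so the ratio stays informative where raw perplexity alone would not.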

Detectors are also exploring watermarking: OpenAI and others have tested embedding hidden patterns in AI text that detectors can scan for. However, humanization by paraphrasing can destroy these watermarks. In one test, watermarked AI text had a high detection rate (over 87%), but after paraphrasing with DIPPER, watermark detection fell to ~5%. In other words, paraphrasing can break even algorithmic watermarks.
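Watermark detection is easier to picture with numbers. As an illustration only, and not necessarily the scheme OpenAI tested, here is a Python sketch of the detection side of one published scheme, the “green list” watermark of Kirchenbauer et al. (2023). During generation, the previous token seeds a pseudo-random “green” subset of the vocabulary that the model is nudged toward; a detector counts green tokens and computes a z-score. Hashing each (previous, current) token pair and the value γ = 0.25 are simplifying assumptions.

```python
import hashlib
import math

GAMMA = 0.25  # assumed fraction of the vocabulary on each green list


def is_green(prev_token, token, gamma=GAMMA):
    """Pseudo-randomly place `token` on the green list seeded by `prev_token`.

    Hashing the (previous, current) pair approximates the seeded
    vocabulary partition used in the published scheme.
    """
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] < gamma * 256


def z_score(green_count, total, gamma=GAMMA):
    """How far the green count sits above what unwatermarked text would produce."""
    expected = gamma * total
    return (green_count - expected) / math.sqrt(total * gamma * (1 - gamma))


def detect(tokens, gamma=GAMMA):
    """Count green tokens in a token sequence and return the z-score."""
    pairs = list(zip(tokens, tokens[1:]))
    greens = sum(is_green(prev, tok, gamma) for prev, tok in pairs)
    return z_score(greens, len(pairs), gamma)


# Worked numbers: with 200 scored tokens and gamma = 0.25, about 50 are green by chance.
print(round(z_score(120, 200), 2))  # 11.43: heavily watermarked text
print(round(z_score(55, 200), 2))   # 0.82: after paraphrasing replaced most green tokens
```

This shows why paraphrasing hurts: each rewritten token is green only by chance, so the count drifts back toward the γ baseline and the z-score falls below any sensible detection threshold.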

Other defenses look beyond wording to traces. One is retrieval: an LLM provider stores the text its model has generated and checks suspicious text for semantically similar matches, a defense proposed in the same research that introduced DIPPER. DAMAGE, the research detector from the Pangram Labs study, takes a learning approach instead: it remained robust even to unseen humanizers by learning generalizable features of humanized text. It treats humanized AI text as just another category to learn, rather than assuming it looks exactly like human text. Early results are promising: the detector achieved high recall on texts altered by high-quality humanizers while still keeping false positives low.
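Retrieval-based detection can be sketched in a few lines: keep every output the model has generated, then flag new text that closely matches a stored output. In this Python sketch, word-set Jaccard similarity is a crude, assumed stand-in for the semantic embeddings and search index a real system would use, and `GenerationLog` is a hypothetical class, not a real API.

```python
import re


def words(text):
    """Lowercased set of words and numbers, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Share of distinct words two texts have in common."""
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


class GenerationLog:
    """Hypothetical store of every output an LLM provider has generated."""

    def __init__(self):
        self.outputs = []

    def record(self, text):
        self.outputs.append(text)

    def best_match(self, candidate):
        """Return (similarity, stored_text) for the closest stored output."""
        return max(((jaccard(candidate, o), o) for o in self.outputs),
                   default=(0.0, None))


log = GenerationLog()
log.record("Albert Einstein, born in 1879 in Ulm, Germany, was a theoretical physicist.")
log.record("Social media marketing is essential for brand growth.")

# A humanized rewrite still shares almost all of its vocabulary with the original.
humanized = "Albert Einstein was born in 1879 in Ulm, Germany. He was a theoretical physicist."
score, source = log.best_match(humanized)
print(round(score, 2), source)
```

Splitting and reordering sentences barely moves the word set, which is why retrieval holds up against structural rewrites; heavier synonym swaps are what the semantic embeddings of a real system are for.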

This indicates that as humanizers improve, detectors will likely lean more on deep learning (rather than static measures like perplexity) to identify subtle tells. For example, a detector might notice that, even after humanization, the text has just slightly more uniform sentence rhythm than a typical human piece, or that certain odd phrasings (which a human wouldn’t normally use) appear. Some humanized texts might have unnatural collocations (words that don’t usually go together) because the synonym replacement was context-blind – detectors can flag those. In essence, detectors are now looking for second-order AI artifacts: weird quirks introduced by the act of paraphrasing AI content.
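One simple “rhythm” signal is burstiness, the variation in sentence length. As a hedged sketch (not any particular detector's method), the Python below measures it as the coefficient of variation of sentence lengths in words. The naive regex sentence splitter is an assumption and will break on abbreviations like “Dr.”.

```python
import re
import statistics


def sentence_lengths(text):
    """Word counts per sentence, using a naive split on '.', '!' and '?'."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (0 means perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The tool rewrites text. The tool swaps words. The tool adds fillers."
varied = ("It works. Mostly, though, the tool rewrites whole paragraphs "
          "in ways no simple spinner could manage. Why?")
print(round(burstiness(uniform), 2), round(burstiness(varied), 2))  # 0.0 1.04
```

A detector would compare a score like this against typical human ranges rather than use it alone, since many human genres (technical manuals, legal text) are uniform too.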

AI Humanizers’ Impact on Content Quality

Even if a humanizer evades detection, another important question remains: what does it do to the quality of the content?

Content marketers have observed that writing reformulated by LLMs can perform better with audiences: a research report noted that businesses saw an improvement in engagement on their AI-generated content. LLM rewrites often inject a bit of the style or voice that raw AI text lacks, for instance rhetorical questions to engage the reader, or an informal tone where appropriate. All of this can increase user engagement and time spent reading.

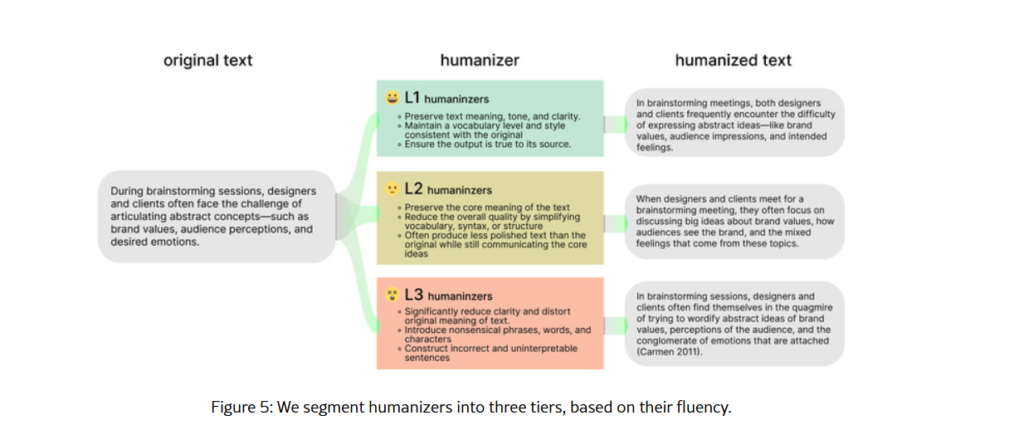

However, these quality gains may not carry over from general LLM rewriting to dedicated AI humanizers. The Pangram Labs audit revealed that all humanizers tend to degrade the quality of text to some extent; the question is just how much. In their fluency test (having GPT-4 judge which of two versions is more fluent and coherent), the original AI text often won out over the humanized text, especially for lower-tier tools. Even the best humanizers only improved fluency in ~26% of cases (meaning that 74% of the time, the original was equal or better).

Here are the specific impacts on readability and coherence:

- Introduction of Nonsense: Lower-quality humanizers sometimes insert nonsensical or irrelevant content into the text. A qualitative review noted common issues like hallucinated academic citations, random in-line comments, or garbled phrases that were not in the original. For example, one tool inexplicably added a fake citation “(Westwood, 2013)” in the middle of a sentence about public affairs. Others appended odd phrases or sequences of question marks (e.g. “(??????)”) in otherwise normal sentences. Such additions don’t contribute meaning and can confuse readers, but they do alter the text enough to throw off some detectors.

- Simplified or Broken Structure: Some humanizers distort sentence structure. A few tools break up complex sentences into a series of short, choppy clauses, making the output read unnaturally. This can hurt the flow of the text. In one evaluation, a “ghost” paraphraser split every sentence into single-clause statements, degrading readability. Conversely, other humanizers leave the sentence structure largely intact and only swap words with synonyms. These synonym-based rewriters maintain coherence better but may still produce awkward phrasing if a replacement word isn’t quite right in context.

- Shifts in Tone or Level: A rewritten passage’s tone and vocabulary level can change depending on the tool. Some humanizers output text in a consistently academic and formal tone (even if the original was casual), while others dumb down the language to an elementary school level. For instance, one audit found certain tools “generally downgrade” a piece from a university-level style to a middle-school level, simplifying vocabulary and sentence complexity. On the other hand, the best humanizers try to mimic the original writing level and tone; when a tool is powered by a large LLM, it often preserves the overall style of the input (unless instructed otherwise). Consistency in voice can be crucial for readability, so an abrupt shift up or down in writing level can make the text feel disjointed or suspicious to a human reader (even if it fools an AI detector).

- Redundancy and Coherence Issues: Redundancy can occur if the humanizer, for example, splits one idea into two sentences that repeat part of the context. This kind of redundancy might make the text less elegant. Coherence can also suffer if the tool isn’t smart enough to maintain logical flow. Cases were observed where a humanizer re-ordered sentences in a way that made the narrative less logical or dropped a necessary transition.

- Factual Consistency: A critical quality aspect is whether the facts stay true. A big risk with automated rewriting is meaning drift: if a humanizer replaces words without full context, it might change a factual detail. An extreme example from a study: the sentence “I like that guy” was adversarially changed to “I hate that guy” through a single word substitution, completely flipping its sentiment.

- Grammar and Fluency: Quality also varies in terms of grammar and fluency. Top-tier humanizers produce fluent, grammatically sound text almost on par with a human edit. Lower-tier ones tend to introduce errors, e.g. missing words, wrong verb tenses, punctuation mistakes, or oddly structured sentences. In a comparative summary, researchers noted that some tools output text with “no typos or grammar errors,” whereas others consistently had at least one grammar or punctuation issue per paragraph. Extreme cases resulted in “uninterpretable” sentences or text that reads like a rough draft full of errors.
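Several of these failures (invented citations, runs of question marks, changed numbers) are mechanical enough to catch by comparing a humanized draft against its source. Here is a hedged Python sketch of such an audit. The regex patterns are assumptions that will miss many cases, and meaning drift like “like” becoming “hate” needs a semantic check that this sketch does not attempt.

```python
import re

# Author-year citations such as "(Westwood, 2013)" or "(Smith et al., 2020)".
CITATION = re.compile(r"\(([A-Z][A-Za-z]+(?: et al\.)?), (\d{4})\)")
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def audit(original, humanized):
    """List problems the humanized text introduced relative to the original."""
    issues = []
    new_citations = set(CITATION.findall(humanized)) - set(CITATION.findall(original))
    for author, year in sorted(new_citations):
        issues.append(f"new citation: ({author}, {year})")
    if re.search(r"\?{2,}", humanized) and not re.search(r"\?{2,}", original):
        issues.append("run of question marks")
    if sorted(NUMBER.findall(original)) != sorted(NUMBER.findall(humanized)):
        issues.append("numbers changed")
    return issues


original = "Einstein received the Nobel Prize in 1921 for the photoelectric effect."
humanized = ("Einstein got the Nobel Prize in 1922 (Westwood, 2013) "
             "for the photoelectric effect (??????).")
for issue in audit(original, humanized):
    print(issue)
```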

Despite these issues, not all humanized text is low-quality. The DAMAGE analysis sorted these tools into tiers (from best, “L1”, to worst, “L3”) based on how faithfully and fluently they rephrase text:

- High-Quality Humanizers (Tier L1): These are often LLM-based rewriters that make minimal necessary changes and preserve the original meaning and style well. They might restructure a sentence or swap in synonyms, but they do so judiciously. For instance, QuillBot (a popular paraphrasing tool) was found to produce “flowery text” that is still fluent and readable, with a high-level vocabulary that stays mostly accurate (only slight imprecision). Another tool, HumanizeAI.pro, was noted for “good grammar, advanced vocabulary, and good punctuation”, essentially outputting high-quality text that reads naturally.

- Moderate-Quality Humanizers (Tier L2): These produce text that conveys the same message as the original and usually avoids blatant errors, but they may simplify phrasing or alter the writing level. They often rely on straightforward synonym replacement or rule-based changes. For example, AIHumanizer.com was reported to “lower the vocabulary level” of text from college-level to something like middle-school, and it introduced some punctuation mistakes. Another tool, BypassGPT, essentially swapped words with dictionary equivalents; it made no obvious typos and kept each sentence’s meaning intact, but occasionally the chosen synonyms were slightly off or awkward in context.

- Low-Quality Humanizers (Tier L3): Tools in this category often harm the text’s clarity. They might break sentences in weird ways, insert irrelevant bits, or have poor grammar. An example is Undetectable AI – despite its name, the audit found it produced “poorly written text at an elementary school level”, even introducing typos in the process. Likewise, WriteHuman.ai’s output was described as elementary-level writing with occasional incomplete sentences. At the very bottom, some humanizers (e.g. Humbot AI) made sentences “uninterpretable” with random additions that rendered the text nearly unreadable.

Surprisingly, in my own ranking, the lowest-quality humanizers are also the ones that bypass AI detectors most effectively, and conversely, the highest-quality humanizers are the least likely to bypass them. That leaves us with a dilemma!

Do Humanizers Improve SEO?

From an SEO (Search Engine Optimization) perspective, humanizers can help by avoiding duplicate content detection and maybe improving readability (which can impact user experience metrics). Since Google has stated it doesn’t outright ban AI-generated text but focuses on content quality, a humanized article that reads more naturally and provides value could rank better than a raw AI article that feels spammy.

Humanizers often aim to eliminate the “robotic” tone that might turn off readers or search quality raters. Additionally, they might introduce synonyms for keywords that can avoid keyword stuffing issues and diversify the vocabulary, potentially capturing long-tail keywords.

However, there are risks: if the humanizer output is low quality (rambling or incoherent), it could hurt SEO – high bounce rates and poor user engagement signals can result if readers find the text awkward or unhelpful.

Humanization by itself cannot add real expertise or experience; it only changes wording. So while it might help content slip past AI-content filters, it doesn’t guarantee the content will meet Google’s quality standards.

How to Truly Humanize AI-Generated Content

As you can see, we face a dilemma: a high-quality humanizer keeps your content readable but is less likely to bypass AI detectors, while a low-quality humanizer is more likely to avoid detection at the cost of readability and style.

So how do you humanize content for both detectors and readers?

Here’s my structured approach to producing human-like AI content:

1. Choose the Right AI Humanizer

Not all AI humanizers are created equal. Some are better at producing natural-sounding text, while others focus on bypassing AI detectors. If your goal is to simply improve readability and remove robotic phrasing, tools like QuillBot or Wordtune are solid choices. However, these rephrase text without necessarily changing its structure enough to evade AI detection.

If bypassing AI detectors is your priority, you’ll need tools specifically designed for this purpose. Some of the most effective AI humanizers I found for bypassing detection include:

- Undetectable AI

- StealthWriter

- Twifly AI

These tools alter sentence structure, word order, and even paragraph composition to avoid common detection patterns. However, they degrade the quality of your output, so they shouldn’t be the last step in your writing process.

2. Use Step-by-Step Detailed Prompts

AI models default to a neutral and predictable writing style. To counter this, you need to steer your AI model toward your unique writing style by giving it precise, structured instructions.

Instead of using a vague prompt like:

“Write an article about digital marketing.”

Use a structured, detailed prompt like this:

“Whenever you write a text, strictly follow these guidelines:

- Use an engaging and conversational tone with varied sentence structures.

- Avoid common AI-generated phrases and clichés.

- Introduce occasional rhetorical questions and informal transitions.

- Use first-person insights where appropriate.

- [any other guideline]”

Even better, teach the AI your writing style by feeding it samples. Try this:

“Here’s a writing sample to determine my style. Only reply ‘ok’ if the extract is clear. Here’s the extract: [Insert writing sample].”

This pushes the AI to adapt to your tone, phrasing, and sentence structure, making the output feel more natural and original.
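If you work with an LLM through an API rather than a chat window, the two prompts above can be packaged as a reusable message list. This Python sketch only builds the messages and makes no API call. The role/content dictionary layout follows the common chat-completion convention; the function name, sending the guidelines as a system message, and pre-filling the model's “ok” reply are my assumptions, not a specific vendor's requirement.

```python
GUIDELINES = [
    "Use an engaging and conversational tone with varied sentence structures.",
    "Avoid common AI-generated phrases and clichés.",
    "Introduce occasional rhetorical questions and informal transitions.",
    "Use first-person insights where appropriate.",
]


def build_messages(writing_sample, task, guidelines=GUIDELINES):
    """Assemble a style-guided conversation: rules, a style sample, then the task."""
    rules = "\n".join(f"- {g}" for g in guidelines)
    return [
        {"role": "system",
         "content": f"Whenever you write a text, strictly follow these guidelines:\n{rules}"},
        {"role": "user",
         "content": "Here's a writing sample to determine my style. Only reply 'ok' "
                    f"if the extract is clear. Here's the extract: {writing_sample}"},
        # Pre-filled acknowledgment so the real request comes in a fresh turn.
        {"role": "assistant", "content": "ok"},
        {"role": "user", "content": task},
    ]


messages = build_messages("I test every tool myself before recommending it.",
                          "Write an article about digital marketing.")
for m in messages:
    print(m["role"], "->", m["content"][:60])
```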

3. Infuse Your Own Ideas and Knowledge

AI-generated content feels generic because it pulls from widely available internet sources. The best way to make your content unique and original is to insert your own knowledge, ideas, and personal experiences.

So feed your LLM your own outline or ideas first.

Try prompting AI like this:

“Generate an outline for an article about [insert topic] using these key ideas: [Insert your main ideas or bullet points].”

This method ensures AI content is structured around your specific insights, so it’s less predictable and adds more information per token.

4. Humanize & Edit Manually

Even the best AI humanizers can’t fully replicate human intuition. Manual editing remains essential for fine-tuning AI-generated text.

- Read the text aloud – If it sounds unnatural when spoken, it needs revising.

- Fix awkward transitions – AI struggles with smooth flow between ideas.

- Add personal voice – First-person statements, humor, or rhetorical questions improve authenticity.

- Diversify sentence structure and formatting: AI models tend to produce consistently medium-length sentences. To disrupt this pattern, mix short, punchy sentences with longer, detailed ones. Also alternate between bullet points, subsections, and well-spaced paragraphs.

- Remove AI-specific repetitions and redundancies: to make AI-generated content feel more human, get straight to the point. Cut the closing sections that merely summarize the preceding points, and minimize sentences that reiterate the same ideas over and over.

- Get rid of “bullshit” expressions: AI models frequently overuse predictable phrases. These stock expressions are red flags for AI detectors and make content feel robotic. Some AI-generated clichés to avoid: “In today’s world…”, “In a time where…”, “Fast-paced environment”, “Delve into”, “Not only this, but also that”, “It does X, Y, but also Z” (tricolons).

Example: AI-generated: “Social media marketing is essential for brand growth because it helps companies reach a larger audience and drive more engagement.”

Humanized: “Social media marketing matters. It helps brands connect, engage, and grow. The best part? You reach a massive audience effortlessly.”
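The cliché hunt can be partly automated. This Python sketch scans a draft for the stock phrases listed above and reports where each occurs. The phrase list is only a starting point you would extend, and a match is a prompt to revise, not proof that the text reads as AI-written.

```python
import re

CLICHES = ["in today's world", "in a time where", "fast-paced environment",
           "delve into", "not only"]


def find_cliches(text, cliches=CLICHES):
    """Return (phrase, character offset) for every cliché found, in text order."""
    text = text.replace("\u2019", "'")  # treat curly apostrophes like straight ones
    hits = []
    for phrase in cliches:
        pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        hits.extend((phrase, m.start()) for m in pattern.finditer(text))
    return sorted(hits, key=lambda hit: hit[1])


draft = ("In today's world, brands must delve into data. "
         "Not only that, they face a fast-paced environment.")
for phrase, offset in find_cliches(draft):
    print(f"{offset:>4}  {phrase}")
```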

So there it is: with these small, deliberate changes, you’ll make your writing feel natural and less mechanical, and also bypass AI detectors!