ChatGPT, Gemini, Claude — these three names dominate every conversation about AI writing today.

But that raises the question: which one should you actually use?

Here we’ll compare ChatGPT (GPT-5), Gemini 2.5 Pro, and Claude Opus 4.1 based on their specifications and actual output quality (through my own tests & samples).

| Model | Strengths | Weaknesses | Best For | Pricing (API) |
|---|---|---|---|---|
| ChatGPT (GPT-5) | • Strong structure & outlines • Long outputs (up to 128K) • Rich workspace: files, research, canvas, voice | • Creativity can feel flat • Context smaller than Gemini (400K) | Structured essays, reports, SEO drafts where sectioning matters | $1.25 input / $10 output (per 1M tokens) |
| Gemini 2.5 Pro | • Huge context window (1M) • Detailed, step-by-step outputs • Strong multimodality (text, image, video) | • Canvas/editor less polished • Output length shorter than GPT-5 | SEO, research-heavy tasks, digesting large PDFs/briefs | Pricing varies by channel (AI Studio/Vertex) |
| Claude Opus 4.1 | • Natural, lyrical prose • Style/clarity controls in UI • Great for creative writing | • Smaller context (200K) • Fewer UX features • Premium price point | Fiction, poetry, narrative writing, tone-sensitive drafts | $15 input / $75 output (per 1M tokens) |
| Verdict: Use Gemini for big documents, Claude for style, and GPT-5 for structured nonfiction. | | | | |

Technical Specs Comparison

Judged on technical capabilities alone, the models differ in some big ways:

Context window (how much research you can stuff in)

- Gemini 2.5 Pro — ~1,000,000 tokens. Google confirms a 1M context window today, with “2M coming soon.” If you write from giant briefs, PDF packs, or story bibles, this is the standout.

- ChatGPT (GPT-5) — 400,000 tokens. Big enough for most non-fiction packets and brand guides, but less than half of Gemini’s ceiling.

- Claude Opus 4.1 — 200,000 tokens. Fine for lean workflows; Anthropic pushed Sonnet 4 to 1M, but Opus 4.1’s published spec is 200K. If you’re routinely past 200K, validate with your own prompts.
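To make these ceilings concrete, here is a minimal sketch that checks which models could hold a given brief. The window sizes are the ones quoted above; `estimate_tokens`, `models_that_fit`, and the 4-characters-per-token ratio are my own assumptions for illustration, not anything the vendors publish, and a real tokenizer will give different counts.

```python
import math

# Context windows in tokens, as quoted in this article.
CONTEXT_WINDOWS = {
    "Gemini 2.5 Pro": 1_000_000,
    "ChatGPT (GPT-5)": 400_000,
    "Claude Opus 4.1": 200_000,
}


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate. The 4-characters-per-token ratio is an
    assumption for English prose, not a real tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def models_that_fit(prompt_tokens: int, reserve_for_output: int = 0) -> list[str]:
    """Return the models whose window holds the prompt plus a reserve.

    reserve_for_output is a hypothetical safety margin for the reply."""
    needed = prompt_tokens + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    brief = "word " * 300_000  # hypothetical 1.5M-character research pack
    tokens = estimate_tokens(brief)
    print(tokens, models_that_fit(tokens, reserve_for_output=20_000))
```

With this rough estimate, a 1.5M-character pack comes out around 375K tokens: it fits Gemini and GPT-5 but is well past Claude Opus 4.1's 200K window. Treat the result as a first filter and confirm with the provider's own token counter before committing to a workflow.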

Maximum output (how long a single draft can be)

- GPT-5 — up to ~128K output tokens per completion. Handy for long, sectioned articles, whitepapers, or book chapter passes without chunking.

- Gemini and Claude don’t publish a larger one-shot output limit than that today; in practice you’ll iterate or chain prompts for book-length work.

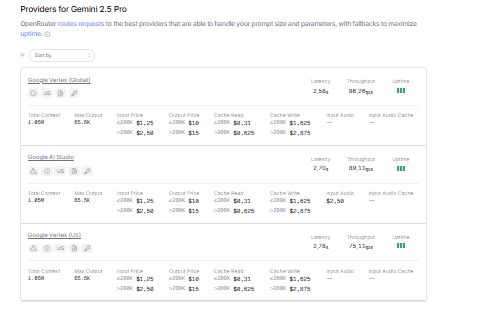

Price (API, per 1M tokens)

- GPT-5: $1.25 in / $10 out. Strong if your prompts are long (cheap input).

- Gemini 2.5 Pro: the same $1.25 in / $10 out as GPT-5.

- Claude Opus 4.1: $15 in / $75 out. Premium, but predictable.
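To see what these rates mean for a single job, here is a small sketch of the arithmetic. The prices are the ones listed above; the `request_cost` helper and the 50,000-in / 5,000-out example job are hypothetical, and real bills can differ (tiered pricing, caching, or reasoning tokens are not modeled here).

```python
# USD per 1M tokens as (input, output), as quoted in this article.
PRICES = {
    "GPT-5": (1.25, 10.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Claude Opus 4.1": (15.00, 75.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request at the list prices above."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical job: a 50,000-token brief producing a 5,000-token draft.
    for model in PRICES:
        print(f"{model}: ${request_cost(model, 50_000, 5_000):.4f}")
```

For that example job, GPT-5 and Gemini land at about $0.11 and Claude Opus 4.1 at about $1.13, roughly ten times more. The gap is widest on input-heavy work, since Opus input costs 12x as much while its output costs 7.5x as much.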

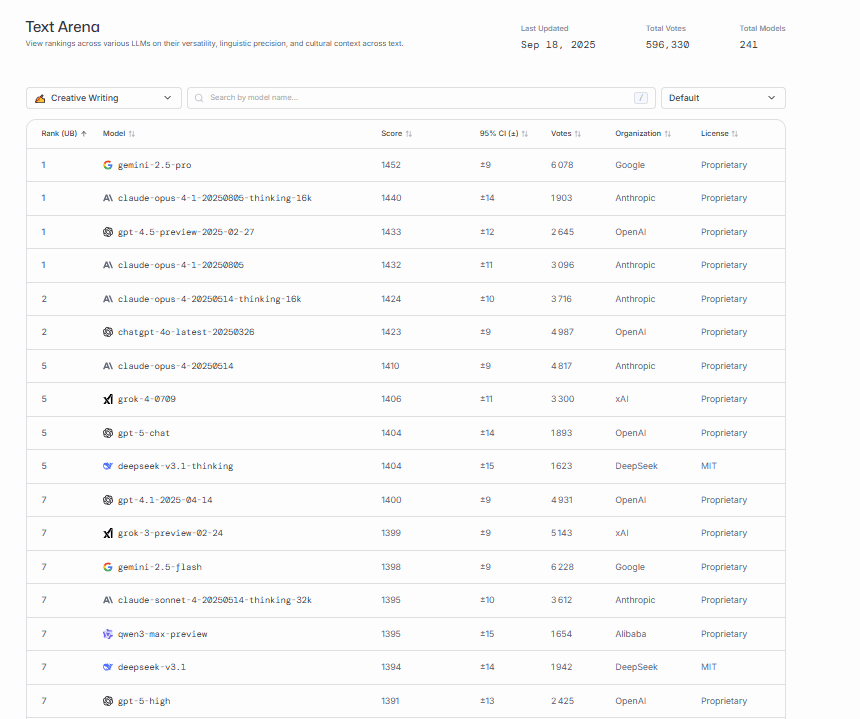

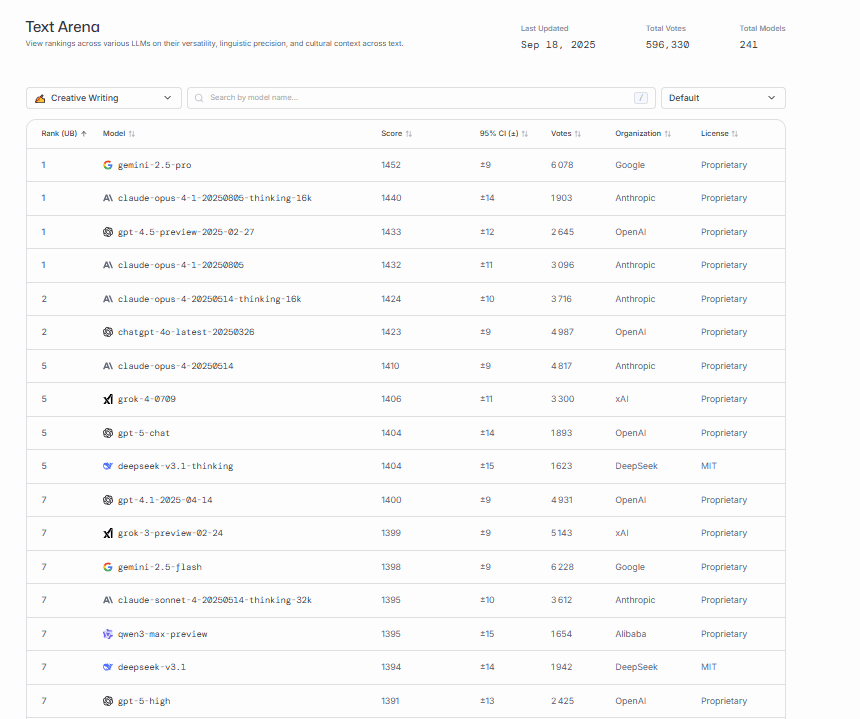

Leaderboard Comparison

Here’s how the models currently rank on the Chatbot Arena leaderboard:

The scoreboard (as of late September 2025)

- Gemini 2.5 Pro sits at the top of the Text Arena. It also ranks #1 in the creative writing category. It is definitely a top-tier writer with strong reasoning.

- Claude Opus 4.1 clusters with Gemini at the top in the creative writing ranking.

- GPT-5 ranks high on the Text Arena list but lower on the creative writing ranking. On that list, it actually ranks below OpenAI's earlier models: o3, 4o, and 4.5.

Why GPT-5 can “feel” less stylish

This gap between raw intelligence and style comes from the way GPT-5 was trained. It leaned heavily on feedback from expert editors and reviewers. The upside: it learned what polished, “good” writing looks like. The downside: sometimes it overshoots. Instead of natural flow, you get text that feels too artistic or overly formal.

Output Quality Comparison

Here’s how I experienced each model when I tested them side by side, writing task by writing task.

SEO and marketing copy

When I asked for an SEO-style article with a step-by-step method (coffee roasting), Gemini 2.5 Pro stood out immediately. The draft was concrete, well-structured, and practical. It gave me usable steps in a way that felt more like a proper how-to guide than filler text.

GPT-5 did okay, but I had mixed feelings. Sometimes it reasons through the task and produces sharp outlines; other times it skips that reasoning and the result feels flatter. In my test it didn’t reason, so the text lacked depth.

Claude Opus 4.1 was clear but a bit thinner. It didn’t give me as much detail as Gemini, so while readable, it wasn’t the one I’d pick for SEO-heavy content.

Fiction and short stories

For a first-person magical-realism short story, my favorite was Gemini 2.5 Pro. Its opening felt alive. It used symbolism I hadn’t expected, like turning pollution into ghostly butterflies, and that image stuck with me.

GPT-5 leaned into a mysterious sound motif. That part was intriguing, but the piece left me more confused than satisfied. I couldn’t tell if the vagueness was intentional or just a side effect of how it generates.

Claude Opus 4.1 took a different approach, building the story around “water memory.” It was coherent and more grounded than GPT-5’s draft. Still, for me it didn’t have the same spark as Gemini’s scene.

Poetry (the sonnet test)

When I asked for a Shakespearean sonnet on digital memory and human forgetting, Claude was the clear winner in my eyes. The rhyme scheme was solid, the rhythm worked, and the closing couplet landed hard: “To truly live must forget our pain.” That line felt crafted.

Gemini’s version was decent but didn’t hit me as strongly. GPT-5’s was technically fine, but sometimes the phrasing leaned too ornate, almost trying too hard.

Essays and nonfiction

For an argumentative essay on universal basic income, GPT-5 pulled ahead. It gave me the best structure—subsections, transitions, headings. It’s the kind of draft I could quickly edit into a clean article.

Gemini had depth. It reasoned through different angles well, but it didn’t give me the same clean structure that GPT-5 offered.

Claude was balanced and clear but less organized. It read smoothly, though I’d need to add headings and a stronger outline myself.

Feature Comparison

The chatbot interface and features also matter. Here’s how they compare:

ChatGPT (GPT-5): the most complete writing workspace

- Files in, almost anything. You can drop long PDFs, images, folders, even zipped packs. It parses a wide range of formats without fuss.

- Research that feels built-in. The Research/Browse flow is detailed and practical. For small tasks and source checks, you get crisp, usable pulls.

- Canvas that actually helps. Inline suggestions, reading-level controls, quick emoji/format buttons, and “apply this edit” actions cut friction. You write, click, move on.

- Images + edits. Image generation and in-place editing are consistent. Not always “best-in-class” anymore, but still reliable.

- Voice & live. Speak to it, or let it read back. Great when you want to hear cadence.

- Connectors. Plenty of integrations for custom flows. Good for teams.

Gemini 2.5 Pro: long-context uploads, strong multimodality, solid research

- Huge inputs, fewer headaches. The 1M context shows in daily use: you can pour in long PDFs, mixed notes, and screenshots and still ask nuanced questions.

- Research that keeps up. Results are structured and concrete. On guides and how-to tasks, it felt especially strong.

- Images + video. You can generate media and use Picture mode; handy when your idea needs a visual seed.

- Canvas: fine, not best. You get an editor and quick tweaks, but it’s less polished than ChatGPT’s canvas.

- Voice input. Works, though the overall writing loop still leans on typing.

- Format caveats. You can’t upload every file type I tried; a few formats were picky.

Claude Opus 4.1: clean editor, fewer features, better style control

- Editor that stays out of your way. Simple, focused. You write without distractions.

- Style knobs in the UI. You can adjust tone/clarity directly without re-prompting a meta style guide. That’s unique and useful.

- Web + research added. It’s there now, but not as rich as ChatGPT’s flow.

- Usage limits. You can hit caps quicker than on the others, especially in the consumer chat. This pushes you toward API or enterprise deployments.

- API-first feeling. Great for teams plugging Claude into a stack; lighter for solo chat-only work.

- Net impact: If you value voice feel and want fast style adjustments, it’s lovely. For heavy uploads or long, tool-rich sessions, it can feel constrained.

My Honest Opinion

- Gemini is the best if your workflow begins with huge inputs. Its million-token context window makes it ideal for SEO projects, research-heavy briefs, or long source documents. It delivers detail and procedural clarity that stand out in practical writing.

- Claude Opus shines when voice and cadence matter most. For fiction, poetry, or lyrical openings, its prose feels natural and human. Style adjustments built into the interface also make it easy to nudge tone without rewriting prompts.

- ChatGPT gives you the strongest structure and workspace. Its long single-output capacity and rich toolset (files, research, canvas editing) make it the most efficient for essays, reports, and other structured nonfiction.

Based on this comparison, test them out for yourself and make your choice accordingly!