Want to turn your messy notes into clean, defensible pages without risking bad citations or policy trouble?

That starts with better prompts, not bigger drafts.

This guide gives you ready-to-copy prompts for every step: scoping a question, mapping arguments, drafting sections,…

P.S.: Use the reasoning, search, or even “research” mode in ChatGPT to make the most of the prompts below.

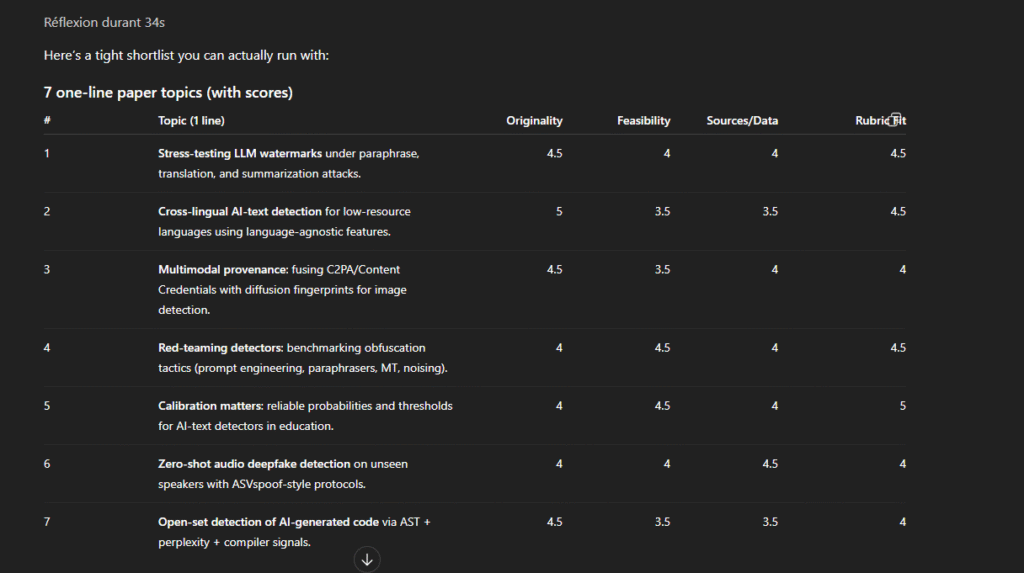

Prompts for ideation and scoping

You get better papers when your ideas are feasible and aligned with a real question. Use these prompts to narrow a broad theme to a researchable scope you can defend.

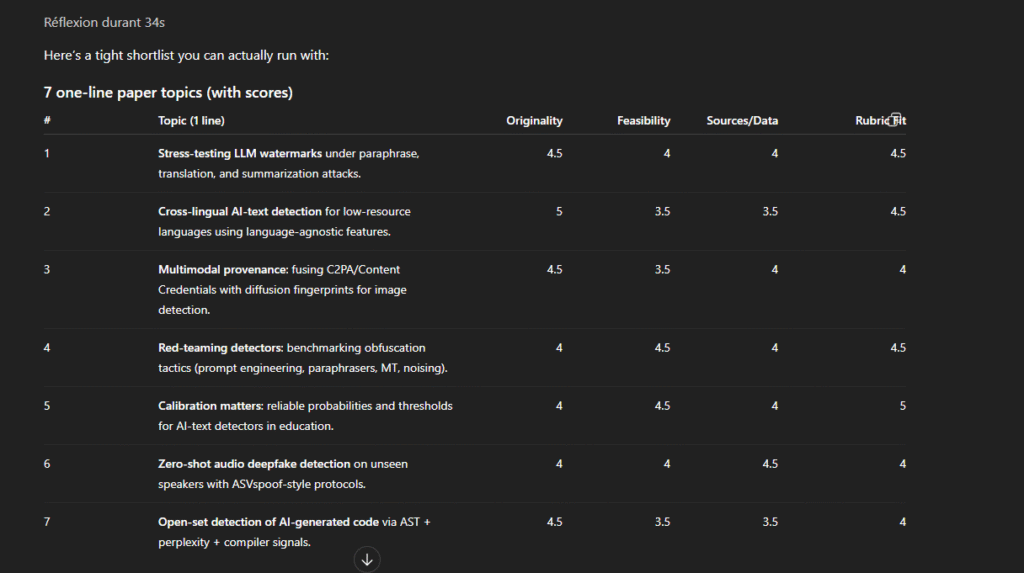

Prompt 1 — Topic funnel with feasibility scores

Copy-paste:

“Act as my academic topic funnel. Course/field: [discipline]. Theme: [broad area]. Constraints: deadline [date], length [word count], methods I can use [e.g., textual analysis, secondary data, small survey].

- Ask 5 clarifying questions about audience, aim, and access to sources.

- Propose 7 paper topics (1 line each).

- Score each (0–5) on originality, feasibility, source availability, marking-rubric fit.

- For the best 2, draft one focused research question and list key sources or datasets I should check first.

- Warn me about scope risks and deal-breakers.”

Prompt 2 — Debate mapper that reveals publishable angles

Copy-paste:

“Be my debate mapper for [concept/relationship] in [field]. Using only arguments I paste or well-known frameworks (no invented sources):

- List 3–4 active debates with opposing claims in one crisp line each.

- For each debate, suggest 2 researchable angles suitable for a [short paper / term paper / review].

- For each angle, propose: likely sections, types of sources needed, and one risk (bias, method limits, data gaps).

- Finish with three research questions written to a specific population/context/timeframe.”

Prompt 3 — Scope calibrator and research question refiner

Copy-paste:

“Act as my scope calibrator. Draft idea: [1–2 sentences].

- Diagnose if it’s too broad, just right, or too narrow, and say why.

- Rewrite at three zoom levels: broad, focused, micro (1 line each).

- Produce two research questions per level: one exploratory, one argumentative.

- Provide a feasibility checklist (10 items): access to sources, ethics, analysis fit, time.

- Recommend first five sources to test the question (type + where to find).”

Prompts for your claim and arguments

Use these prompts to define a defensible claim and pre-empt reviewer objections.

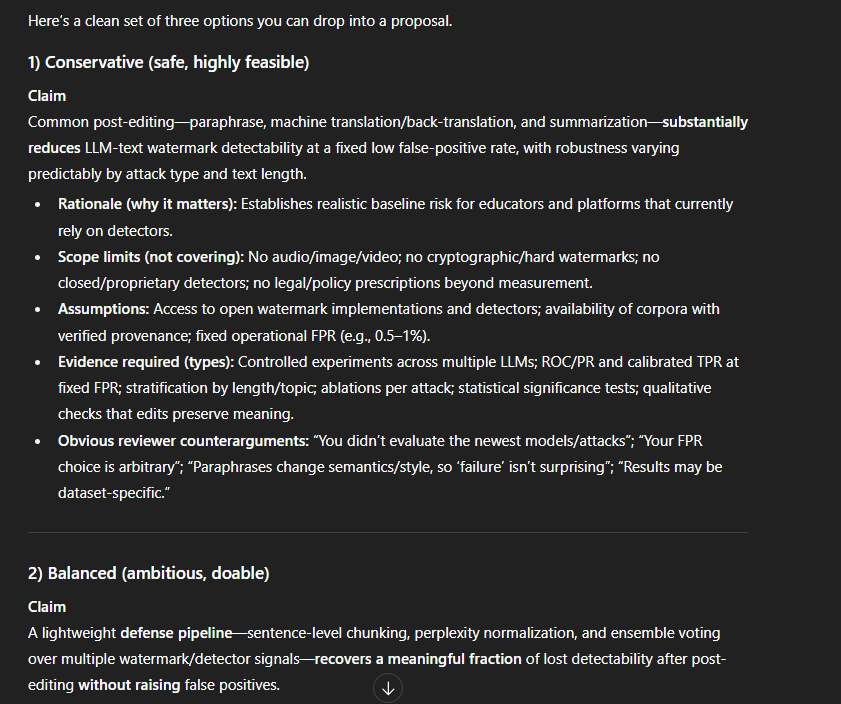

Prompt 1 — Claim triad with trade-offs

Copy-paste:

“Act as my claim shaper for [topic/field]. Based on [paste notes, constraints, rubric], produce three distinct claims:

- Conservative (safe, highly feasible),

- Balanced (ambitious, doable),

- Bold (novel, higher risk).

For each, provide:

- One-sentence rationale (why it matters),

- Scope limits (what I will not cover),

- Assumptions I’m making,

- Evidence required (types, not titles),

- Obvious counterarguments a reviewer will raise.

Then rank options on feasibility, originality, evidence availability, and fit with my deadline (0–5). Finish with your recommended pick and a one-line elevator pitch.”

Prompt 2 — Argument map from claim to evidence

Copy-paste:

“Be my argument mapper. Take this claim [paste] and build a one-page map:

- Main claim (1 line),

- 3–5 supporting claims (≤12 words each),

- For each supporting claim:

  - Evidence needed (data, texts, cases, examples),

  - Method to obtain or analyze it,

  - Credibility checks (validity/reliability or trustworthiness),

  - Counterarguments and rebuttals,

  - Status (existing / to collect / risky).

Add a logic check to flag leaps, circularity, or claims with no evidence. End with a research to-do list ordered by dependency.”

Prompt 3 — Objection rehearsal and precision upgrade

Copy-paste:

“Act as a skeptical reviewer. Here is my claim and argument map [paste].

- Write five sharp objections likely from a reviewer.

- For each, propose a tight revision to claim or support.

- Rewrite the claim in two improved versions:

  - Falsifiable (clearly testable),

  - Scope-controlled (population, context, timeframe).

- Create a definition box for key terms (≤20 words each) and flag ambiguous language.

- Suggest a title + subtitle and a 120-word abstract aligned with the refined claim.”

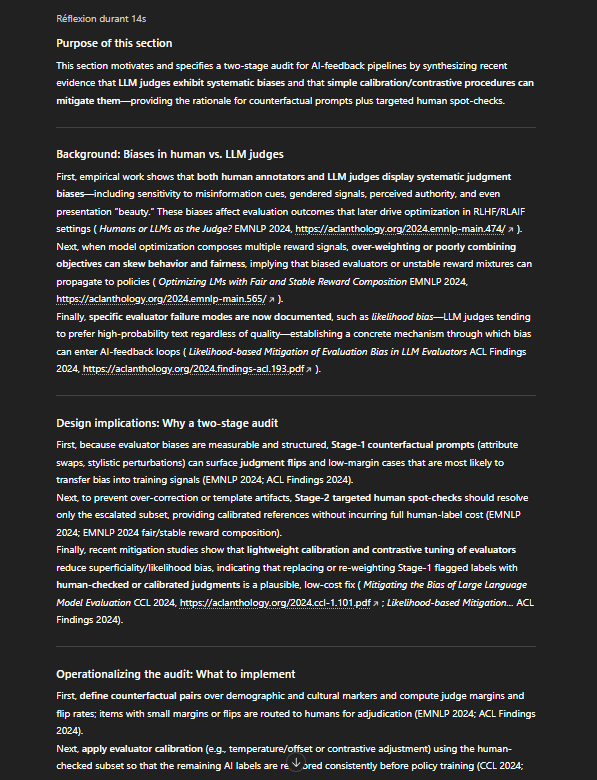

Prompts for literature review

Use these prompts to find the right papers and turn the results into arguments you can cite confidently.

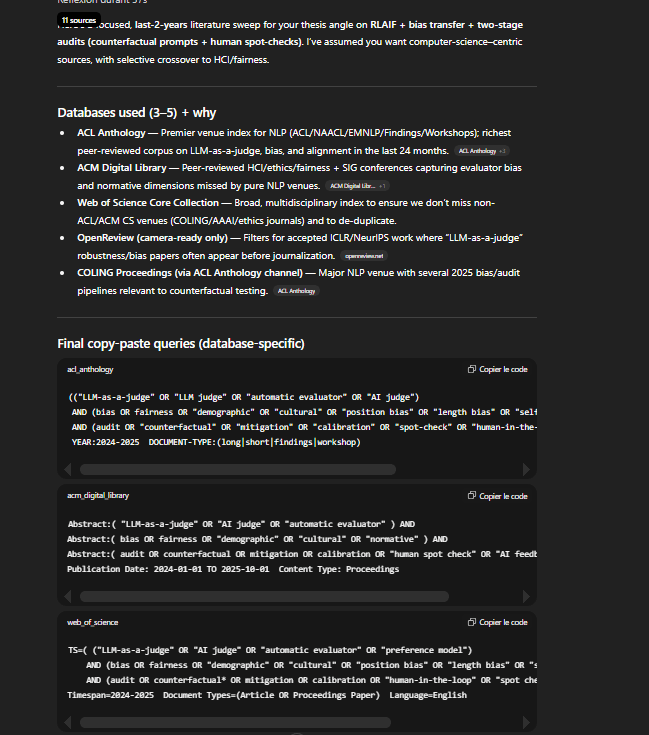

Prompt 1 — Literature finder

Copy-paste:

“Act as an autonomous literature searcher for [TOPIC] covering the last [X] years in [LANGUAGE(S)].

Do live searches across the most relevant scholarly sources (e.g., PubMed/MEDLINE, Embase/Scopus/Web of Science, IEEE/ACM, PsycINFO/CINAHL as appropriate). Build and run database-specific Boolean queries with synonyms + controlled vocab + proximity/truncation. Apply filters (year, document type, humans/setting).

Output exactly:

- 3–5 databases used + one-line rationale each.

- Final copy-paste queries (one code block per database).

- Top 20 peer-reviewed results: table with Title | Year | Journal | DOI/URL | Study Type | Population/Context | Key Finding | Why included.

- Brief inclusion/exclusion rules.

- One-paragraph search protocol I can follow.

- Backup sources (grey literature/preprints) + when to use.

If inputs are missing, make sensible assumptions and proceed. Do not ask me questions.”
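If you want to sanity-check the queries the model returns, it helps to see how a basic Boolean query is assembled: synonyms joined with OR inside each concept, concepts joined with AND. The sketch below is a minimal illustration only. The function name `build_boolean_query` and the example topic are hypothetical, and real databases add their own field tags and controlled vocabulary on top of this.

```python
def build_boolean_query(concept_groups):
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    blocks = []
    for synonyms in concept_groups:
        # Quote multi-word phrases so they are searched as exact phrases.
        terms = [f'"{t}"' if " " in t else t for t in synonyms]
        blocks.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(blocks)


# Hypothetical example topic (not from the guide): remote work and burnout.
query = build_boolean_query([
    ["remote work", "telework*", "telecommut*"],
    ["burnout", "emotional exhaustion"],
])
print(query)
```

Compare the structure of this output with the database-specific queries you get back; if a concept group is missing or joined with the wrong operator, ask for a corrected query.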

Prompt 2 — PRISMA-style screening with quality flags

Copy-paste:

“Be my screening assistant. I’ll paste titles/abstracts from my export.

- Screen each entry against my rules: label Include, Exclude (reason), or Unclear.

- Build a study log with fields: Citation, Design, Sample/Context, Key variables/themes, Main finding (≤15 words), Quality notes (bias/threats or qualitative credibility), Use in paper (support/contrast/gap).

- Produce a PRISMA-style tally: identified → screened → included, plus top exclusion reasons.

- Output a to-retrieve list (full texts) and a cleanup list (missing DOIs/pages).

- Suggest cluster tags (themes/methods) I can use to organize my review section.”
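The PRISMA-style tally is easy to recompute yourself, which is a useful check on the numbers the model reports. Here is a minimal sketch, assuming you have copied the screening labels into a list of (label, reason) pairs. The function name `prisma_tally` and the sample decisions are made up for illustration.

```python
from collections import Counter


def prisma_tally(decisions):
    """Count screening labels from (label, reason) pairs.

    label is "Include", "Exclude", or "Unclear"; reason is only
    meaningful for "Exclude" rows.
    """
    labels = Counter(label for label, _ in decisions)
    reasons = Counter(reason for label, reason in decisions if label == "Exclude")
    return {
        "screened": len(decisions),
        "included": labels["Include"],
        "excluded": labels["Exclude"],
        "unclear": labels["Unclear"],
        "top_exclusion_reasons": reasons.most_common(3),
    }


# Made-up screening decisions, for illustration only.
sample = [
    ("Include", None),
    ("Exclude", "wrong population"),
    ("Exclude", "not peer-reviewed"),
    ("Exclude", "wrong population"),
    ("Unclear", None),
]
tally = prisma_tally(sample)
print(tally)
```

If your counts and the model's tally disagree, trust your export and ask the model to re-screen the entries it missed.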

Prompt 3 — Synthesis engine (convergence, divergence, gaps)

Copy-paste:

“Act as my synthesis writer for [topic] using the studies marked ‘Include’ in my log (paste below).

- Write three synthesis paragraphs (not summaries):

  - Convergence: where findings align and plausible reasons.

  - Divergence: where they conflict and why (measurement, samples, identification).

  - Gap: the specific, researchable opening my paper will address.

- Add a debate map: 3–4 live controversies with when each claim is likely true (conditions/boundaries).

- Provide transition sentences that link each synthesis paragraph to my research question/claim.

- Output a claim-to-citation table: each sentence that asserts a fact → the exact study/studies supporting it.”

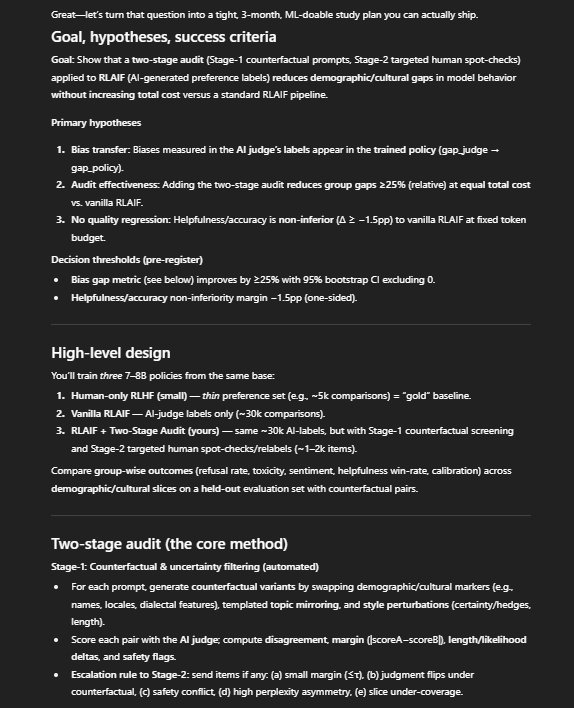

Prompts for methodology

You want your methods to match your question, your variables to be observable, and your analysis to be pre-planned. Use these prompts to achieve this.

Prompt 1 — Design chooser with trade-offs and rationale

Copy-paste:

“Act as my methods advisor. Research question: [paste]. Constraints: deadline [date], access [data/site/participants], skills [stats/qual/tools], ethics [sensitivities/approvals].

- Propose three viable designs (e.g., experiment, quasi-experiment, survey, case study, ethnography, content analysis, mixed methods).

- For each design, specify: logic of inference (how it answers my question), data required, core procedures, strengths/limits, main risks (bias, attrition, confounds), and resources/time.

- Recommend one design for my constraints and write a 120-word rationale I can paste into my paper.

- Output a methods outline (headings + bullets) aligned to journal conventions.”

Prompt 2 — Operationalization coach (variables, measures, protocols)

Copy-paste:

“Be my operationalization coach for [constructs/variables] in [field].

- Provide clear operational definitions and observable indicators for each construct.

- Propose measures/instruments: scale items with response options; coding rules for texts/media; interview/observation prompts; sensor/spec data where relevant.

- Define quality checks: for quant—reliability (α/test–retest), validity (content/construct/criterion); for qual—trustworthiness (credibility, transferability, dependability, confirmability).

- Produce a data dictionary: variable name, type, coding, source, planned analysis.

- Suggest a pilot plan (N, success criteria, edits if items underperform).”

Why it works:

You move from abstract constructs to things you can actually record. Built-in quality checks prevent flimsy measures. The dictionary keeps your dataset coherent and simplifies later analysis and replication.

How to adapt:

- Reusing a published scale? Add: “List citation, permissions, and adaptation notes (language/context).”

- Doing qualitative work? Ask for probe questions and an ethics mini-protocol (consent, anonymity, distress plan).
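For the reliability check (α) mentioned in the prompt, you can compute Cronbach's alpha on pilot data yourself rather than relying on a number the model reports. The sketch below uses the standard formula, α = k/(k−1) × (1 − Σ item variances / variance of total scores), with Python's standard library only. The function name `cronbach_alpha` and the pilot scores are made up for illustration.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a scale.

    item_scores: one list per scale item, each holding one score per
    respondent (all lists the same length, same respondent order).
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("need at least two items")
    sum_item_variances = sum(variance(item) for item in item_scores)
    # Total score per respondent = sum across items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    return (k / (k - 1)) * (1 - sum_item_variances / variance(totals))


# Made-up pilot data: 3 items, 5 respondents, 1-5 response scale.
pilot = [
    [4, 3, 5, 2, 4],
    [4, 2, 5, 3, 4],
    [5, 3, 4, 2, 5],
]
alpha = cronbach_alpha(pilot)
print(round(alpha, 2))
```

A real pilot needs far more than five respondents; the tiny dataset here only shows the mechanics.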

Prompt 3 — Sampling, analysis, and robustness plan

Copy-paste:

“Act as my sampling & analysis planner. Design: [from Prompt 1]. Population/context: [describe].

- Propose sampling strategy (frame, method, inclusion/exclusion, recruitment).

- Recommend sample size and justify (for quant: power assumptions/effect size; for qual: information power/saturation).

- Draft a pre-analysis plan:

  - Data prep (cleaning, missing data, outliers).

  - Primary analysis (tests/models or coding approach).

  - Assumption checks (normality, homoscedasticity, multicollinearity) or trustworthiness tactics (triangulation, member checks, audit trail).

  - Robustness (alternative specs, sensitivity analyses, negative cases).

- Output a timeline with milestones, risks, and mitigations.”
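To check a sample-size recommendation for a simple two-group comparison, you can run a rough power calculation yourself. The sketch below uses the common normal approximation, n per group ≈ 2 × ((z for 1 − α/2 + z for power) / d)², where d is the standardized effect size. It is an approximation under stated assumptions (two equal groups, two-sided test, comparison of means), not a replacement for dedicated power software, and the function name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group (normal approximation)."""
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_power = z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# A "medium" standardized effect of 0.5 is an assumption for illustration.
print(n_per_group(0.5))
```

Exact t-test calculations give slightly larger numbers, so treat this result as a lower bound when you compare it with what the model proposes.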

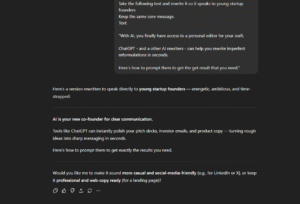

Prompts for drafting sections and full papers

Use these three prompts to turn notes into clean sections you can defend.

Prompt 1 — Section generator with guardrails (purpose → inputs → draft)

Copy-paste:

“Act as my academic section generator. Section: [e.g., 3.2 Results: Secondary Analyses]. Audience: [course/journal]. Word target: [N].

Inputs below: bullet notes, tables/figures, real citations I will use.

Write a clean draft that:

- Opens with a topic sentence stating the section’s purpose.

- Uses subheadings and short paragraphs.

- Integrates only my citations (no new sources).

- Adds signposts (“First,… Next,… Finally,…”).

- Ends with a 1–2 sentence takeaway tied to my claim.

Return: draft, then a claim→evidence map (each claim → table/figure/citation).”

Prompt 2 — Paragraph engine from bullets (evidence-first writing)

Copy-paste:

“Be my paragraph engine. I’ll paste bullet notes for one paragraph plus citation stubs (Author, Year).

Produce one academic paragraph that:

- Leads with a clear claim,

- Integrates 2+ citations with signal phrases,

- Balances summary, paraphrase, ≤15-word quote (only if justified),

- Includes one contrast/limitation sentence,

- Ends with a link-forward sentence setting up the next paragraph.

After the draft, show a mini log: which bullet became which sentence, and gaps you filled that I must verify or cut.”

Prompt 3 — Results→discussion pipeline (evidence first, claims second)

Copy-paste:

“Act as my results-to-discussion pipeline. I’ll paste tables/figures/codes plus a brief results summary.

- Write objective results paragraphs: one per display or theme; no interpretation; include exact numbers/themes and signposts to the display.

- For each results paragraph, write a paired discussion paragraph that:

  - interprets the finding,

  - links to research questions/hypotheses,

  - connects to specific sources from my review,

  - notes limitations/alternative explanations,

  - ends with a takeaway.

- Draft a 120–180 word synthesis that answers the main question and sets up the conclusion.”

Prompts for revision, feedback, and polishing

Use these prompts to surface weak claims, cut bloat, and keep tone and citations clean, without losing your voice.

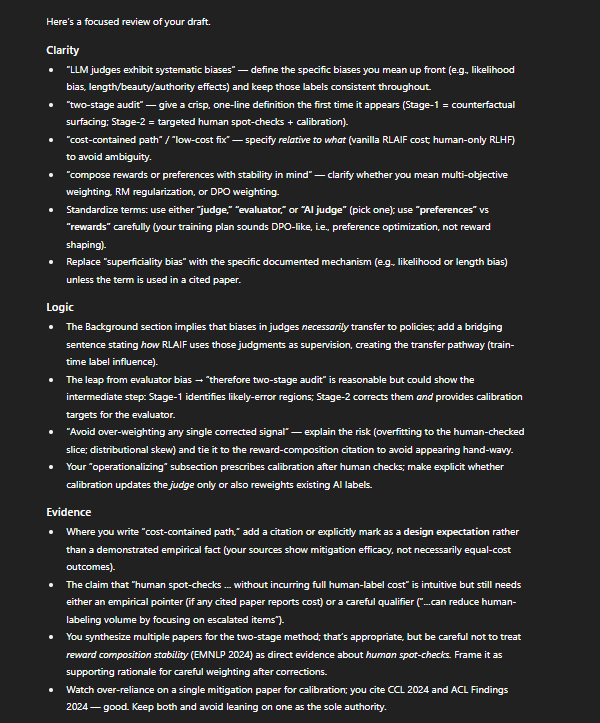

Prompt 1 — Reviewer simulation (clarity, logic, evidence)

Copy-paste:

“Act as a journal reviewer for [field/journal]. Here is my section [paste 600–1,000 words].

Evaluate and return bulleted notes under:

- Clarity — sentences that confuse, jargon to define, terms to standardize.

- Logic — missing steps, circular reasoning, unsupported leaps.

- Evidence — claims needing citations, weak sources to upgrade, citation drift.

- Structure — paragraphs without topic sentences, poor order, repetition.

- Style — passive voice to rewrite, vague verbs, nominalizations to fix.

End with:

- a one-paragraph summary of the main fixes,

- the three highest-impact edits,

- a checklist I can reuse for the rest of the paper.”

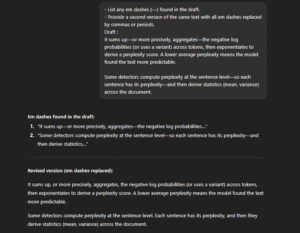

Prompt 2 — Concision and flow pass (cut 10–20% safely)

Copy-paste:

“Be my concision editor. Goal: cut [10–20%] while keeping meaning and citations. For the text below [paste 800–1,200 words]:

- Return a tightened version with shorter sentences and active verbs.

- Provide a change log: 8–10 before→after examples with the principle used.

- Mark low-value sentences to delete (throat-clearing, repetition, filler).

- Add signposts to smooth transitions.

Output order: revised text, then change log, then cut list.”
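Models often over- or under-shoot a requested cut, so it is worth measuring the result. Here is a minimal sketch that compares word counts before and after; the function name `cut_percentage` and the sample sentences are made up for illustration, and splitting on whitespace is only a rough word count.

```python
def cut_percentage(original, revised):
    """Percentage of words removed, using a simple whitespace word count."""
    before = len(original.split())
    after = len(revised.split())
    if before == 0:
        raise ValueError("original text is empty")
    return 100 * (before - after) / before


# Made-up sentences, for illustration only.
original = "In order to be able to understand the results, we first need to look at the data."
revised = "To understand the results, we first examine the data."
pct = cut_percentage(original, revised)
print(f"{pct:.0f}% cut; within 10-20% target: {10 <= pct <= 20}")
```

If the cut is far above your target, reread the revised text for dropped claims or citations before accepting it.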

Prompt 3 — Academic tone & citation integrity pass

Copy-paste:

“Act as my tone and citation editor. Text: [paste 600–1,000 words]. Style: [APA/MLA/Chicago].

- Tone — rewrite to be formal, precise, and readable; keep my voice. Remove hype and hedging.

- Terminology — standardize key terms; provide a definition box (≤20 words each).

- Citations — tag every claim that needs a source; suggest placement; flag citation drift.

- Quotes vs. paraphrase — advise where to paraphrase; keep quotes ≤15 words.

- Reference risks — flag unverifiable items (bad DOIs, predatory venues, outdated sources).

Return a revised passage plus a citation action list.”

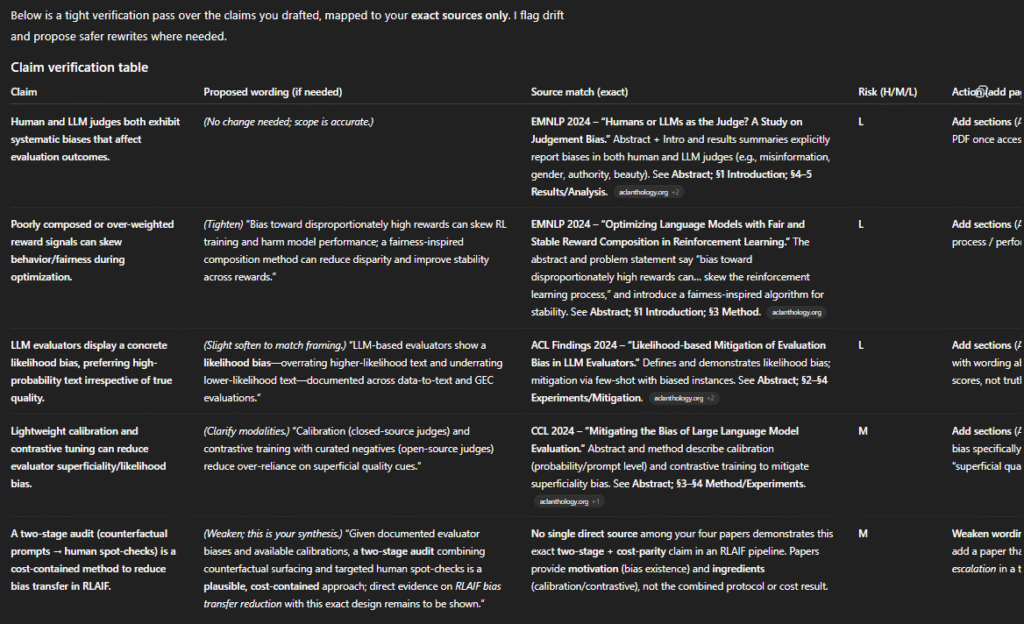

Prompts for verification and source checks

Use these prompts to verify every claim and every piece of evidence.

Prompt 1 — Source verifier & citation hygiene

Copy-paste:

“Act as my source verifier for [section/topic]. I’ll paste (a) claims with citation stubs (Author, Year, page/DOI if known) and (b) a reference list I built from real databases.

- Map each claim → exact source in my list; flag any claim with no matching source.

- Check citation drift: if my wording overstates the study, propose a safer rewrite and note pages to cite.

- Output a claim table: Claim | Proposed wording | Source match | Risk (H/M/L) | Action (add page / weaken / replace).

- Suggest stronger replacements only if you can name where to find them (database + search string). Do not invent references.”
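The first step of this prompt, mapping each claim to a source in your list, can be partly checked by script before you paste anything. The sketch below finds (Author, Year) stubs in a draft that have no matching entry in your reference list. It assumes single-author stubs in exactly that format; the function name `unmatched_citations` and the placeholder names "Alpha" and "Beta" are hypothetical and do not refer to real sources.

```python
import re


def unmatched_citations(draft, references):
    """Return (author, year) stubs found in the draft but not in references.

    references: iterable of (author, year) pairs, with year as a string.
    Only matches simple stubs like "(Alpha, 2020)".
    """
    stubs = set(re.findall(r"\(([A-Z][A-Za-z'-]+),\s*(\d{4})\)", draft))
    known = {(author, year) for author, year in references}
    return sorted(stubs - known)


# Placeholder draft and reference list, for illustration only.
draft = (
    "Remote work raises autonomy (Alpha, 2020). "
    "It may also blur boundaries (Beta, 2021)."
)
references = [("Alpha", "2020")]
missing = unmatched_citations(draft, references)
print(missing)
```

Anything this check reports is a claim to fix or cut before you ask the model about citation drift.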

Prompt 2 — Anti-hallucination guardrails for literature & data

Copy-paste:

“Be my hallucination guard for [section]. Work only with sources I paste or items with full bibliographic details I can verify.

Tasks:

- Rewrite my draft to remove unverifiable claims; tag any remaining uncertainty as UNCERTAIN.

- Produce a gap list (facts to check) with where to look (database, keywords, author watchlists).

- Provide two safer phrasings for each UNCERTAIN line, and note the evidence needed to restore the stronger version.

- Summarize data provenance for any dataset: owner, version/date, access path, and limitations.”

Prompt 3 — AI-use disclosure, transparency, and reproducibility

Copy-paste:

“Act as my integrity & reproducibility editor. Venue: [course/journal].

- Draft an AI-use disclosure: what AI did (ideation, editing, outline), what I did (analysis, interpretation), and how I verified facts and citations.

- Create a transparency box: software versions, packages, seeds, preregistration links, analysis scripts, and data access statement.

- Write an ethics & bias note: risks, mitigation steps, inclusion/exclusion rationale, and limitations I will acknowledge.

- Provide a replication checklist: folder structure, README items, code/data dictionary, anonymization log.

Keep it concise and accurate—no promises I cannot legally make.”
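The software versions and seeds for the transparency box are better captured by script than typed from memory. Here is a minimal sketch, assuming a Python analysis; the function name `transparency_record`, the seed value, and the package names are placeholders you would replace with your own.

```python
import json
import platform
import random
import sys
from importlib import metadata


def transparency_record(seed, packages):
    """Fix the random seed and collect version details for a methods note."""
    random.seed(seed)  # seeds Python's built-in generator only
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not installed"
    return {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "seed": seed,
        "packages": versions,
    }


# Placeholder seed and package names; list the packages your analysis uses.
record = transparency_record(seed=2024, packages=["numpy", "pandas"])
print(json.dumps(record, indent=2))
```

Save the printed record alongside your analysis scripts so the disclosure matches what you actually ran. Libraries with their own random generators need to be seeded separately.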