AI can fill your blank page, but you’d better pick the right large language model (LLM).

Here, we rank the leading LLMs by their writing capability, combining public leaderboard data with our own hands-on tests.

We also provide a sample analysis across seven writing tasks, giving you a concrete overview of each model’s writing style.

✨ Meet Muse — The LLM Built for Storytellers

If you’ve ever wished your LLM could write with real emotion, rhythm, and voice – Muse by Sudowrite was created exactly for that. It’s the only AI trained purely on outstanding fiction and creative prose, so your scenes, characters, and dialogue sound vivid and human.

Tested & Recommended by us!

🚀 Try Muse for free here. *No setup or credit card needed: just open Sudowrite and try Muse.*

How We Ranked the LLMs

To find the best models for writing, we used two filters: objective data and real-world writing tasks.

First, we looked at the Chatbot Arena

Chatbot Arena is a crowdsourced leaderboard run by LMSYS. It compares large language models (LLMs) based on user preferences across many tasks—including creative writing.

We pulled models from the Creative Writing leaderboard. This gave us a fair starting point: which LLMs perform well when users actually vote on writing quality.

Then, we compared each model’s creative-writing rank with its overall rank across all tasks.

If a model ranks much higher in writing than overall, it’s a good sign it has a special strength (especially if it’s a smaller model).
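The rank comparison above is simple arithmetic: subtract a model’s creative-writing position from its overall position, and a positive gap hints at a writing specialty. Here is a minimal Python sketch using the Chatbot Arena positions this article reports for GPT-5.2 and Grok 4. The names `ArenaRanks`, `writing_gap`, and `writing_specialists`, and the 2-place cut-off for “much higher,” are illustrative assumptions, not part of any leaderboard’s methodology.

```python
from dataclasses import dataclass


@dataclass
class ArenaRanks:
    model: str
    overall: int           # position on the general text leaderboard (1 = best)
    creative_writing: int  # position on the creative-writing leaderboard


def writing_gap(r: ArenaRanks) -> int:
    """Places gained in creative writing versus overall; positive means stronger at writing."""
    return r.overall - r.creative_writing


def writing_specialists(ranks: list[ArenaRanks], min_gap: int = 2) -> list[str]:
    """Models whose writing rank beats their overall rank by at least `min_gap` places.

    The default of 2 places is a hypothetical cut-off for "ranks much higher".
    """
    return [r.model for r in ranks if writing_gap(r) >= min_gap]


# Chatbot Arena positions as reported in this article's leaderboard sections.
ranks = [
    ArenaRanks("GPT-5.2", overall=10, creative_writing=9),
    ArenaRanks("Grok 4", overall=11, creative_writing=8),
]

for r in ranks:
    print(f"{r.model}: gap {writing_gap(r):+d}")
print(writing_specialists(ranks))  # ['Grok 4']
```

With these inputs, Grok 4 (+3 places) clears the cut-off and GPT-5.2 (+1) does not; where you set the threshold is a judgment call.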

Next, we tested them ourselves

We created seven writing tasks that mirror what professionals like you actually do:

- A fictional scene (with tight creative constraints)

- A poem (with meter, imagery, and a twist)

- An SEO blog post (with keyword placement and structure)

- Landing-page copy (with hierarchy and persuasion)

- A short-form essay (with clear thesis and references)

- A scientific research note (with citations and accuracy)

1. Gemini (3 Pro)

Gemini 3 Pro is, in my opinion, the most well-rounded LLM currently available for writers. Its strength lies in precision, reasoning, and detailed outputs, especially for SEO, essay, and research writing.

While its fiction performance isn’t the absolute best, it still delivers compelling narratives with logical coherence and solid storytelling.

With Google’s massive resources behind it, I expect the Gemini family to remain a solid choice for writers.

Technical specs

- Context window (input): 1,048,576 tokens (≈1M). Output window: up to 65,535 tokens per response (default cap).

- Hosting options: Vertex AI (Model Garden / API), Google AI Studio (Gemini Developer API), and consumer Gemini app via Google AI Pro/Ultra subscriptions (1M-token context in app tiers).

- Native multimodal inputs (helpful for pulling structure from PDFs, slides, or screenshots)

Leaderboard ranking

It hit #1 on the Chatbot Arena text leaderboard around launch, as well as on the creative writing leaderboard. On OpenRouter’s live rankings, Gemini 3 Pro typically sits among the most-used and highest-rated models (usage rankings fluctuate daily as new variants surge).

Sample analysis

On our tasks, Gemini 3 Pro excelled where structure and clarity mattered most.

- SEO brief → outline (coffee roasting): Gemini consistently produced clean H2/H3 hierarchies, mapped steps to intent, and surfaced entities you actually need to cover. It didn’t dump generic tips; it sequenced actions (charge temp, first crack cues, cooling) and flagged cautions you’d fact-check once. That “procedural sober-mindedness” cut editor rewrites. This lines up with Google’s positioning around reasoning and long-context planning.

- Long-form & research: On argumentative essays and literature-style explainers, Gemini’s strength was synthesis. It grouped claims, then supported them with concise source notes (when required by our prompt kit). The draft sometimes arrived a bit “plain,” but read-aloud clarity was high, and the edit distance stayed low. That’s a trade most content teams will take.

- Creative writing: For voicey fiction and lyrical copy, Claude edged it out in spark and imagery. Gemini still produced coherent scenes and followed constraints, but the prose leaned safe unless we fed stronger style shots. If your brand voice is plain-English and practical, this “safety” becomes a feature. If you need lush metaphor or dialogue subtext, you’ll likely pair Gemini with a Claude pass.
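Structural checks like the H2/H3 review above are easy to automate before an editor reads a draft from any model. A minimal Python sketch, assuming the outline arrives as markdown-style lines (`## ` for H2, `### ` for H3); `check_outline` and its exact-H2-count rule are hypothetical helpers of our own, not a Gemini feature.

```python
import re
from typing import Optional

# "## Title" is an H2, "### Title" is an H3; other heading levels are ignored.
HEADING = re.compile(r"^(#{2,3})\s+(.+)$")


def check_outline(outline: str, expected_h2: Optional[int] = None) -> list[str]:
    """Return structural problems in a markdown outline (an empty list means it passes)."""
    problems: list[str] = []
    h2_count = 0
    for lineno, line in enumerate(outline.splitlines(), start=1):
        match = HEADING.match(line.strip())
        if not match:
            continue  # body text and bullets are not checked
        if len(match.group(1)) == 2:
            h2_count += 1
        elif h2_count == 0:
            # An H3 with no parent H2 breaks the hierarchy.
            problems.append(f"line {lineno}: H3 '{match.group(2)}' appears before any H2")
    if expected_h2 is not None and h2_count != expected_h2:
        problems.append(f"expected {expected_h2} H2 sections, found {h2_count}")
    return problems


draft = """## Choosing green beans
### Origin and processing
## Roasting stages
### First crack cues
### Cooling
"""
print(check_outline(draft, expected_h2=2))  # []
print(check_outline("### Intro\n## Body", expected_h2=3))
```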

Pricing

- Bundled subscriptions: If your team mainly needs drafting inside Google’s apps, Google AI Pro/Ultra plans include access to Gemini 3 Pro for a flat per-user fee. You won’t micro-manage tokens; you’ll work inside Workspace (Docs, Gmail) and related tools.

- Gemini API (AI Studio): Token-based pricing for 3 Pro. Google lists tiered per-million-token input/output rates on its pricing page; check that page before you budget, because rates shift. If you’re prototyping prompts or building a small workflow, this is the simplest start.

- Vertex AI (GCP): Enterprise deployment with org-level controls, observability, and quota management. Pricing is also token-based and published on Google Cloud’s Vertex AI page. If you need governance, SSO, and VPC-SC, this tends to be the right lane.

2. Claude (Sonnet 4.5 / Opus 4.5)

The Claude family, with the latest Sonnet 4.5 and Opus 4.5, is definitely the LLM family with the most soul in its writing. Its models especially excel at creating vivid characters and maintaining a consistent, engaging narrative voice, often more naturally than any other model.

They are less precise than Gemini on factual and step-by-step tasks, but that’s part of their charm: they prioritize creativity and voice over rigidity.

Technical specs (Claude Opus 4.5)

- Context window (input): 200,000 tokens (Vertex AI listing).

- Output window (single response): up to 32,000 tokens (Vertex AI).

- Availability / hosting: Anthropic API, Google Vertex AI, Amazon Bedrock

- Private/local: No local/offline self-hosting; delivered as managed services via Anthropic/API partners. (See partner model docs.)

Technical specs (Claude Sonnet 4.5)

- Context window (input):

- Standard: 200,000 tokens.

- Long-context (beta): 1,000,000 tokens for eligible orgs/tiers; long-context pricing applies to requests >200K input tokens.

- Output window (single response): up to 64,000 tokens

- Availability / hosting: Anthropic API, Vertex AI, Amazon Bedrock; 1M-context availability may be gated by region/tier.

Leaderboard ranking

Opus 4.1 and Sonnet 4.5 also sit in first place on the Chatbot Arena text and creative writing rankings. Claude ranks high on OpenRouter’s usage/ranking lists and shows up prominently in weekly popularity snapshots.

Sample analysis (from our battery)

Here’s how Claude behaved on these exact tasks:

- Creative writing (short story + sonnet). This is where Claude shines. It produced the most natural dialogue, richer imagery, and cleaner rhythm in the Shakespearean sonnet test. The piece read like a human with intentions, not an outline with adjectives. If your brand voice needs narrative lift—scripts, founder letters, campaign stories—start with Sonnet 4.5. When you need more depth or multi-step planning, kick it to Opus 4.1 and then edit for brevity.

- SEO outline + section draft. Claude followed instructions well and stayed on topic, but its default style can get a touch lyrical. For SEO, we tightened the brief (banned phrases, sentence-length targets) and asked for entity coverage and step-by-step how-tos. That brought it in line with what editors need. Expect fewer rewrites if you pin it to concrete actions and examples.

- Argumentative essay + literature-style explainer. Opus 4.1 handled the multi-claim structure and counter-arguments with the most control. It grouped points logically, then stitched transitions that sounded human. When our prompt required source notes below the draft, Opus kept a cleaner separation between cites and prose. For long research posts, Opus → Sonnet is a productive handoff.

- Landing page + social variants. Voice control is strong, but Claude can occasionally over-format (too many bullets or a flourishy opener). Solve this by providing a one-screen style card (tone sliders, banned openers, CTA patterns) and a word budget per block. You’ll get conversion-ready copy on the first pass.
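The one-screen style card described above can double as an automated pre-check before copy reaches an editor. A minimal Python sketch: the `StyleCard` fields (banned phrases, a word budget, a bullet cap, an emoji ban) mirror rules mentioned in this section, but the structure, the names, and the rough emoji regex are our own illustrative assumptions.

```python
import re
from dataclasses import dataclass, field

# Rough emoji detector (main pictograph and symbol blocks); not exhaustive.
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


@dataclass
class StyleCard:
    banned_phrases: list[str] = field(default_factory=list)
    max_words: int = 120   # word budget for one copy block
    max_bullets: int = 3   # cap on "- " bullet lines
    allow_emoji: bool = False


def review(block: str, card: StyleCard) -> list[str]:
    """Return style-card violations for one block of copy (an empty list means it passes)."""
    issues: list[str] = []
    lowered = block.lower()
    for phrase in card.banned_phrases:
        if phrase.lower() in lowered:
            issues.append(f"banned phrase: {phrase!r}")
    words = len(block.split())
    if words > card.max_words:
        issues.append(f"over word budget: {words} > {card.max_words}")
    bullets = sum(1 for line in block.splitlines() if line.lstrip().startswith("- "))
    if bullets > card.max_bullets:
        issues.append(f"too many bullets: {bullets} > {card.max_bullets}")
    if not card.allow_emoji and EMOJI.search(block):
        issues.append("emoji found")
    return issues


card = StyleCard(banned_phrases=["in today's fast-paced world", "unlock"], max_words=40)
sample_copy = "Unlock your best roast yet 🚀\n- Even heat\n- Fast cooling"
print(review(sample_copy, card))  # ["banned phrase: 'unlock'", 'emoji found']
```

Substring matching is deliberately blunt; a production check would likely match on word boundaries and count sentence lengths too.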

Pricing

- Claude plans (Claude.ai): Free, Pro (~$20/month or ~$17/month annual), Team/Enterprise tiers for higher limits and org controls. These plans give you UI access to Sonnet 4.5 and, on higher tiers, Opus 4.1. Check the pricing page for the latest caps in your region.

- API pricing (per million tokens):

- Sonnet 4.5: ~$3 input / $15 output. Supports prompt caching and batch discounts. Good default for production writing tasks.

- Opus 4.1: ~$15 input / $75 output. Use when you truly need frontier reasoning, then pass back to Sonnet for cheaper iterations. Available via Anthropic API, Amazon Bedrock, and Google Vertex AI.

- Haiku 4.5 (budget option): ~$1 / $5 and very fast; useful for drafts that your editor will heavily rewrite, or for sub-agents.
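Because these are per-million-token rates, budgeting a job is straightforward multiplication. A minimal sketch using the approximate list prices quoted above (they change, so confirm them on Anthropic’s pricing page); it ignores prompt-caching and batch discounts, the dictionary keys are our own labels rather than API model IDs, and the token counts are hypothetical.

```python
# Approximate USD list prices per 1M tokens as quoted above: (input, output).
# Keys are our own labels, not Anthropic API model IDs.
RATES = {
    "sonnet-4.5": (3.00, 15.00),
    "opus-4.1": (15.00, 75.00),
    "haiku-4.5": (1.00, 5.00),
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request, ignoring prompt caching and batch discounts."""
    in_rate, out_rate = RATES[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


# Hypothetical research post: one Opus planning pass, then three Sonnet revisions.
plan = job_cost("opus-4.1", input_tokens=20_000, output_tokens=4_000)
revisions = 3 * job_cost("sonnet-4.5", input_tokens=10_000, output_tokens=2_000)
all_opus = plan + 3 * job_cost("opus-4.1", input_tokens=10_000, output_tokens=2_000)

print(f"Opus -> Sonnet handoff: ${plan + revisions:.2f}")
print(f"Opus for every pass:    ${all_opus:.2f}")
```

Under these assumptions the handoff costs about half as much as running every pass on Opus, which is the economic case for passing iterations back to Sonnet.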

3. ChatGPT (GPT-5.2)

ChatGPT (GPT-5.2) continues OpenAI’s tradition of user-friendly, intelligent writing assistance, which also means it is still uneven in creativity. It remains highly capable for nonfiction, essay writing, and SEO optimization, and its research and retrieval features give it a strong edge in producing data-rich, well-structured content.

However, in fiction and storytelling, it feels inconsistent. Some stories show glimpses of brilliance, while others lack cohesion or emotional impact. It sometimes overuses literary tropes or produces metaphor-heavy text without clear narrative direction. We’ll definitely miss some of the creative “spark” from GPT-4.5, which had more originality and stylistic diversity.

Technical specs

- Context window (input): up to ~272K input tokens, paired with ~128K output/reasoning tokens for a ~400K total context length on GPT-5 in the API. This is enough to load style guides, golden paragraphs, brief, research notes, and a working outline without constant pruning.

- Output window (single response): up to ~128K tokens on GPT-5. Useful for long drafts, structured briefs, and batch variant generation.

- Private / local: There’s no local/offline self-hosting for GPT-5. You connect via OpenAI’s managed services or approved cloud providers. Use enterprise controls if you need stricter governance; the specs and limits live in the official model docs.
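Whether a packed prompt actually fits is also simple arithmetic. A rough Python sketch, assuming the common rule of thumb of about four characters per English token (real tokenizers vary, so treat results as estimates) and the ~272K input limit quoted above; the helper names, the prompt parts, and the 10% headroom for tokenizer error are hypothetical.

```python
CHARS_PER_TOKEN = 4    # rough rule of thumb for English prose; real tokenizers differ
INPUT_LIMIT = 272_000  # approximate GPT-5 API input limit quoted above


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(parts: dict[str, str], limit: int = INPUT_LIMIT, headroom: float = 0.9) -> bool:
    """True if all prompt parts together stay under `headroom` x `limit` estimated tokens."""
    total = sum(estimate_tokens(text) for text in parts.values())
    return total <= limit * headroom


# Hypothetical prompt pack; "x" * n stands in for n characters of real text.
prompt = {
    "style guide": "x" * 40_000,
    "golden paragraphs": "x" * 8_000,
    "brief": "x" * 4_000,
    "research notes": "x" * 600_000,
    "outline": "x" * 6_000,
}
total = sum(estimate_tokens(t) for t in prompt.values())
print(f"~{total:,} tokens; fits: {fits(prompt)}")  # ~164,500 tokens; fits: True
```

For exact counts, run the model’s own tokenizer instead of the character heuristic.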

Leaderboard ranking

On Chatbot Arena (LMSYS), GPT-5.2 models sit in 10th place in the text ranking and 9th for creative writing (lower than GPT-4o and GPT-4.5!). On OpenRouter, GPT-5.2 variants appear in the top usage/rating cohorts (rankings fluctuate with new releases and category filters).

Sample analysis

Here’s how it worked out:

- SEO brief → outline → section draft: GPT-5.2 consistently produced clear H2/H3 hierarchies, mapped steps to search intent, and surfaced relevant entities. When we enabled ChatGPT Search or Deep Research before drafting, the source notes were easier to verify in editorial. If your pipeline values speed to a grounded first draft, GPT-5.2 is a strong default.

- Argumentative essay & literature-style explainer: GPT-5.2 handled multi-claim structure well, stitched transitions cleanly, and kept a neutral, professional tone. It’s especially effective when you need data-backed copy or a synthesis pass before human polish.

- Creative writing: This is where outputs felt less consistent in our tests. Some stories landed; others leaned on strained metaphors or safe structures. If you need vivid imagery and dialogue, we’d pair GPT-5’s research/structure pass with a creative pass in Claude, then bring the draft back to GPT-5 for fact-check and tightening.

- Editorial friction: GPT-5.2 drafts usually required smaller edits for structure and factuality, with occasional tweaks for voice warmth. If your brand voice is pragmatic and clean, it fits quickly; if you want lyrical punch, supply stronger few-shot examples (golden paragraphs) or run a style-lift pass elsewhere.

Pricing

Pick based on how you’ll use it—UI vs API.

- API (GPT-5 family): Official pricing lists GPT-5 at ~$1.25 per 1M input tokens and ~$10 per 1M output tokens (plus lower-cost Mini/Nano tiers). This is the reference you’ll use for programmatic workloads, prompt caching, and batch jobs. Always confirm current rates on the pricing page before budgeting.

- ChatGPT subscriptions (UI):

- Free: limited access and usage caps.

- Plus: expanded limits and access to advanced tools; listed as $20/month on the public pricing page (regional currency may vary). For most solo creators, this is the easiest start.

- Team / Enterprise: higher limits, admin controls, and governance. Check the live page for SKUs and regional availability; API usage is billed separately from ChatGPT plans.

4. DeepSeek (V3.2-Exp / V3.1 / R1)

DeepSeek V3.1 is the most balanced open-source LLM available. It performs well across many genres (fiction, essays, poetry, and SEO) rather than excelling in just one area.

The open-source nature is a huge advantage: you can fine-tune, host locally, and customize your own filters, offering independence from corporate ecosystems. That makes it particularly appealing for writers who value control, transparency, or want to experiment with model tuning.

Technical specs

- Context window (input):

- DeepSeek-V3.2-Exp (API): 128K tokens. Max output: 4K by default, up to 8K on the non-thinking chat model; the thinking (“reasoner”) path supports 32K default / 64K max output.

- DeepSeek-V3.1 (providers): commonly exposed with ~164K context; exact limits vary by host.

- Open-weight checkpoints (V3 / R1 / V3.1): context depends on the build you run and the serving stack. Start from the model card and your inference framework’s limits.

- Private/local LLM:

- Yes (open-weight): You can self-host the V3 / R1 / V3.1 checkpoints for full data control and fine-tuning.

- Managed options: R1 and V3 variants are also available on major clouds and model gateways if you want governance without running GPUs yourself.

- Speed / responsiveness:

- On the API, generation is competitive and benefits from prompt caching (see pricing below). On local runs, throughput depends on your quantization, batch size, and GPU VRAM. (DeepSeek publishes optimized kernels and infra repos that help you cut latency.)

- Modes you’ll use:

- Chat (V3.x) for general writing with tool use.

- Reasoner (R1 / V3.x “thinking” modes) for deeper planning and multi-step tasks. V3.1 adds a hybrid switch between thinking and non-thinking via chat template—handy when you only want “thinking” on hard passages.

Leaderboard ranking

- On LMSYS Chatbot Arena, DeepSeek-R1 sits in the leading pack across overall and creative categories.

- On OpenRouter, DeepSeek V3 / V3.1 are consistently in the high-usage cohort among open models

- In head-to-heads, Qwen 2.5-Max sometimes edges DeepSeek on certain reasoning and arena scores—but DeepSeek remains the most widely adopted open writing option because of its quality-per-cost profile.

Sample analysis (from our battery)

You want a model that’s “good at everything” and easy to control. That’s the DeepSeek vibe.

- SEO brief → outline → section draft: DeepSeek produced solid, step-wise structure and didn’t wander. It needed light voice coaching to avoid generic phrasing, but entity coverage and task sequencing were dependable. If you standardize your prompt kit (voice sliders, banned phrases), it drafts clean copy with low edit distance.

- Argumentative essay & literature-style explainer: With thinking enabled, DeepSeek grouped claims sensibly and kept transitions tight. Source notes were usable; you’ll still run a human verification pass, but the scaffolding held up.

- Creative writing (short story + sonnet): R1/V3.1 turned in better-than-expected fiction for an open model. The sonnet held meter and rhyme convincingly; the short story was coherent and occasionally inventive, if a bit conservative in imagery compared to Claude. If you want more color, add stronger few-shot style paragraphs.

- Landing page + social variants: Good clarity; sometimes list-heavy. We curbed over-formatting and emoji drift with a one-screen style card and stricter word budgets.

Pricing

- Official API pricing (V3.2-Exp):

- Input: $0.28 per 1M tokens ($0.028 per 1M with cache hit)

- Output: $0.42 per 1M tokens

- Context: 128K; output caps noted above (8K chat; 64K reasoner).

These rates are listed on DeepSeek’s own pricing page.

- Third-party hosts: Some providers advertise ~164K context for V3.1 and similarly low prices (sometimes even lower promotional rates). Always validate context and limits per host before you budget.

- Open-weight (self-hosted): Model files are free to download; your costs are compute + ops. If you already own GPUs (or rent spot instances), this can beat API pricing for heavy throughput—at the expense of MLOps overhead. Start with the official repos and kernels.
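Prompt caching pays off most when every request repeats a long shared prefix (style guide, golden paragraphs). A minimal Python sketch of the blended cost using the V3.2-Exp list rates above ($0.28 per 1M input tokens on a cache miss, $0.028 on a hit, $0.42 per 1M output tokens); the batch size, token counts, and 80% hit fraction are hypothetical.

```python
MISS_RATE = 0.28    # USD per 1M input tokens, cache miss (V3.2-Exp list price above)
HIT_RATE = 0.028    # USD per 1M input tokens, cache hit
OUTPUT_RATE = 0.42  # USD per 1M output tokens


def batch_cost(requests: int, input_tokens: int, output_tokens: int, hit_fraction: float) -> float:
    """USD cost for `requests` calls, where `hit_fraction` of each prompt is served from cache."""
    if not 0.0 <= hit_fraction <= 1.0:
        raise ValueError("hit_fraction must be between 0 and 1")
    input_rate = hit_fraction * HIT_RATE + (1 - hit_fraction) * MISS_RATE
    per_request = (input_tokens * input_rate + output_tokens * OUTPUT_RATE) / 1_000_000
    return requests * per_request


# Hypothetical: 1,000 SEO sections, each with a 20K-token prompt and a 1.5K-token draft.
no_cache = batch_cost(1_000, 20_000, 1_500, hit_fraction=0.0)
cached = batch_cost(1_000, 20_000, 1_500, hit_fraction=0.8)
print(f"no cache: ${no_cache:.2f}, 80% cached: ${cached:.2f}")
```

Under these assumptions, caching cuts the batch from about $6.23 to about $2.20, because input tokens dominate the bill when prompts are long and drafts are short.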

5. Grok (Grok 4 / Grok 4 Fast)

Grok 4 is the most provocative and personality-driven model in this lineup. It’s less censored and more daring in tone. The model also demonstrates solid reasoning and factual integration, particularly in essays and op-eds.

However, Grok’s creativity can feel unpredictable: sometimes brilliant, sometimes unpolished. The same “freedom” that makes it interesting also makes it less consistent. It’s ideal for writers who want to explore controversial, unconventional, or highly individualistic tones.

Technical specs

- Context window (input): Grok 4 supports ~256K tokens. Grok 4 Fast expands this to ~2M tokens (two variants: reasoning and non-reasoning). If you pad briefs with long research notes or transcripts, this headroom is handy.

- Output window: Matches the above limits by request; long responses stream. (Reasoning tokens—when exposed via gateways—count as output.)

- Private/local: No local/offline build. You access Grok via xAI’s API or supported gateways; real-time X (Twitter) search is native to Grok’s consumer apps and can be toggled in product.

- Speed: Grok 4 Fast is the throughput play (two SKUs: reasoning and non-reasoning) with the 2M context. Use Fast when you need quick iterations or you’re summarizing big corpora.

- Data posture / vibe: Grok leans into “spicier” answers and real-time feeds from X, which can yield fresh angles—and requires your editorial guardrails.

Leaderboard ranking

Grok 4 is listed 11th in the Chatbot Arena text ranking and 8th in the creative writing ranking.

Sample analysis (from our battery)

You asked how it writes, not just how big its context is.

- Creative writing: Grok was the outlier in a useful way. It took bolder swings—surprising settings or references—and occasionally reached for contrarian angles in theme and character. In your words: “edgy vibe.” That fits founder letters, narrative ads, or essays where you want a POV. You’ll still copy-edit for brand safety, but the voicey baseline is valuable. (This aligns with how xAI markets Grok’s tone and real-time culture feed.)

- Essays / op-eds: Grok tended to pull in alternative or historical references that other models didn’t (e.g., economists or older debates). That creates texture. Your editor should verify quotes and attributions before publishing—real-time pulls can be messy.

- SEO outline → section draft: “Fine, not the best” is fair. Grok followed the brief and hit entities, but it sometimes preferred provocative framing over steady step-by-step how-to. If your brand is practical and minimal, keep your style card tight (banned metaphors, sentence-length targets, exact H2/H3 count) and it behaves.

- Fact pattern & sourcing: When Grok’s real-time search is on, you’ll get fresher mentions—plus the occasional non-authoritative source. We work around this with our source-notes-below-the-line rule and a human fact-check pass before anything ships.

Pricing

- xAI API (list pricing):

- Grok 4: listed at $3.00 / 1M input tokens and $15.00 / 1M output tokens; 256K context. Pricing steps up for very large requests.

- Grok 4 Fast (reasoning & non-reasoning): 2M context with low rates shown as $0.20–$0.50 per million tokens (SKU-dependent). Designed for throughput-heavy use.

- Consumer access (apps/subscriptions): Grok 4 is available in tiers on X (Premium/Premium+, SuperGrok, SuperGrok Heavy), with the flagship models unlocked at higher tiers; xAI’s site and updates note availability and positioning. Use these plans if your team mostly writes inside the Grok app.

6. Qwen (Qwen 2.5-Max / Qwen 3)

Qwen is a strong open-source contender alongside DeepSeek.

I find it particularly good for essays, literature reviews, and structured nonfiction. The model’s outputs are often detailed and well-organized, though sometimes overly formatted. In creative writing, it performs decently but lacks the narrative instinct of Claude.

Technical specs

- Context window (input). There are two tracks to know:

- Open-weight releases. Qwen shipped open models with up to 1M-token context (e.g., Qwen2.5-7B/14B-Instruct-1M), so you can self-host long-context drafting without a vendor lock.

- Hosted/API SKUs. Specs vary by provider. On OpenRouter, Qwen-Max (based on Qwen 2.5) lists ~32K context. On Alibaba Cloud’s Model Studio, the Qwen Plus / 2.5 family offers tiered pricing up to 1M input tokens per request (with separate “thinking” vs “non-thinking” modes). Check which SKU you’re actually calling before you paste a megadoc.

- Output window. Output caps follow the SKU (and “thinking”/reasoning mode). Alibaba documents separate prices/tiers for non-thinking vs thinking generations as context grows (≤128K, ≤256K, up to 1M).

- Private / local. Qwen maintains official open-weight repos (Hugging Face, GitHub). You can self-host and fine-tune, or run managed via Alibaba Cloud. This is ideal if you want EU-style data posture or on-prem control.

- Speed / modes. Expect faster, lighter SKUs for drafting and reasoning (“thinking”) modes for deeper planning. As with other MoE models, throughput depends on the host and your quantization/batch choices when self-hosting.

Leaderboard ranking

Qwen variants typically appear just behind DeepSeek in the Chatbot Arena text and creative writing rankings.

Sample analysis

Here’s the practical read:

- Essays & research-heavy non-fiction (strong). Qwen’s reasoning modes delivered clear claim grouping, supporting examples, and orderly transitions. On literature-style explainers, it stacked citations neatly when we enforced “source notes below the draft.” If your calendar leans into white papers, explainers, and thought leadership, Qwen held its own against DeepSeek.

- SEO brief → outline → section. Out of the box, Qwen followed structure and entity coverage well. It sometimes over-formatted or leaned into emoji in landing-page copy. We fixed that with a one-screen style card: banned emoji, word budgets, exact H2/H3 counts, and “no bullet walls.” With those rails, the drafts were clean and fast to edit—very workable for production.

- Creative writing. Competent and occasionally inventive, but not as lyrical as Claude and not as naturally “voicey” as Grok. The short story scenes were coherent and on-brief; the Shakespearean sonnet was solid on rhyme/meter. If you want more color, add stronger few-shot style paragraphs or route a final punch-up to a creative-first model.

Pricing

Budget depends on where you run it.

- Alibaba Cloud Model Studio (API). Qwen Plus/2.5 family uses tiered pricing by context band with separate rates for non-thinking and thinking generations. Example bands documented today: ≤128K, ≤256K, and (256K, 1M] inputs—with per-million token rates scaling at each tier. This is your reference if you’re deploying inside Alibaba Cloud.

- OpenRouter (hosted). Qwen-Max lists around $1.60/M input and $6.40/M output with ~32K context (providers may vary). Good for quick pilots and prompt testing across vendors.

- Self-hosted (open-weight). Model files are free; you pay compute + ops. If you already have GPUs (or rent spot instances), self-hosting the 1M-context open releases can be cost-effective at volume—provided you’re ready for MLOps work.
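With tiered pricing, the per-token rate depends on how large each request is, so it helps to know which band a prompt lands in before you budget. A minimal Python sketch of that lookup using the band edges documented above (≤128K, ≤256K, and up to 1M input tokens). It returns a band label rather than a price, since per-band rates aren’t quoted here, and treating “K” as 1,000 tokens is our assumption; Alibaba’s exact boundaries may differ.

```python
# Band edges in input tokens; "K" is assumed to mean 1,000 here (check Alibaba's docs).
BANDS = [
    (128_000, "<=128K"),
    (256_000, "<=256K"),
    (1_000_000, "256K-1M"),
]


def pricing_band(input_tokens: int) -> str:
    """Return the context band a request falls into; raise if it exceeds the largest band."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    for upper, label in BANDS:
        if input_tokens <= upper:
            return label
    raise ValueError(f"{input_tokens:,} tokens exceeds the largest documented band")


for size in (40_000, 200_000, 700_000):
    print(f"{size:,} input tokens -> {pricing_band(size)} band")
```

If higher bands carry higher rates on your account, splitting a megadoc into smaller requests may keep each call in a cheaper band; check the live rates before relying on that.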

7. Mistral (Medium 3 / Large 2.1)

Mistral is Europe’s most dependable open-source LLM.

It’s ideal for writers and organizations who prefer European data handling standards or want to keep content local. In terms of writing, it’s middle-of-the-pack: structured, predictable, and clear, though occasionally rigid or formulaic.

Fiction from Mistral can feel a bit mechanical, with less emotional nuance than Claude or DeepSeek. However, its nonfiction and SEO writing are reliable, consistent, and well-structured.

Mistral’s main strength lies in its openness — you can fine-tune it, host it privately, and adapt it to your exact workflow.

Technical specs

- Mistral Medium 3: ~128k tokens (multimodal; text+vision). This is the current general-purpose flagship Mistral pushes for enterprise use in 2025.

- Mistral Large 2.1: also documented at ~128k on partner listings. Treat Large as the “heavier” alternative if you’ve standardized on it.

- Codestral 2 (code-first): 32k context on Vertex AI (GA 2025-10-16). Use it when drafting or refactoring code in docs/tutorials.

- Output window: Long, streamed generations; practically chapter-length. In production, you’ll still cap by section for editorial control. (Vendors present output as part of the same 128k budget.)

- Private/local options: Mistral continues to publish open weights with self-deployment guides (vLLM, etc.). If you need EU posture, VPC isolation, or on-prem testing, this is a key reason teams pick Mistral.

- Where you’ll run it: Direct via La Plateforme / Le Chat, or via partner clouds/marketplaces (Vertex, Azure).

Leaderboard ranking

On public preference boards, Mistral models generally sit mid-pack—often outside the top 10 but well within the top 20 for text-only arenas, with placement shifting as new releases land. OpenRouter product pages show usage/popularity snapshots for Mistral Medium 3 across hosts.

Sample analysis (from our battery)

You want to know where Mistral actually helps you ship:

- SEO outline → section draft (strong enough). Mistral delivered clear H2/H3s, good on-page entity coverage, and sensible step-by-step structure. It isn’t the most inventive drafter, but it rarely wandered. For production SEO, that’s fine: editors care more about specificity and instruction following than purple prose.

- Argumentative essay / explainers (solid structure, sober tone). On essays and literature-style explainers, Mistral grouped claims cleanly and kept transitions tidy. Compared with top “reasoning” flagships, it can feel a bit procedural—but if your brand voice is plain-English and practical, that lands well.

- Creative writing (just okay). Your take matches ours: usable, not the most subtle. Dialogue and imagery are competent, but the voice leans safe and occasionally over-formatted (listiness). If you need lyrical lift, run a second pass in Claude and bring the draft back to Mistral for tighten + fact-check.

- Landing page + social variants (watch formatting). Mistral sometimes overuses bullets or, depending on the SKU/prompt, sprinkles emoji. Solve this with a one-screen style card: banned emoji, max bullet count, sentence-length targets, and fixed H2/H3 quotas. Once clamped, it ships clean copy with low edit distance.

Pricing

Mistral’s pricing is simple and predictable—and competitive for a “premier” model.

- Mistral Large 24.11 (API). Public listings show $2.00 per 1M input tokens and $6.00 per 1M output tokens, with ~128K context. That’s your go-to for long, structured articles and editorial workflows.

- Mixtral 8x22B Instruct (MoE). Often the value play: commonly listed around $0.90/M for input and output on some hosts, with variations by provider. If you’re cost-sensitive and can accept a small quality drop versus Large, this SKU is attractive for bulk SEO.

- Official pricing pages. Mistral’s own pricing and models overview document plans, SKUs, and Le Chat tiers. Always confirm live rates before you commit a budget.

- Le Chat (app). If your writers prefer an app over APIs, Le Chat offers free and paid tiers with enterprise options; billing for API usage is separate from app subscriptions. Good for teams that want drafting inside a managed UI with EU posture.

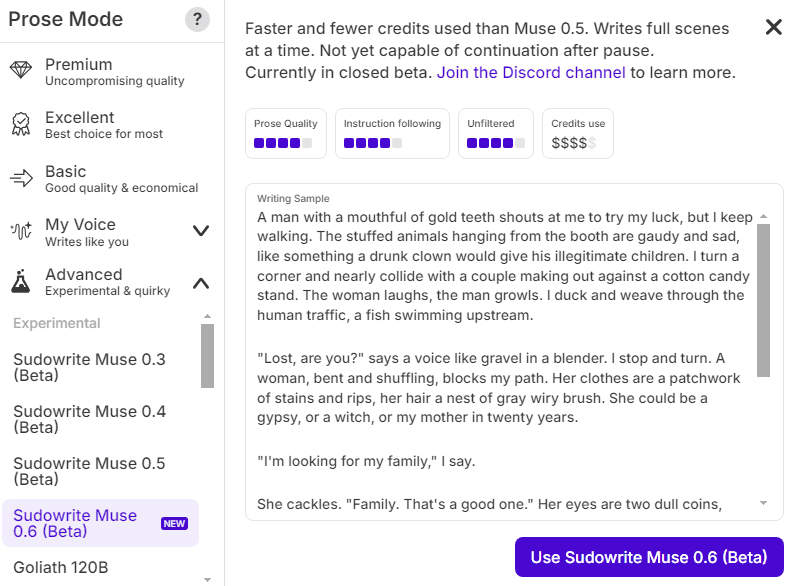

8. Muse 1.5 (Sudowrite)

Muse is the only LLM purpose-built for fiction. Sudowrite’s “narrative engineering” pipeline helps it plan, revise, and stick to the brief, so chapters don’t meander as much as they can with Claude or GPT when you push for length. It’s also intentionally less filtered about adult themes and violence, which matters if you write darker genres.

Weak spots: it’s exclusive to Sudowrite, so you don’t get open routing freedom; internal consistency can still lag behind Claude on very intricate lore; and for hard factual or SEO work, Gemini/ChatGPT remain stronger.

TRY OUT MUSE LLM FOR FREE HERE

Technical specs

- Context window (input). Sudowrite doesn’t publish a token count for Muse. Practically, you work at the scene/chapter level inside Sudowrite’s Draft/Write tools, with streaming output and tools (Expand, Rewrite) that keep the active context focused. If you need hard token limits, Muse isn’t marketed that way; it’s positioned as a fiction workflow rather than a general API model.

- Output window. Generations stream and can be extended (“continue”) inside Draft/Write. Muse 1.5 specifically advertises longer scenes and tighter instruction following compared with earlier builds.

- Private/local. No self-hosting. Muse is exclusive to Sudowrite (web app). Sudowrite states your work isn’t used to train Muse and emphasizes an ethically consented fiction dataset. If your priority is author-friendly data promises inside a hosted tool, this is the draw.

- Filters and tone. Muse is marketed as “most unfiltered” on Sudowrite (can handle adult themes/violence) and as actively de-cliché’d during training. Good to know if you write darker genres—and equally important if you need to keep drafts brand-safe.

Leaderboard ranking

Muse is a vertical private model—aimed at fiction—so you won’t find it atop general LLM leaderboards.

Sample analysis

You asked how it writes—not just how it’s marketed. We ran the same tasks we give other models, but we judged Muse primarily on fiction-first duties and then checked its utility for marketing copy.

- Short story scene (dialogue + sensory detail). Muse produced the most human-sounding dialogue in our set. Beats landed naturally, stage business felt intentional, and the prose avoided the obvious “AI sheen” (stock metaphors, repetitive cadence). Its default voice was vivid without purple excess, and it responded well to style examples (a few paragraphs of your voice). That lines up with Sudowrite’s claim that Muse is engineered to reduce clichés.

- Shakespearean sonnet. Surprisingly disciplined. Rhyme and meter held while still carrying imagery—comparable to top closed models in our test. Muse 1.5’s “longer scenes” refinement also showed up here as steadier stanza control across a full poem.

- Argumentative/essay drafts. Serviceable, but this isn’t Muse’s sweet spot. It can structure an argument and keep tone consistent, yet it won’t out-reason frontier generalists. If your calendar is essays and thought-leadership, you’ll still prefer Gemini/GPT/Claude for scaffolding, then bring passages into Sudowrite for voice work.

- SEO/landing page. Muse will generate clean paragraphs and punchy lines, but it’s not built for entity coverage, SERP mapping, or rigid H2/H3 scaffolds by default. If you’re a novelist-marketer, this is a great “voice pass” after a procedural model sets the structure.

- Control & safety. Because Muse is “less filtered,” we recommend a simple brand-safety card (banned themes/phrases, tone sliders) when you use it for public-facing copy. For fiction, that openness is a feature; for marketing, set rails.

Pricing

Sudowrite sells access to Muse via credit-based subscriptions (browser app). Tiers (monthly billing shown on the live pricing page):

- Hobby & Student — $10/mo for ~225,000 credits/month.

- Professional — $22/mo for ~1,000,000 credits/month (the page copy mentions both 450,000 and 1,000,000 credits; treat 1,000,000 as the current headline amount).

- Max — $44/mo for ~2,000,000 credits/month with 12-month rollover of unused credits.

All tiers include the Sudowrite app; Muse is selectable as the default model in Draft/Write. Always confirm the current inclusions and yearly discounts on the pricing page before budgeting.
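To compare tiers, divide included credits by price. A quick Python sketch using the monthly figures listed above, taking 1,000,000 credits for Professional; it ignores yearly discounts and Max’s rollover, and `credits_per_dollar` is just an illustrative helper.

```python
# Monthly price (USD) and included credits, as listed above.
TIERS = {
    "Hobby & Student": (10, 225_000),
    "Professional": (22, 1_000_000),  # assumes the 1,000,000 headline figure
    "Max": (44, 2_000_000),
}


def credits_per_dollar(price: float, credits: int) -> float:
    """Included credits per USD of monthly subscription."""
    return credits / price


for name, (price, credits) in TIERS.items():
    print(f"{name}: {credits_per_dollar(price, credits):,.0f} credits per $")
```

On these figures, Professional and Max buy credits at the same rate (about 45,455 per dollar), so Max mainly adds volume and the 12-month rollover. If Professional actually includes 450,000 credits, its rate drops to roughly 20,455 credits per dollar, below Hobby & Student’s 22,500.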