With advanced language models like ChatGPT, the line between human and machine writing is more blurred than ever.

So, how can you tell if a piece of content was generated by AI, and how reliable is this detection?

In this article, we’ll explore the signs that can help you identify AI content, and how reliable those signs are.

Is ChatGPT Writing Detectable?

Yes, there are certain indicators that might suggest content is ChatGPT-generated, but determining this with complete certainty can be tricky.

In fact, studies have shown that even trained educators can sometimes misjudge whether student work is AI-generated or not. This suggests that while there are clues, the human ability to detect AI writing is far from perfect.

One of the biggest challenges is that people can easily edit ChatGPT’s output to make it less detectable. A few changes to phrasing, tone, or structure can make AI-generated content feel more human, blending it seamlessly with other types of written work.

Another complicating factor is the variability in how ChatGPT responds to different prompts. Depending on the input, the AI can generate content that ranges from highly generic to surprisingly nuanced, which makes it challenging to set consistent rules for detection.

Ultimately, while it’s possible to make educated guesses about whether content is AI-generated, you can never be 100% certain. There are tools available to help with detection, but even these have limitations. They often struggle with accuracy, particularly when content has been deliberately modified to avoid detection.

Signs of ChatGPT-Generated Writing

Even though detecting ChatGPT-generated content is difficult, there are still some signs that suggest a piece of writing was produced by AI:

1. Passive and Impersonal Tone

ChatGPT often uses a passive and detached voice, which can make the writing feel less engaging. You might notice that the text lacks first-person or second-person pronouns like “I,” “you,” or “we,” which human writers frequently use to create a conversational and relatable tone.

This impersonal style can be a telltale sign that the content was generated by an AI.
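
As a rough illustration, the pronoun check described above can be sketched in a few lines of Python. The pronoun list and the function name `personal_pronoun_rate` are assumptions made for this example, not part of any established detector, and a low score is only a hint, never proof.

```python
import re

# Hypothetical pronoun list chosen for illustration only.
PERSONAL_PRONOUNS = {"i", "me", "my", "we", "us", "our", "you", "your"}

def personal_pronoun_rate(text: str) -> float:
    """Return the share of words that are first- or second-person pronouns."""
    # Lowercase the text and keep only runs of letters as words.
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in PERSONAL_PRONOUNS)
    return hits / len(words)
```

A rate near zero across a long text matches the impersonal style described here, but plenty of human writing (reports, reference material) scores just as low.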

2. Lack of Originality and Nuance

AI-generated content frequently provides generic information without much depth. ChatGPT often relies on widely known facts and may fail to offer the unique insights or perspectives that a human writer might bring.

Text that seems broad, lacks nuance, or avoids specific examples could be an indicator that it was produced by an AI.

3. Repetitive Structures and Patterns

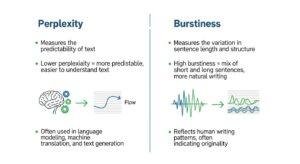

One common characteristic of AI-generated content is a repetitive structure. ChatGPT tends to produce similar sentence lengths and predictable paragraph organization. Unlike human-written content, which usually features a dynamic flow with varied sentence structures and pacing, AI content can feel monotonous.

Additionally, AI often reiterates points without adding new value, making the content seem repetitive and less direct.
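
One way to put a number on "similar sentence lengths" is to measure how much sentence length varies across a text. The sketch below is a simplified assumption of how that could be done: it splits sentences naively on `.`, `!`, and `?`, and the function names are hypothetical. It is not how any particular detection tool works.

```python
import re
import statistics

def sentence_lengths(text: str) -> list[int]:
    """Split on sentence-ending punctuation and count the words per sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def length_variation(text: str) -> float:
    """Return the standard deviation of sentence length divided by the mean.

    Values close to 0 mean the sentences are all about the same length.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)
```

A low value points to uniform pacing, which fits the pattern described above, but short or heavily edited texts can produce misleading scores either way.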

4. Absence of Personal Anecdotes

Human writers often use anecdotes or personal examples to make their content relatable and engaging. ChatGPT, however, lacks personal experiences and, therefore, rarely includes these kinds of stories.

If the text feels impersonal and lacks the kind of personal touch that usually makes writing more relatable, this could indicate AI involvement.

Are AI Detectors Reliable?

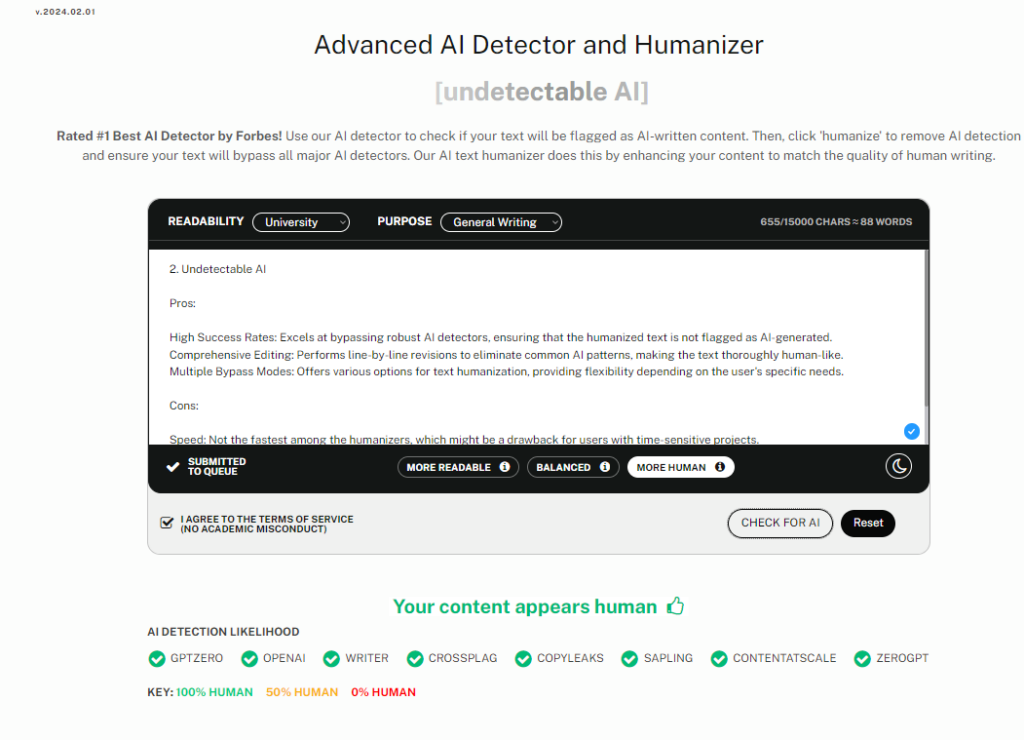

When it comes to identifying AI-generated content, AI detectors are one of the main tools available. However, relying solely on tools such as Originality.AI and CopyLeaks to verify the authenticity of content has its limitations:

- These detectors often struggle with accuracy when they encounter content that has been deliberately modified to evade detection. In some cases, accuracy rates for detecting AI-generated content have dropped as low as 17.4%, which is far from reliable.

- Another significant issue with AI detectors is their tendency to display bias. Research has demonstrated that content written by non-native English speakers is more likely to be flagged as AI-generated, even when it’s completely human-written. This bias can lead to unfair judgments, especially in academic or professional settings.

- AI detectors are also often inconsistent in their judgments. Sometimes, these tools give an “uncertain” response, indicating they can’t definitively say whether the content is human or AI-generated. This inconsistency leads to both false positives and false negatives, making the tools less trustworthy.

- AI detectors also lose significant accuracy with each new LLM release. The rapid advancement of large language models means that detection methods must constantly adapt to new versions. For example, there’s a noticeable difference in detection accuracy between older models like GPT-3.5 and more advanced ones like GPT-4o or Claude 3.5.

- Finally, formal and structured writing styles are more likely to be flagged as AI-generated. This is why some detection tools, such as Originality.AI, advise users to focus on more informal writing styles to avoid false flags.
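
If you want to check a detector yourself, the false positives and false negatives mentioned above can be counted on a small set of texts whose origin you already know. The following is a minimal sketch under that assumption. The function name `error_rates` and the input format (pairs of "was it really AI?" and "did the detector flag it?") are made up for this example.

```python
def error_rates(results: list[tuple[bool, bool]]) -> tuple[float, float]:
    """Compute (false positive rate, false negative rate).

    Each item in results is (is_ai, flagged):
      is_ai   -- True if the text is known to be AI-generated
      flagged -- True if the detector labeled it as AI-generated
    """
    human_flags = [flagged for is_ai, flagged in results if not is_ai]
    ai_flags = [flagged for is_ai, flagged in results if is_ai]

    # False positive: a human-written text flagged as AI.
    fpr = sum(human_flags) / len(human_flags) if human_flags else 0.0
    # False negative: an AI-generated text that was not flagged.
    fnr = sum(1 for f in ai_flags if not f) / len(ai_flags) if ai_flags else 0.0
    return fpr, fnr
```

A high false positive rate matters most in academic or professional settings, because it means human writers are being wrongly accused.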

How to Tell If It’s ChatGPT Writing

Detecting AI-generated writing is a complex task, and while there are tools and signs to help, they are far from foolproof. If you suspect that a piece of content was generated by ChatGPT, here are some practical steps you can take to evaluate it:

- First, understand the limitations of AI detection. AI-generated content can be manipulated to evade detection, which means that even the best tools available may not be able to accurately identify it. Edited AI output can closely resemble human writing, especially if the changes are made to adjust phrasing, tone, or structure. Therefore, be cautious when making a definitive judgment, especially in high-stakes environments like academic settings or professional publishing.

- Next, look for the common signs of AI writing. As discussed earlier, passive tone, lack of originality, repetitive structures, and the absence of personal anecdotes are common markers. Evaluate whether the content lacks the depth or unique perspective that you would expect from a human writer. If the writing feels too broad, avoids specifics, or repeats the same points without adding new value, it could indicate AI involvement. However, none of these signs alone is definitive proof.

- Given these limitations, ensure transparency in your assessment. If you are using AI detection tools to make a judgment, be clear about which tools you are using and what criteria you are relying on.

So here’s the takeaway: there are some signs that a piece is AI-generated, but none of them lets you be 100% sure.