Imagine trying to read more than 14,000 new papers every single day. That’s what it would take to keep pace with the 5.14 million scholarly articles published in 2024 alone.

That’s where AI comes in.

In this guide, you’ll see nine picks evaluated on three things that matter: their core features, the quality of the review they deliver, and what they cost you or your lab.

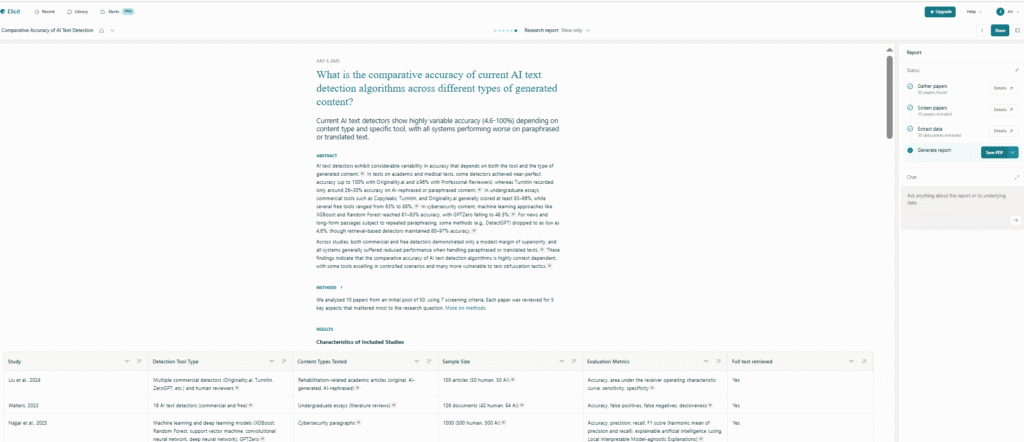

1. Elicit — The Question‑First Research Assistant

Elicit is one of the rare AI tools designed from the ground up for literature reviews. Instead of throwing you a keyword box, it starts with your research question and hunts across 125 million papers for the best answers.

Main Features — What You Get

- Question‑based semantic search – Paste a plain‑language question and Elicit ranks the studies most likely to answer it. No Boolean syntax needed.

- Instant paper tables – Summarises abstracts into a matrix of population, intervention, outcome, and methodology so you can scan evidence at a glance.

- Guided systematic‑review workflow – New 2025 flow walks you through search, title/abstract screening, full‑text screening, and data extraction, then exports everything to CSV.

- PDF data extraction – Pull numeric results or qualitative quotes into structured columns; high‑accuracy mode available on paid tiers.

- Reference‑manager links – One‑click export to Zotero, or to RIS and BibTeX files, keeps your citations tidy.

- Team collaboration – Pro and Team plans let multiple reviewers live‑edit decisions and track progress in real time.
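Once you've exported a screening round to CSV, a few lines of Python can tally your decisions for the write-up. This is a minimal sketch, not part of Elicit: it assumes the export has a column named `decision` holding values such as "Include" or "Exclude". Check the header row of your own file and change `decision_column` to match.

```python
import csv
from collections import Counter
from typing import Iterable


def screening_summary(lines: Iterable[str], decision_column: str = "decision") -> Counter:
    """Count screening decisions in CSV text.

    `decision_column` is an assumed header name; rename it to whatever your
    export actually uses. Values are trimmed and lower-cased so "Include"
    and "include " are counted together.
    """
    reader = csv.DictReader(lines)
    return Counter(row[decision_column].strip().lower() for row in reader)


if __name__ == "__main__":
    # In-memory stand-in for an export file. With a real file, pass
    # open("your_export.csv", newline="", encoding="utf-8") instead.
    sample = ["title,decision", "Paper A,Include", "Paper B,Exclude", "Paper C,include"]
    print(screening_summary(sample))
```

Passing an open file works the same way, because `csv.DictReader` accepts any iterable of text lines.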

Quality of the Review — Strengths and Limits

Independent studies show Elicit can cut systematic‑review time by up to 80 % without major loss of rigor. A 2025 BMC Methods paper found Elicit recovered 3 of 6 key studies missed by a traditional umbrella review yet still missed 14 others, underscoring that human screening remains essential.

Where Elicit shines

- Broad recall across disciplines – Semantic search surfaces papers that don’t share obvious keywords, reducing blind spots.

- Transparent reasoning – Every AI‑generated answer includes direct quotes, so you can verify context fast.

Where you still need caution

- Bias toward well‑indexed journals – Niche or very recent work can slip through because of limited database coverage.

- Reproducibility variance – Repeat runs of the same query returned anywhere from 169 to 246 hits in one test, so record the exact query, run date, and hit count every time to keep an audit trail.
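Acting on the reproducibility caveat doesn't require anything from Elicit itself. A minimal sketch, assuming you copy each run's hit count by hand from the interface: append every search run (timestamp, tool, exact query, hit count) to a JSON Lines file, then check how far the counts drift between runs. The file layout and function names are illustrative, not an Elicit feature.

```python
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Iterable


def make_entry(query: str, tool: str, hits: int) -> dict:
    """Build one audit-log record; `hits` is typed in by hand from the tool's UI."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "tool": tool,
        "query": query,
        "hits": hits,
    }


def append_entry(entry: dict, path: Path) -> None:
    """Append a record to a JSON Lines file (one JSON object per line)."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")


def read_log(path: Path) -> list[dict]:
    """Load every record from the log, skipping blank lines."""
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def hit_range(entries: Iterable[dict], query: str) -> tuple[int, int]:
    """Smallest and largest hit count logged for an exact query string."""
    hits = [e["hits"] for e in entries if e["query"] == query]
    if not hits:
        raise ValueError(f"no runs logged for query: {query!r}")
    return min(hits), max(hits)


if __name__ == "__main__":
    q = "Does intermittent fasting reduce inflammation?"
    runs = [make_entry(q, "Elicit", 169), make_entry(q, "Elicit", 246)]
    print(hit_range(runs, q))  # → (169, 246)
```

In practice you would call `append_entry` after each run and `hit_range(read_log(path), query)` when writing up your methods.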

Pricing — What It Costs to Deploy

| Plan | Monthly cost | Key limits |
|---|---|---|
| Basic (Free) | $0 | Unlimited search, 4 paper summaries, 20 PDF extractions |
| Plus | $12 | 8 summaries, 50 PDF extractions, five‑column tables |
| Pro | $49 | Full systematic‑review flow, 200 PDF extractions, unlimited high‑accuracy columns |
| Team | Quote | 300 PDF extractions, shared projects, admin panel |

All tiers let you buy extra credits at about $1 per 1 000 tokens when you hit extraction caps, so you pay extra only when your workload spikes.

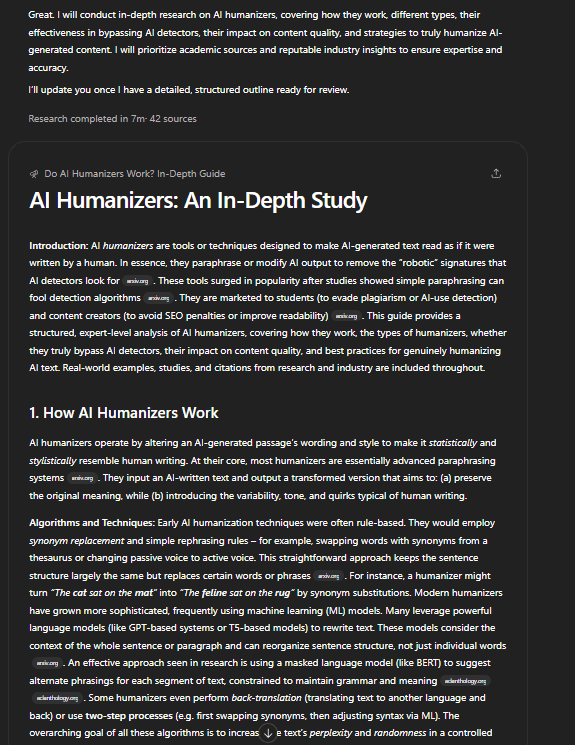

2. ChatGPT, Gemini, Perplexity Deep Research

ChatGPT, Gemini, and Perplexity all offer agent-style web researchers that plan searches, read sources, and hand you a fully cited report.

Key Features:

- ChatGPT Deep Research

  - Autonomous workflow: once you select Deep Research, ChatGPT breaks your prompt into subtasks, issues dozens of live web queries, reads the pages, and refines its plan until it can draft a multi-page report. The process is transparent: you can open a side panel to see a summary of its reasoning steps and every URL it opened.

  - Rich inputs & outputs: you can attach PDFs, images, or spreadsheets; the agent reads them alongside its web finds, weaves data tables or charts into the final write-up, and footnotes every claim.

  - Timing & scale: simple topics finish in ≈5 minutes; deep industry or policy reports may take 30 minutes but still compress hours of human work.

- Gemini Deep Research

  - Interactive plan-builder: before it starts, Gemini 2.5 Pro shows you an editable research outline; you can add or remove sub-questions, then watch a live “thoughts” stream as the agent browses hundreds of sites.

  - Multimodal reasoning: besides web pages, Gemini accepts images, large codebases, and up to 1 million tokens of text, so you can drop a 1 500-page industry report in and ask it to cross-check fresh sources.

  - Canvas & export: results arrive as a scrollable report plus an interactive Canvas you can reorganize, convert into mind-maps, or copy straight into Docs/Slides.

- Perplexity Deep Research

  - Search-and-code loop: the agent iteratively searches the web, runs snippets of Python when helpful (e.g., quick stats from tables), ranks evidence, and writes a narrative answer with inline footnotes that expand to full citations.

  - Fast export & sharing: when finished (usually 2-4 minutes), you can export to PDF or turn the output into a public Perplexity Page for colleagues.

  - Spaces & follow-ups: store a research session in a “Space,” reopen it later, and ask follow-up questions that build on the same evidence, which is handy for living literature reviews.

Underlying Model:

- ChatGPT – Runs on a version of OpenAI’s o3 reasoning model optimized for web browsing and data analysis, which lets it plan multi-step searches and reason over long documents.

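- Gemini – Uses Gemini 2.5 Pro (1 M token window) for Deep Research. Its sparse Mixture-of-Experts architecture routes each token through only a subset of the network’s parameters, which keeps a very large model efficient; the experts are internal to the model, not separate specialists the agent calls.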

- Perplexity – Orchestrates external LLMs (GPT-4o, Claude 3.7, Gemini Flash) behind its own retrieval-and-reasoning stack; this hybrid scored 21.1 % on the tough Humanity’s Last Exam benchmark.

Pricing & Usage Limits:

| Plan | ChatGPT Deep Research | Gemini Deep Research | Perplexity Deep Research |
|---|---|---|---|
| Free tier | Not available | Free preview (daily cap) | 5 runs / day |
| Entry | Plus $20/mo → 10 runs / mo | Google One AI Premium $19.99/mo → unlimited personal use | Pro $20/mo → 300 runs / day |
| Power | Pro $200/mo → 120 runs / mo, longer contexts | n/a | Max $50/mo → unlimited runs, API credits |
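If you're choosing between the two ChatGPT tiers, a back-of-the-envelope calculation helps. This sketch divides each plan's monthly price by its run allowance from the table; it assumes you use every run, so your real per-run cost is higher if you don't. Gemini and Perplexity are left out because their limits are daily or unlimited rather than monthly. Plan limits change often, so update the numbers before relying on the result.

```python
# Monthly price in USD and Deep Research runs per month, copied from the table.
PLANS = {
    "ChatGPT Plus": (20.00, 10),
    "ChatGPT Pro": (200.00, 120),
}


def cost_per_run(monthly_price: float, runs_per_month: int) -> float:
    """Effective price per run, assuming every run in the allowance is used."""
    return monthly_price / runs_per_month


if __name__ == "__main__":
    for plan, (price, runs) in PLANS.items():
        print(f"{plan}: ${cost_per_run(price, runs):.2f} per run")
```

With these figures Plus works out to $2.00 per run and Pro to about $1.67. Pro is only cheaper per run if you actually use most of its allowance: at 10 runs a month it costs $20 per run.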

Performance & User Reviews:

- ChatGPT Deep Research

  - Tech journalists found the reports “worth the 30-minute wait,” praising the clarity of citations but noting occasional reliance on Wikipedia or weaker sources that need human vetting.

  - Benchmark lead: 26.6 % on Humanity’s Last Exam, the highest published score for an autonomous research agent when it launched in February 2025.

- Gemini Deep Research

  - TechRadar applauds Gemini’s free access and real-time thought stream, calling it “great for casual queries,” but testers say it can “over-think” niche engineering problems and still lags ChatGPT on numerical accuracy.

  - Strengths lie in multimodal prompts (pictures of lab setups, raw CSVs) and the giant context window, which reviewers say “lets you paste an entire dissertation and ask follow-ups in seconds.”

- Perplexity Deep Research

  - Researchers love the speed and footnoted answers: Tom’s Guide’s month-long test concluded it’s “a compelling Google replacement for learning-heavy tasks.”

  - Community feedback on Reddit highlights its transparency but notes that newer updates sometimes run slower or over-include marginal sources; still, most users find accuracy solid for literature sweeps.

  - On benchmarks, Perplexity’s 21.1 % HLE score beats Gemini Thinking and open-source rivals, though it trails ChatGPT’s agent.

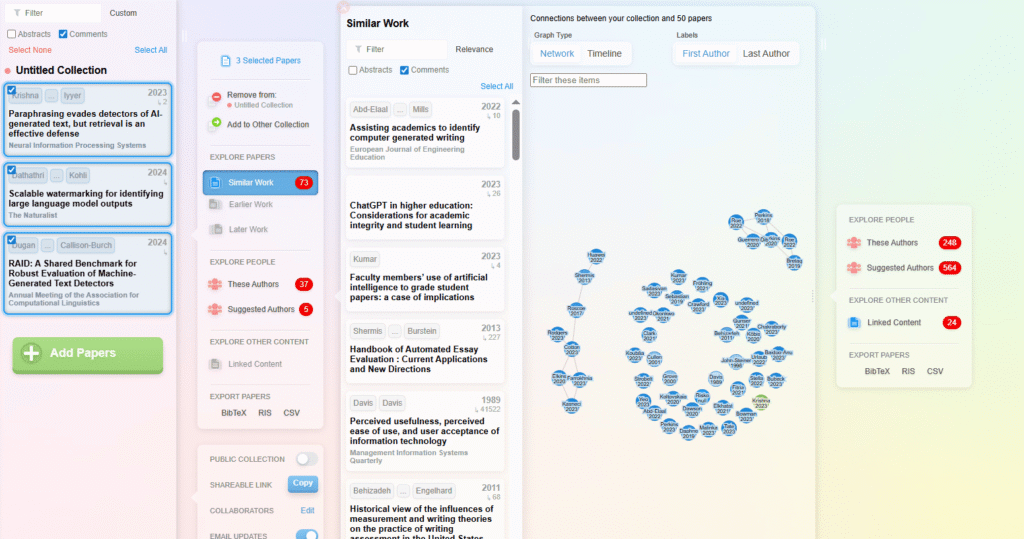

3. ResearchRabbit — Your Visual Map for Fast Paper Discovery

Instead of static search results, ResearchRabbit builds live networks of citations, co‑authors, and topics so you can jump from one pivotal study to the next in seconds. That visual approach makes it easy for you to trace ideas and unexpected connections.

Main Features — What You Can Do

- Interactive citation graphs — Drop one paper (or an entire folder) into the workspace and watch a force‑directed map show the earlier work it cites, the later work that cites it, and similar papers. You can filter by year, author, or relevance and save any node to a collection.

- Author and topic networks — Toggle to “Authors” to see who collaborates with whom, or switch to “Concepts” to trace jargon across sub‑fields. Great when you’re learning a new domain from scratch.

- Personalised recommendations — The more papers you add to a collection, the sharper the AI becomes at serving fresh, related work. Weekly email alerts keep you updated without manual searches.

- Collaboration and sharing — Invite co‑authors or students, leave comments, and build a shared reading list in real time. Useful for lab groups chasing the same research question.

- One‑click export — Push selected papers straight to Zotero or EndNote, so citations stay consistent across your writing stack.

These features mean you spend minutes, not days, locating clusters of relevant studies.

Quality of the Review — Strengths and Watch‑outs

ResearchRabbit shines when you need breadth fast. Visual maps help you see seminal papers and fringe offshoots at a glance, which is perfect for the exploratory stage of a literature review. In a 2025 methods study, researchers shaved 60 % off their initial scanning time compared with a traditional keyword search.

But there’s a catch: the platform leans on the Microsoft Academic Graph, which stopped updating in 2021. That means very recent pre‑prints or niche journals can slip through the net.

Users also report occasional “recommendation loops,” where the tool keeps surfacing the same cluster of papers. The fix is simple: seed your collections with a wider variety of sources or cull redundant nodes.

Pricing — What It Will Cost You

For now, ResearchRabbit is 100 % free for individual researchers, students, and small teams. There are no paywalls on graph generation, sharing, or alerts. Institutions can request enterprise‑level access, but the core experience costs you nothing—an unbeatable ROI if you’re bootstrapping a review.

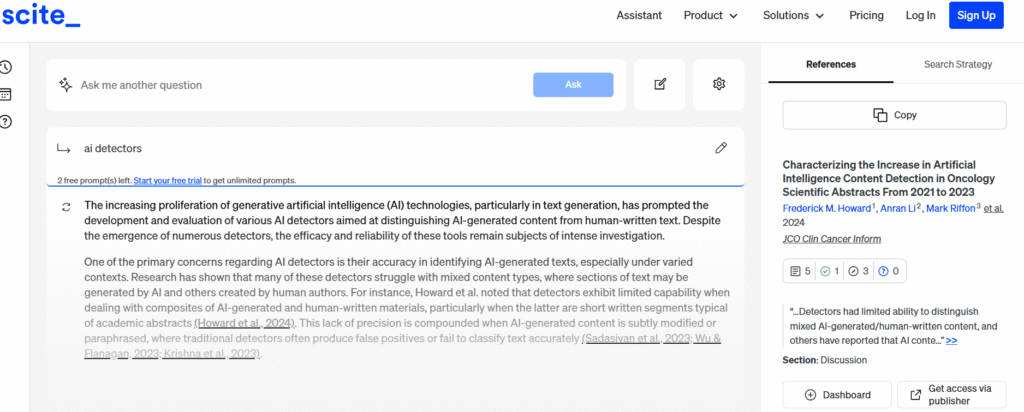

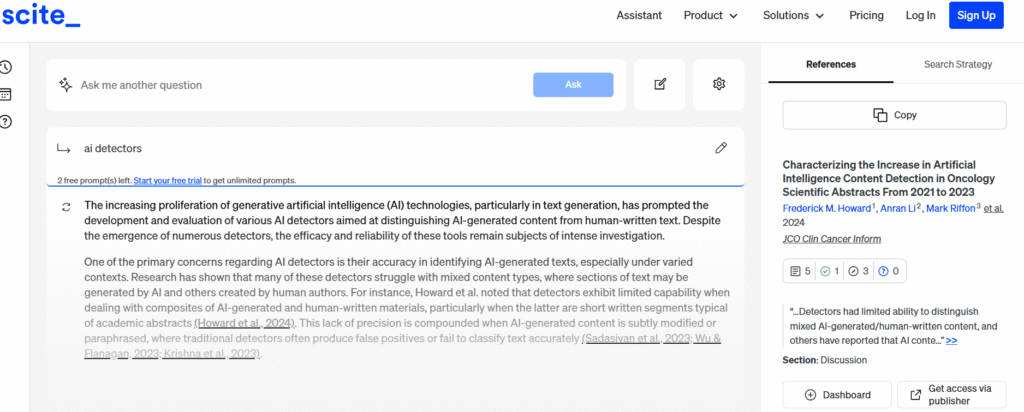

4. Scite Assistant — Fact‑Checking Your Sources in Real Time

Scite flips the usual literature‑search model on its head. Rather than counting raw citations, it shows how each study is cited—does a later paper support, contradict, or just mention it? That nuance gives you instant quality control while you build your review.

Main Features — What You Can Do

- Smart Citations – Every result carries three simple tags: supports, contrasts, or mentions. You see at a glance whether the community agrees with a claim or challenges it.

- AI Research Assistant – Ask plain‑language questions and get ranked answers with citation contexts included. It works inside your browser, so you never leave the workspace.

- Reference & Retraction Checks – Paste a bibliography, and Scite flags retracted or heavily disputed papers before they slip into your draft.

- Data‑Extraction Tables – New “Tables” mode pulls numbers and key phrases from PDFs into structured rows, saving you from manual copy‑paste.

- Citation Alerts & Dashboards – Set custom alerts for new supporting or contrasting evidence and track paper impact over time.
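Scite doesn't publish how its retraction check works internally, but the core idea can be illustrated: match your bibliography's DOIs against a list of known retractions. In this sketch the retraction list is a hypothetical set you would load from a source you trust, and the sample DOIs use the 10.5555 test prefix. DOIs are normalised first because the same DOI often appears as a bare string, a `doi:` string, or a `https://doi.org/` URL, and DOI names are case-insensitive.

```python
from typing import Iterable

# Common ways the same DOI shows up in reference lists.
_DOI_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalise_doi(doi: str) -> str:
    """Lower-case a DOI and strip any URL or 'doi:' prefix so strings compare reliably."""
    doi = doi.strip().lower()
    for prefix in _DOI_PREFIXES:
        if doi.startswith(prefix):
            doi = doi[len(prefix):].strip()
            break
    return doi


def flag_retracted(bibliography: Iterable[str], retracted: Iterable[str]) -> list[str]:
    """Return bibliography entries whose DOI appears in a list of known retractions."""
    known = {normalise_doi(d) for d in retracted}
    return [d for d in bibliography if normalise_doi(d) in known]


if __name__ == "__main__":
    # Hypothetical DOIs; load a real retraction list in practice.
    my_refs = ["https://doi.org/10.5555/ABC.123", "doi:10.5555/xyz.456", "10.5555/ok.789"]
    retractions = {"10.5555/abc.123"}
    print(flag_retracted(my_refs, retractions))
```

The original strings are returned unchanged, so you can find each flagged entry in your draft exactly as you wrote it.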

Quality of the Review — Strengths and Watch‑outs

Scite’s biggest win is credibility filtering. Instead of skimming dozens of abstracts, you jump straight to the citations that debate your research question. Users report cutting screening time by half, especially when vetting hot topics flooded with low‑quality studies.

Because the platform now tracks over 1.2 billion citation statements, coverage is broad across STEM and social science fields. However, grey literature and very new pre‑prints can lag a few weeks. Always cross‑check arXiv or medRxiv if your field moves fast.

Pricing — What It Will Cost You

Scite keeps entry low. You start with a seven‑day free trial that unlocks all tools. After that:

- Personal plan costs about $12 per month when billed annually. That includes unlimited assistant chats, Smart Citations, and reference checks.

- Institutional licenses scale to lab or campus size and add centralized billing plus custom onboarding. Pricing is by quote, so budget-conscious teams can negotiate.

If you cancel, your saved projects remain view‑only, so you never lose the audit trail.

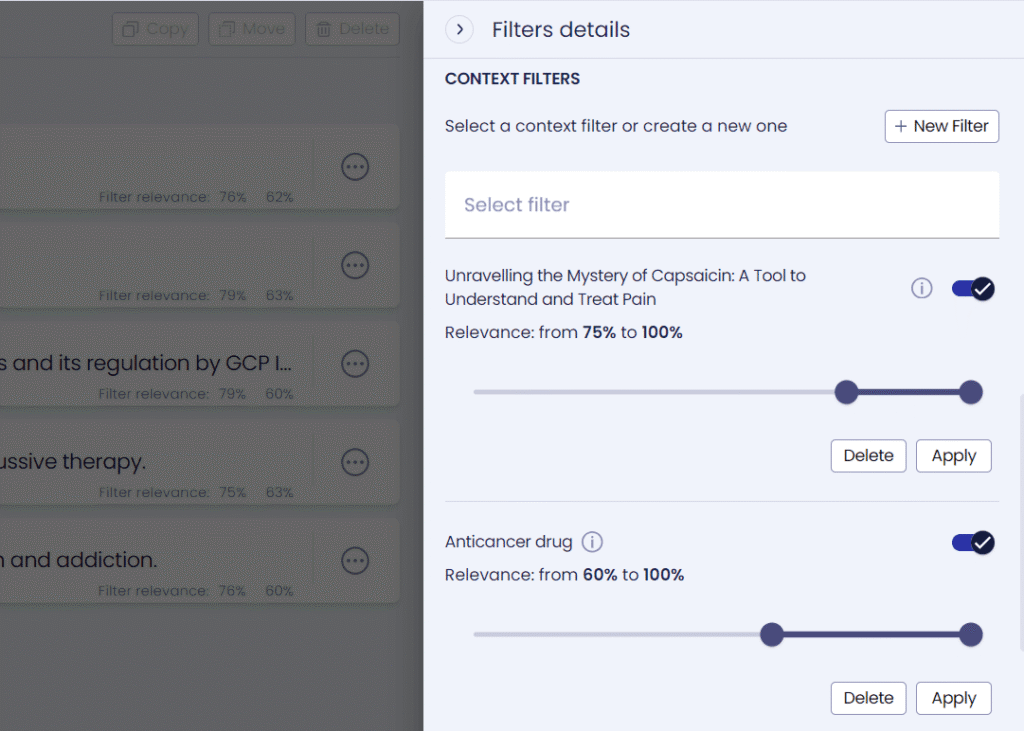

5. Iris.ai Researcher Workspace — Deep Semantic Discovery for Serious Reviews

Iris.ai positions itself as an end‑to‑end research cockpit. Instead of just surfacing PDFs, it builds a semantic map of your topic, extracts data points from each paper, and lets you shape a private knowledge graph.

Main Features — What You Can Do

- Concept‑based smart search — Paste an abstract or problem statement; Iris.ai rewrites it into a mesh of scientific concepts and retrieves papers that share meaning, not just keywords.

- Reading‑list analyser — Drop hundreds of PDFs and receive auto‑generated summaries plus topic clusters, making big corpora digestible.

- Autonomous data extraction — Pull numeric values, chemical formulas, or quoted findings into structured columns for meta‑analysis; high‑accuracy mode handles messy PDF layouts.

- Custom knowledge graphs — Pin key concepts and see how they interlink across papers; perfect for spotting research gaps or conflicting evidence.

- Dataset import — Upload in‑house reports or patents to search alongside public literature, keeping proprietary insight in one workspace.

Quality of the Review — Strengths and Watch‑outs

Users praise Iris.ai for cutting reading time by 50–70 %, thanks to semantic clustering and instant summaries. It shines when terminology varies—say, “gene drive” vs “homing endonuclease”—because concept search still groups them.

Two caveats matter to you:

- Onboarding curve — Building the first knowledge graph takes practice. Expect a few hours of setup before the gains kick in.

- Compute latency — Large batch extractions can feel slow on the free trial servers; enterprise-grade speed sits behind paid tiers.

Pricing — What It Will Cost You

Iris.ai offers a 10‑day free trial of the full workspace. After that, pricing shifts to quotation‑based enterprise or academic site licences; no perpetual free tier exists on the current Researcher Workspace.

Early adopters who pre‑registered kept “preferred prices” estimated around €39–€59 per user per month for small labs, but 2025 plans list custom quotes based on seat count and GPU usage.

6. Scholarcy — One‑Click Summaries and Flashcards for Speed Readers

Scholarcy is the “CliffsNotes” engine for academic papers. Drop a PDF, chapter, or webpage, and it spits out concise flashcards, key facts, figures, and references—ready for rapid review or export to your reference manager.

Main Features — What You Can Do

- AI flashcard summaries — Scholarcy scans each paper, highlights claims, methods, and findings, then converts them into bite‑size cards with clickable links to the original text.

- Literature matrix builder — Paid plans let you stitch multiple summaries into a spreadsheet‑style matrix that shows study design, sample size, outcomes, and more—ideal for systematic reviews.

- Reference & figure extraction — The model pulls cited sources, tables, and important numbers into separate lists so you can track evidence without manual copy‑paste.

- Browser extension & batch upload — Add a button to Chrome or Edge, bulk‑upload dozens of PDFs, and process them in the background while you work.

- Text‑to‑speech and accessibility helpers — Adjustable font, audio playback, and colour themes support diverse reading needs.

Quality of the Review — Strengths and Watch‑outs

Researchers praise Scholarcy for chopping reading time by 40–60 %; the flashcards surface main claims quickly and flag missing data like confidence intervals or p‑values. It excels when you need quick comprehension, teaching aids, or a first pass before deeper critique.

Limits to note: summaries remain surface‑level; you still need to read the original for nuanced methods or complex stats. PDFs with heavy mathematical notation sometimes render garbled equations, so double‑check technical sections. And while extraction accuracy is strong for peer‑reviewed articles, grey literature quality can vary.

Pricing — What It Will Cost You

You get one to three free summaries per day on the basic tier—helpful for light use. The Scholarcy Plus plan starts around $5–$10 per month (or $45–$90 annually, depending on promotion and region) and unlocks unlimited summaries, matrix export, note‑taking, and bulk flashcard downloads. New users receive a seven‑day full‑feature trial to test workload fit. Institutional licences add shared libraries and admin controls via custom quotes.

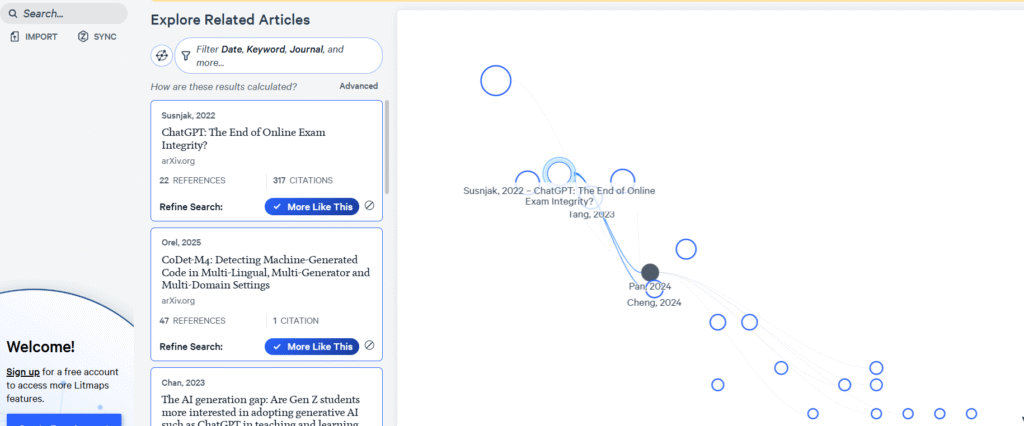

7. Litmaps — Always‑Updating Citation Maps for Living Reviews

Litmaps takes the visual approach of ResearchRabbit and turns it into a full tracking system. You drop a single “seed” paper and the platform draws a map that keeps refreshing as new studies appear.

Main Features — What You Can Do

- Dynamic citation maps — Paste a DOI or upload a RIS file. Litmaps plots every citing and cited paper, then calculates semantic distance so closely related studies cluster together.

- Live alerts baked into maps — Switch on “Auto‑update” and the map adds new nodes the moment they hit Crossref or Semantic Scholar. You stop worrying about missed releases.

- Intersection mode — Combine multiple maps and instantly see the overlap between two sub‑fields—handy when your topic sits at a discipline boundary.

- Focus filters — Hide low‑citation or low‑relevance papers, narrow by year, or spotlight highly connected “hub” articles so your screen stays usable.

- Quick export — With one click, push selected papers to BibTeX, Zotero, or a simple CSV reading list.
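Litmaps doesn't document its internals, so this only sketches the idea behind two of these features, under the assumption that each map is a set of papers identified by DOI: Intersection mode behaves like a set intersection, and Focus filters behave like predicates on citation count and year. `Paper`, the sample DOIs, and the map names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)  # frozen makes records hashable, so they can live in sets
class Paper:
    doi: str
    year: int
    citations: int


def intersect_maps(*maps: set) -> set:
    """Papers present on every map: the overlap between two or more sub-fields."""
    if not maps:
        return set()
    return set.intersection(*maps)


def focus(papers: Iterable[Paper], min_citations: int = 0,
          since_year: Optional[int] = None) -> list:
    """Drop low-citation or older papers, then list the most-cited first."""
    kept = [
        p for p in papers
        if p.citations >= min_citations and (since_year is None or p.year >= since_year)
    ]
    return sorted(kept, key=lambda p: p.citations, reverse=True)


if __name__ == "__main__":
    a = Paper("10.5555/a", 2019, 120)
    b = Paper("10.5555/b", 2023, 4)
    c = Paper("10.5555/c", 2021, 40)
    ecology_map = {a, b, c}
    genomics_map = {a, c}
    overlap = intersect_maps(ecology_map, genomics_map)
    print(focus(overlap, min_citations=50))
```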

Quality of the Review — Strengths and Watch‑outs

Researchers rate Litmaps high for discovery depth. Because the algorithm weighs citation networks over raw keywords, it surfaces relevant but differently phrased papers—a lifesaver in interdisciplinary work. One 2024 review logged a 35 % increase in novel papers found compared with a standard Scopus search.

Yet two cautions apply:

- Seed sensitivity — If your starting paper is fringe or poorly cited, the map can feel sparse. Start with two or three mainstream seeds to stabilise results.

- Citation lag — Very new pre‑prints can take weeks to appear because nodes rely on indexing services. Keep arXiv or medRxiv alerts running in parallel for bleeding‑edge fields.

Pricing — What It Will Cost You

You can build up to two Litmaps and explore 100 articles per map for free, enough for a pilot or a small assignment. The Pro plan bumps you to unlimited maps, unlimited articles, and weekly auto‑alerts for about $10 per month (regional academic discounts slice that by up to 75 %). Enterprise teams negotiate custom licensing.

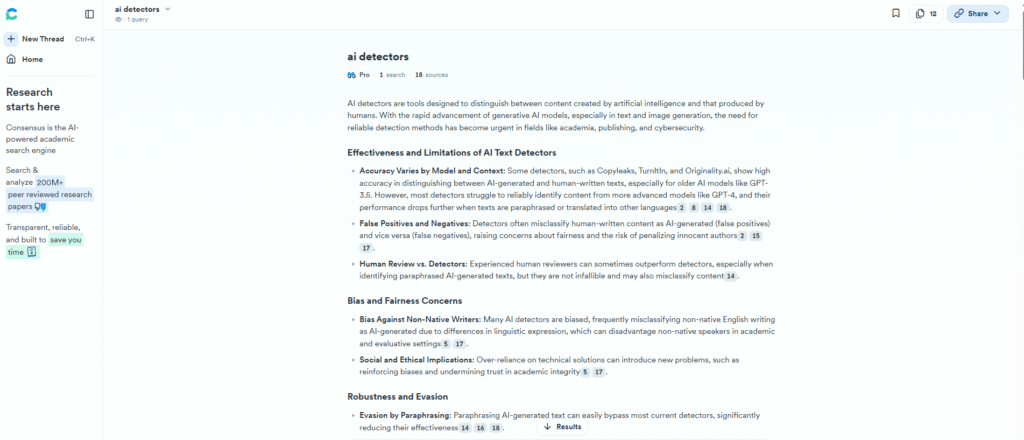

8. Consensus — Evidence‑Based Answers at One Question per Click

Consensus pitches itself as a fact‑checker for science questions. You type a claim such as “Does intermittent fasting reduce inflammation?” and the engine searches over 200 million peer‑reviewed papers, then returns an aggregated answer with the strength of evidence and live citations.

Main Features — What You Can Do

- Claim‑based search — Start with a yes/no or comparative question in plain English. Consensus retrieves matching studies, clusters key findings, and shows a verdict bar (agree, disagree, mixed).

- Consensus Meter — A visual gauge that quantifies author agreement, so you see instantly whether the field is united or split.

- Deep Search mode (2025) — Toggle to break your query into twenty targeted sub‑queries, pull 1 000+ papers, and generate a structured mini‑report—perfect for gap spotting.

- Advanced filters — Limit results to RCTs, meta‑analyses, or specific publication years; pin high‑level evidence first and avoid rabbit holes.

- One‑click exports — Push selected studies to BibTeX or Zotero, keeping citations tidy in your write‑up.
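Consensus doesn't publish the formula behind its Meter. Under the simplifying assumption that the gauge reflects the share of retrieved studies in each verdict category, the arithmetic looks like this sketch; the verdict labels follow the agree/disagree/mixed bar described above, and the ten studies are hypothetical. It also makes the "binary bias" caveat in this section concrete: a 60/30/10 split counts every study equally, regardless of its size or design.

```python
from collections import Counter
from typing import Iterable


def meter(verdicts: Iterable[str]) -> dict:
    """Percentage of studies per verdict label, rounded to one decimal place."""
    counts = Counter(v.strip().lower() for v in verdicts)
    total = sum(counts.values())
    if total == 0:
        return {}  # no studies retrieved, so no meaningful split
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical verdicts for ten studies retrieved for one question.
    studies = ["agree"] * 6 + ["mixed"] * 3 + ["disagree"]
    print(meter(studies))  # → {'agree': 60.0, 'mixed': 30.0, 'disagree': 10.0}
```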

Quality of the Review — Strengths and Watch‑outs

Consensus shines when you need a quick, defensible answer. The Claim‑based search and Meter strip away noise and show how confident the community is—saving you hours of manual scanning. Reviewers note that the engine is especially strong in nutrition, psychology, and health sciences, where yes/no debates dominate.

But remember two limits:

- Coverage gaps — While the database now spans 200 million papers, it still trails specialist indexes for very niche or newly posted pre‑prints. Always double‑check PubMed or arXiv if your field moves at warp speed.

- Binary bias — The verdict bar can oversimplify nuanced findings. Mixed evidence often hides methodological differences; you still need to open the cited PDFs before writing your synthesis.

Pricing — What It Will Cost You

Consensus follows a freemium model:

- Free plan — Unlimited basic searches, five Deep Search reports per month, standard export. Good for students or early scoping.

- Pro plan — Roughly $10 per month unlocks unlimited Deep Search, priority API access, and longer PDF previews—handy when you run weekly systematic updates.

- Institutional licences add SSO and usage analytics; pricing is by quote.

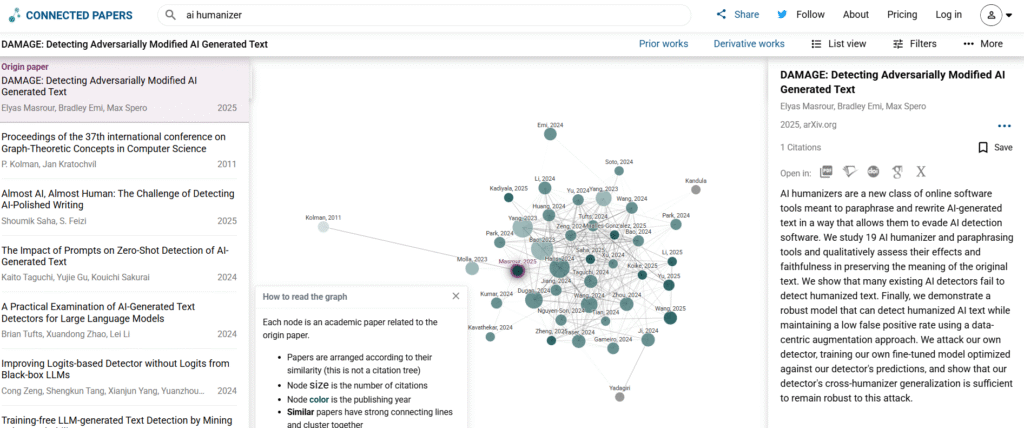

9. Connected Papers — Your Instant Map of Any Research Field

A bit like ResearchRabbit, Connected Papers turns one seed article into an interactive graph of related work. In seconds, you see which studies sit at the center of a topic, which ones branch into sub‑themes, and how interest has risen or faded over time.

Main Features — What You Can Do

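- Single‑click similarity graphs — Paste a DOI, title, or arXiv link and the tool plots a network of papers arranged by a similarity score built on co-citation and bibliographic coupling, so closely related papers sit together even when they don’t cite each other directly.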

- Prior & derivative filters — Toggle to show foundational work or the newest follow‑ups, so you spot gaps for future study.

- Year‑slider heat map — Drag a bar to visualise publication peaks and lulls; great for spotting when a field exploded or stalled.

- Graph history and saved papers — Keep a running stack of every map you build, then bookmark must‑read nodes for later.

- Multi‑origin graphs — Start with several seed papers and watch the networks merge; ideal when your review spans overlapping sub‑topics.

These actions let you jump from zero context to a panoramic view of the literature in minutes instead of days.
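Connected Papers describes its similarity metric as based on co-citation (two papers cited together by later work) and bibliographic coupling (two papers sharing references). The sketch below computes both counts on a hypothetical toy citation table. How Connected Papers weights and combines them is not reproduced here, so the unweighted sum in `similarity` is an assumption for illustration.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy corpus: each paper ID maps to the set of paper IDs it cites.
REFERENCES: Dict[str, Set[str]] = {
    "A": {"X", "Y", "Z"},
    "B": {"X", "Y"},
    "C": {"Z"},
    "D": {"A", "B"},
    "E": {"A", "B", "C"},
}


def bibliographic_coupling(p: str, q: str, refs: Dict[str, Set[str]]) -> int:
    """How many references papers p and q share."""
    return len(refs.get(p, set()) & refs.get(q, set()))


def co_citation(p: str, q: str, refs: Dict[str, Set[str]]) -> int:
    """How many papers cite both p and q."""
    return sum(1 for cited in refs.values() if p in cited and q in cited)


def similarity(p: str, q: str, refs: Dict[str, Set[str]]) -> int:
    # Assumption: an unweighted sum of the two counts, for illustration only.
    return bibliographic_coupling(p, q, refs) + co_citation(p, q, refs)


def rank_neighbours(seed: str, refs: Dict[str, Set[str]]) -> List[Tuple[str, int]]:
    """Every other known paper with a non-zero score, most similar first."""
    papers = set(refs) | {c for cited in refs.values() for c in cited}
    scores = {other: similarity(seed, other, refs) for other in papers if other != seed}
    return sorted(
        ((pid, s) for pid, s in scores.items() if s > 0),
        key=lambda item: (-item[1], item[0]),  # highest score first, ties by ID
    )


if __name__ == "__main__":
    print(rank_neighbours("A", REFERENCES))  # → [('B', 4), ('C', 2)]
```

Note that A and C never cite each other, yet C still ranks as a neighbour of A because both cite Z and both are cited by E. Meanwhile D, which cites A directly, scores zero under these two measures.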

Quality of the Review — Strengths and Watch‑outs

Users love Connected Papers for discovery. Visual clustering surfaces unexpected links and seminal work you might miss with keyword search. Reviewers rate its interface as “fast and intuitive”, citing a 4.5/5 usability score.

Yet two limits matter to you:

- One‑seed bias — If you rely on a single paper, the graph sometimes drifts toward that author’s niche. Starting with two or three contrasting seeds reduces skew.

- Database coverage — The engine draws on Semantic Scholar. Very new pre‑prints or obscure journals can be absent, so always supplement with PubMed, arXiv, or manual alerts.

Pricing — What It Will Cost You

Connected Papers keeps the entry barrier low. The Free plan lets you build five graphs every month with all core features intact. When your review scales, jump to the Academic plan at $5 per month (billed quarterly) for unlimited graphs, or the Business plan at $15 per month when you’re working in industry. Annual billing cuts those fees roughly in half. Group seats are available for team workflows.

Because each graph maps a whole neighborhood of related papers from a single seed, five per month often covers early scoping. Upgrade only when you’re running iterative searches daily.